Assignment:

1. Deploy a Kubernetes cluster

2. Get Nginx running and make sure it can be accessed

Assignment:

Prerequisite: redeploy Kubernetes with the network plugin switched to Calico.

1. Configure the Pods manually, using environment variables to generate their default configuration:

mydb

Give it a label

wordpress

Give it a label

In addition, create one Service resource each for mydb and wordpress:

mydb's only client is wordpress, so use type ClusterIP:

kubectl create service clusterip mydb --tcp=3306:3306 --dry-run=client -o yaml

wordpress clients may come from outside the cluster, so use type NodePort:

kubectl create service nodeport wordpress --tcp=80:80 --dry-run=client -o yaml

2. Try adding a livenessProbe and a readinessProbe to mydb and wordpress, and test their effect.

3. Try adding resource requests and resource limits to mydb and wordpress.

Mount NFS volumes in MySQL and WordPress

Configure the NFS server

# Install the server

[root@k8s-Ansible ~]#apt -y install nfs-kernel-server

[root@k8s-Ansible ~]#mkdir /data/mysql

[root@k8s-Ansible ~]#mkdir /data/wordpress

[root@k8s-Ansible ~]#vim /etc/exports

# /etc/exports: the access control list for filesystems which may be exported
# to NFS clients. See exports(5).
#
# Example for NFSv2 and NFSv3:
# /srv/homes hostname1(rw,sync,no_subtree_check) hostname2(ro,sync,no_subtree_check)
#
# Example for NFSv4:
# /srv/nfs4 gss/krb5i(rw,sync,fsid=0,crossmnt,no_subtree_check)
# /srv/nfs4/homes gss/krb5i(rw,sync,no_subtree_check)
#
/data/mysql 10.0.0.0/24(rw,no_subtree_check,no_root_squash)
/data/wordpress 10.0.0.0/24(rw,no_subtree_check,no_root_squash)

[root@k8s-Ansible ~]#exportfs -r

[root@k8s-Ansible ~]#exportfs -v

/data/mysql 10.0.0.0/24(rw,wdelay,no_root_squash,no_subtree_check,sec=sys,rw,secure,no_root_squash,no_all_squash)

/data/wordpress 10.0.0.0/24(rw,wdelay,no_root_squash,no_subtree_check,sec=sys,rw,secure,no_root_squash,no_all_squash)

# Install the NFS client on every node with Ansible

[root@k8s-Ansible ansible]#vim install_nfs_common.yml

---
- name: intallnfs
  hosts: all
  tasks:
  - name: apt
    apt:
      name: nfs-common
      state: present

[root@k8s-Ansible ansible]#ansible-playbook install_nfs_common.yml

PLAY [intallnfs] **************************************************************************************

TASK [Gathering Facts] ********************************************************************************

ok: [10.0.0.205]

ok: [10.0.0.204]

ok: [10.0.0.202]

ok: [10.0.0.203]

ok: [10.0.0.201]

ok: [10.0.0.207]

ok: [10.0.0.206]

TASK [apt] ********************************************************************************************

ok: [10.0.0.202]

changed: [10.0.0.205]

ok: [10.0.0.207]

changed: [10.0.0.206]

changed: [10.0.0.204]

changed: [10.0.0.203]

changed: [10.0.0.201]

PLAY RECAP ********************************************************************************************

10.0.0.201 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.0.0.202 : ok=2 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.0.0.203 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.0.0.204 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.0.0.205 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.0.0.206 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.0.0.207 : ok=2 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

Pod: mydb

Add an NFS volume; MySQL stores its data on the volume at /var/lib/mysql/

[root@k8s-Master-01 test]#cat mysql/01-service-mydb.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: mydb
  name: mydb
spec:
  ports:
  - name: 3306-3306
    port: 3306
    protocol: TCP
    targetPort: 3306
  selector:
    app: mydb
  type: ClusterIP

[root@k8s-Master-01 test]#cat mysql/02-pod-mydb.yaml

apiVersion: v1
kind: Pod
metadata:
  name: mydb
  namespace: default
  labels:
    app: mydb
spec:
  containers:
  - name: mysql
    image: mysql:8.0
    imagePullPolicy: IfNotPresent
    env:
    - name: MYSQL_ROOT_PASSWORD
      value: "123456"
    - name: MYSQL_DATABASE
      value: wpdb
    - name: MYSQL_USER
      value: wpuser
    - name: MYSQL_PASSWORD
      value: "123456"
    resources:
      requests:
        memory: "128M"
        cpu: "200m"
      limits:
        memory: "512M"
        cpu: "400m"
    # securityContext:
    #   runAsUser: 999
    volumeMounts:
    - mountPath: /var/lib/mysql
      name: nfs-volume-mysql
  volumes:
  - name: nfs-volume-mysql
    nfs:
      server: 10.0.0.207
      path: /data/mysql
      readOnly: false

[root@k8s-Master-01 test]#kubectl apply -f mysql/

Pod: wordpress

Add an NFS volume; WordPress stores its data on the volume at /var/www/html/

[root@k8s-Master-01 test]#cat wordpress/01-service-wordpress.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: wordpress
  name: wordpress
spec:
  ports:
  - name: 80-80
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: wordpress
  type: NodePort

[root@k8s-Master-01 test]#cat wordpress/02-pod-wordpress.yaml

apiVersion: v1
kind: Pod
metadata:
  name: wordpress
  namespace: default
  labels:
    app: wordpress
spec:
  containers:
  - name: wordpress
    image: wordpress:6.1-apache
    env:
    - name: WORDPRESS_DB_HOST
      value: mydb
    - name: WORDPRESS_DB_NAME
      value: wpdb
    - name: WORDPRESS_DB_USER
      value: wpuser
    - name: WORDPRESS_DB_PASSWORD
      value: "123456"
    resources:
      requests:
        memory: "128M"
        cpu: "200m"
      limits:
        memory: "512M"
        cpu: "400m"
    # securityContext:
    #   runAsUser: 999
    volumeMounts:
    - mountPath: /var/www/html
      name: nfs-volume-wordpress
  volumes:
  - name: nfs-volume-wordpress
    nfs:
      server: 10.0.0.207
      path: /data/wordpress
      readOnly: false
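Task 2 asks for a livenessProbe and a readinessProbe on both Pods, but none of the manifests in these notes define any probes. Below is a minimal sketch of fields that could be merged into the existing container entries (same indentation level as `resources:`); the probe types, ports and timings are my own assumptions, not settings from the assignment. For MySQL it uses `mysqladmin ping`, which exits 0 whenever mysqld answers (even with "Access denied"), so no password is needed. For WordPress, liveness is a plain TCP check and readiness an HTTP GET on `/`, where any 200-399 status counts as success.

```yaml
# Sketch only: all probe settings are assumptions.
# Fields for the "mysql" container in mysql/02-pod-mydb.yaml
livenessProbe:
  exec:
    # exits 0 as long as mysqld responds, regardless of authentication
    command: ["mysqladmin", "ping", "-h", "127.0.0.1"]
  initialDelaySeconds: 60   # first-time initialization on NFS can be slow
  periodSeconds: 10
  timeoutSeconds: 5
  failureThreshold: 3
readinessProbe:
  tcpSocket:
    port: 3306
  initialDelaySeconds: 10
  periodSeconds: 5
---
# Fields for the "wordpress" container in wordpress/02-pod-wordpress.yaml
livenessProbe:
  tcpSocket:
    port: 80               # Apache is listening
  initialDelaySeconds: 30
  periodSeconds: 10
readinessProbe:
  httpGet:
    path: /                # fails while WordPress cannot reach its database
    port: 80
  initialDelaySeconds: 10
  periodSeconds: 5
```

To observe the effect, watch `kubectl get pods -w` and `kubectl describe pod wordpress`. Deleting mydb should make the WordPress readiness probe fail (WordPress then answers with a database-connection error), so the Pod drops out of the wordpress Service endpoints while its liveness probe keeps passing. If MySQL is restarted during its first start, raise `initialDelaySeconds` on its liveness probe.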

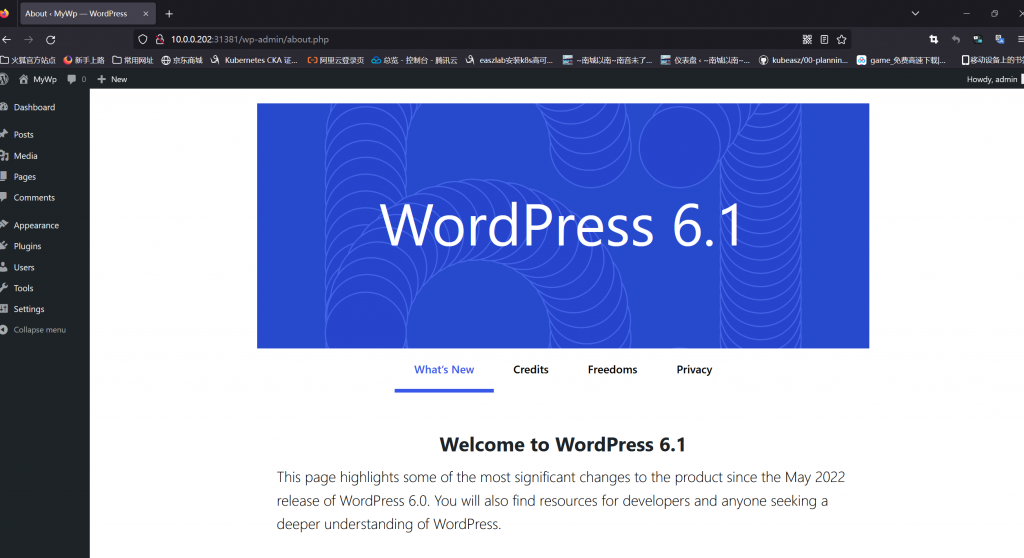

[root@k8s-Master-01 test]#kubectl apply -f wordpress/

Verification

# The Pods are running normally

[root@k8s-Master-01 test]#kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d13h

mydb ClusterIP 10.103.126.185 <none> 3306/TCP 16m

wordpress NodePort 10.106.191.44 <none> 80:31381/TCP 25m

[root@k8s-Master-01 test]#kubectl get pods

NAME READY STATUS RESTARTS AGE

mydb 1/1 Running 0 7m9s

wordpress 1/1 Running 0 5m54s

# Verify on the NFS server

[root@k8s-Ansible data]#tree mysql/ wordpress/ -L 1

mysql/

├── auto.cnf

├── binlog.000001

├── binlog.index

├── ca-key.pem

├── ca.pem

├── client-cert.pem

├── client-key.pem

├── #ib_16384_0.dblwr

├── #ib_16384_1.dblwr

├── ib_buffer_pool

├── ibdata1

├── ibtmp1

├── #innodb_redo

├── #innodb_temp

├── mysql

├── mysql.ibd

├── mysql.sock -> /var/run/mysqld/mysqld.sock

├── performance_schema

├── private_key.pem

├── public_key.pem

├── server-cert.pem

├── server-key.pem

├── sys

├── undo_001

└── undo_002

wordpress/

├── wp-admin

├── wp-includes

└── wp-trackback.php

Add PVC volumes to MySQL and WordPress

Pod: mysql

/var/lib/mysql/, statically provisioned PV

[root@k8s-Master-01 test]#cat mysql/01-service-mydb.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: mydb
  name: mydb
spec:
  ports:
  - name: 3306-3306
    port: 3306
    protocol: TCP
    targetPort: 3306
  selector:
    app: mydb
  type: ClusterIP

[root@k8s-Master-01 test]#cat mysql/02-pod-mydb.yaml

apiVersion: v1
kind: Pod
metadata:
  name: mydb
  namespace: default
  labels:
    app: mydb
spec:
  containers:
  - name: mysql
    image: mysql:8.0
    imagePullPolicy: IfNotPresent
    env:
    - name: MYSQL_ROOT_PASSWORD
      value: "123456"
    - name: MYSQL_DATABASE
      value: wpdb
    - name: MYSQL_USER
      value: wpuser
    - name: MYSQL_PASSWORD
      value: "123456"
    resources:
      requests:
        memory: "128M"
        cpu: "200m"
      limits:
        memory: "512M"
        cpu: "400m"
    # securityContext:
    #   runAsUser: 999
    volumeMounts:
    - mountPath: /var/lib/mysql
      name: nfs-pvc-mydb
  volumes:
  - name: nfs-pvc-mydb
    persistentVolumeClaim:
      claimName: pvc-mydb

[root@k8s-Master-01 test]#cat mysql/03-pv-mydb.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs-mydb
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  mountOptions:
  - hard
  - nfsvers=4.1
  nfs:
    path: "/data/mysql"
    server: 10.0.0.207

[root@k8s-Master-01 test]#cat mysql/04-pvc-mydb.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-mydb
  namespace: default
spec:
  accessModes: ["ReadWriteMany"]
  volumeMode: Filesystem
  resources:
    requests:
      storage: 3Gi
    limits:
      storage: 10Gi
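This claim binds to pv-nfs-mydb only because that PV is the one matching volume (RWX, at least 3Gi) and the cluster has no default StorageClass at this point. A slightly more explicit variant, sketched below as a suggestion rather than the manifest that was applied here, pins the claim to the static PV: `storageClassName: ""` opts the claim out of dynamic provisioning, and `volumeName` names the PV to bind to.

```yaml
# Suggested variant of mysql/04-pvc-mydb.yaml (not the applied manifest)
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-mydb
  namespace: default
spec:
  accessModes: ["ReadWriteMany"]
  volumeMode: Filesystem
  # empty string = no StorageClass, so a default class can never provision this claim
  storageClassName: ""
  # bind only to the static PV from mysql/03-pv-mydb.yaml
  volumeName: pv-nfs-mydb
  resources:
    requests:
      storage: 3Gi
    limits:
      storage: 10Gi
```

This keeps the claim on /data/mysql even if a default StorageClass is added to the cluster later.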

[root@k8s-Master-01 test]#kubectl apply -f mysql/

service/mydb created

pod/mydb created

persistentvolume/pv-nfs-mydb created

persistentvolumeclaim/pvc-mydb created

[root@k8s-Master-01 test]#kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv-nfs-mydb 5Gi RWX Retain Bound default/pvc-mydb 19s

[root@k8s-Master-01 test]#kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-mydb Bound pv-nfs-mydb 5Gi RWX 21s

[root@k8s-Master-01 test]#kubectl get pods

NAME READY STATUS RESTARTS AGE

mydb 1/1 Running 0 25s

Pod: wordpress

/var/www/html/, dynamically provisioned PV

# Set up an NFS server on the Kubernetes cluster

[root@k8s-Master-01 test]#kubectl create namespace nfs

namespace/nfs created

[root@k8s-Master-01 test]#kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/csi-driver-nfs/master/deploy/example/nfs-provisioner/nfs-server.yaml --namespace nfs

service/nfs-server created

deployment.apps/nfs-server created

[root@k8s-Master-01 test]#kubectl get ns

NAME STATUS AGE

default Active 2d13h

demo Active 2d2h

kube-node-lease Active 2d13h

kube-public Active 2d13h

kube-system Active 2d13h

nfs Active 101s

[root@k8s-Master-01 test]#kubectl get svc -n nfs -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

nfs-server ClusterIP 10.102.79.4 <none> 2049/TCP,111/UDP 2m45s app=nfs-server

[root@k8s-Master-01 test]#kubectl get pods -n nfs -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nfs-server-5847b99d99-77bgq 1/1 Running 0 2m49s 192.168.127.5 k8s-node-01 <none> <none>

# Install NFS CSI driver v3.1.0 on the Kubernetes cluster

[root@k8s-Master-01 test]#curl -skSL https://raw.githubusercontent.com/kubernetes-csi/csi-driver-nfs/v3.1.0/deploy/install-driver.sh | bash -s v3.1.0 -

Installing NFS CSI driver, version: v3.1.0 ...

serviceaccount/csi-nfs-controller-sa created

clusterrole.rbac.authorization.k8s.io/nfs-external-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/nfs-csi-provisioner-binding created

csidriver.storage.k8s.io/nfs.csi.k8s.io created

deployment.apps/csi-nfs-controller created

daemonset.apps/csi-nfs-node created

NFS CSI driver installed successfully.

# Check the startup status: the images could not be pulled

[root@k8s-Master-01 test]#kubectl -n kube-system get pod -o wide -l 'app in (csi-nfs-node,csi-nfs-controller)'

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

csi-nfs-controller-65cf7d587-72ggp 1/3 ErrImagePull 0 109s 10.0.0.205 k8s-node-02 <none> <none>

csi-nfs-controller-65cf7d587-bzc6z 0/3 ContainerCreating 0 109s 10.0.0.204 k8s-node-01 <none> <none>

csi-nfs-node-dsgzn 0/3 ContainerCreating 0 62s 10.0.0.206 k8s-node-03 <none> <none>

csi-nfs-node-fn56s 1/3 ErrImagePull 0 62s 10.0.0.205 k8s-node-02 <none> <none>

csi-nfs-node-hmbnr 0/3 ContainerCreating 0 63s 10.0.0.201 k8s-master-01 <none> <none>

csi-nfs-node-nrjns 0/3 ErrImagePull 0 63s 10.0.0.203 k8s-master-03 <none> <none>

csi-nfs-node-zn59h 0/3 ContainerCreating 0 62s 10.0.0.204 k8s-node-01 <none> <none>

csi-nfs-node-zngz6 0/3 ContainerCreating 0 63s 10.0.0.202 k8s-master-02 <none> <none>

# Upload the images manually

[root@k8s-Ansible ansible]#vim nfs-images.yml

---
- name: images
  hosts: all
  tasks:
  - name: copy
    copy:
      src: nfs-csi.tar
      dest: /root/nfs-csi.tar
  - name: shell
    # use the absolute path written by the copy task instead of relying on the working directory
    shell: docker image load -i /root/nfs-csi.tar
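This playbook loads the tarball with `docker image load`, which assumes the kubelet on every node uses Docker as its runtime (for example through cri-dockerd); the CSI Pods starting afterwards suggests that is the case here. On nodes that run containerd directly, images loaded into Docker are not visible to the kubelet. A hedged variant for that situation, not used in these notes, imports the same archive into containerd's `k8s.io` namespace with `ctr`:

```yaml
# Hypothetical variant for containerd-only nodes (not used in these notes)
---
- name: images
  hosts: all
  tasks:
  - name: copy
    copy:
      src: nfs-csi.tar
      dest: /root/nfs-csi.tar
  # the kubelet (via the CRI plugin) only sees images in containerd's "k8s.io" namespace
  - name: import into containerd
    shell: ctr -n k8s.io images import /root/nfs-csi.tar
```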

[root@k8s-Ansible ansible]#ansible-playbook nfs-images.yml

PLAY [images] *****************************************************************************************************

TASK [Gathering Facts] ********************************************************************************************

ok: [10.0.0.204]

ok: [10.0.0.202]

ok: [10.0.0.205]

ok: [10.0.0.201]

ok: [10.0.0.203]

ok: [10.0.0.207]

ok: [10.0.0.206]

TASK [copy] *******************************************************************************************************

changed: [10.0.0.205]

changed: [10.0.0.204]

changed: [10.0.0.201]

changed: [10.0.0.203]

changed: [10.0.0.202]

changed: [10.0.0.206]

changed: [10.0.0.207]

TASK [shell] ******************************************************************************************************

changed: [10.0.0.205]

changed: [10.0.0.201]

changed: [10.0.0.204]

changed: [10.0.0.203]

changed: [10.0.0.202]

changed: [10.0.0.206]

changed: [10.0.0.207]

PLAY RECAP ********************************************************************************************************

10.0.0.201 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.0.0.202 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.0.0.203 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.0.0.204 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.0.0.205 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.0.0.206 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.0.0.207 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

[root@k8s-Master-01 test]#kubectl -n kube-system get pod -o wide -l 'app in (csi-nfs-node,csi-nfs-controller)'

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

csi-nfs-controller-65cf7d587-72ggp 3/3 Running 2 (2m9s ago) 9m9s 10.0.0.205 k8s-node-02 <none> <none>

csi-nfs-controller-65cf7d587-bzc6z 3/3 Running 2 (69s ago) 9m9s 10.0.0.204 k8s-node-01 <none> <none>

csi-nfs-node-dsgzn 3/3 Running 2 (80s ago) 8m22s 10.0.0.206 k8s-node-03 <none> <none>

csi-nfs-node-fn56s 3/3 Running 2 (110s ago) 8m22s 10.0.0.205 k8s-node-02 <none> <none>

csi-nfs-node-hmbnr 3/3 Running 0 8m23s 10.0.0.201 k8s-master-01 <none> <none>

csi-nfs-node-nrjns 3/3 Running 1 (3m52s ago) 8m23s 10.0.0.203 k8s-master-03 <none> <none>

csi-nfs-node-zn59h 3/3 Running 1 (3m21s ago) 8m22s 10.0.0.204 k8s-node-01 <none> <none>

csi-nfs-node-zngz6 3/3 Running 2 (81s ago) 8m23s 10.0.0.202 k8s-master-02 <none> <none>

#Storage Class Usage (Dynamic Provisioning)

[root@k8s-Master-01 sc-pvc]#vim 01-sc.yaml

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-csi
provisioner: nfs.csi.k8s.io
parameters:
  #server: nfs-server.default.svc.cluster.local
  server: nfs-server.nfs.svc.cluster.local
  share: /
#reclaimPolicy: Delete
reclaimPolicy: Retain
volumeBindingMode: Immediate
mountOptions:
  - hard
  - nfsvers=4.1
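The manifest above does not mark nfs-csi as the cluster's default StorageClass, so every PVC that should use it must name it explicitly, as pvc-nfs-sc does below. If PVCs without a `storageClassName` should use it too, the standard `storageclass.kubernetes.io/is-default-class` annotation can be added; the sketch is otherwise identical to 01-sc.yaml. The trade-off: with a default class in place, a newly created PVC that omits `storageClassName` is dynamically provisioned instead of binding to a matching static PV.

```yaml
# Optional variant of 01-sc.yaml: same class, marked as the cluster default
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-csi
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: nfs.csi.k8s.io
parameters:
  server: nfs-server.nfs.svc.cluster.local
  share: /
reclaimPolicy: Retain
volumeBindingMode: Immediate
mountOptions:
  - hard
  - nfsvers=4.1
```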

# Create the PVC

[root@k8s-Master-01 sc-pvc]#vim 02-pvc.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-nfs-sc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
  storageClassName: nfs-csi

[root@k8s-Master-01 sc-pvc]#kubectl apply -f 01-sc.yaml

storageclass.storage.k8s.io/nfs-csi created

[root@k8s-Master-01 sc-pvc]#kubectl apply -f 02-pvc.yaml

persistentvolumeclaim/pvc-nfs-sc created

[root@k8s-Master-01 sc-pvc]#kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-csi nfs.csi.k8s.io Retain Immediate false 90s

[root@k8s-Master-01 sc-pvc]#kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-mydb Bound pv-nfs-mydb 5Gi RWX 36m

pvc-nfs-sc Bound pvc-7fd8c8e0-82fc-4beb-b51b-0343514cf6c4 10Gi RWX nfs-csi 87s

[root@k8s-Master-01 sc-pvc]#kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv-nfs-mydb 5Gi RWX Retain Bound default/pvc-mydb 38m

pvc-7fd8c8e0-82fc-4beb-b51b-0343514cf6c4 10Gi RWX Retain Bound default/pvc-nfs-sc nfs-csi 2m39s

[root@k8s-Master-01 test]#cat wordpress/02-pod-wordpress.yaml

apiVersion: v1
kind: Pod
metadata:
  name: wordpress
  namespace: default
  labels:
    app: wordpress
spec:
  containers:
  - name: wordpress
    image: wordpress:6.1-apache
    env:
    - name: WORDPRESS_DB_HOST
      value: mydb
    - name: WORDPRESS_DB_NAME
      value: wpdb
    - name: WORDPRESS_DB_USER
      value: wpuser
    - name: WORDPRESS_DB_PASSWORD
      value: "123456"
    resources:
      requests:
        memory: "128M"
        cpu: "200m"
      limits:
        memory: "512M"
        cpu: "400m"
    # securityContext:
    #   runAsUser: 999
    volumeMounts:
    - mountPath: /var/www/html
      name: nfs-pvc-wp
  volumes:
  - name: nfs-pvc-wp
    persistentVolumeClaim:
      claimName: pvc-nfs-sc

[root@k8s-Master-01 test]#kubectl apply -f wordpress/

[root@k8s-Master-01 chapter5]#kubectl get pods

NAME READY STATUS RESTARTS AGE

mydb 1/1 Running 0 46m

wordpress 1/1 Running 0 29s

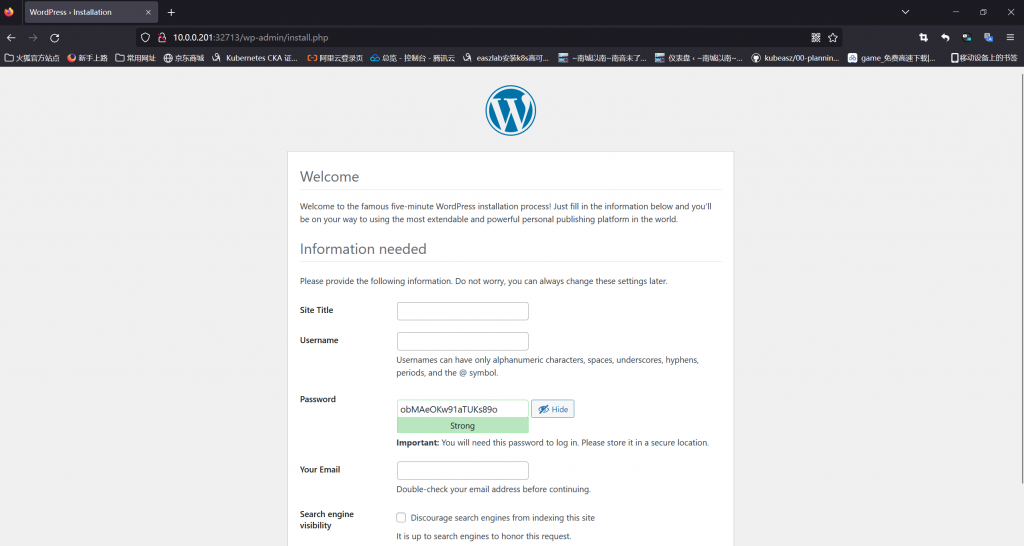

[root@k8s-Master-01 chapter5]#kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d14h

mydb ClusterIP 10.106.130.102 <none> 3306/TCP 54m

wordpress NodePort 10.110.182.248 <none> 80:32713/TCP 41s

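Verification on the NFS server: MySQL's data is written to /data/mysql through the static PV, while /data/wordpress stays empty because the WordPress PVC is now provisioned on the in-cluster nfs-server (namespace nfs), not on this host. The systemd-coredump owner is simply this host's name for UID 999, which the MySQL container appears to run as.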

[root@k8s-Ansible data]#ll mysql/
total 99680
drwxr-xr-x 8 systemd-coredump root 4096 Nov 11 12:09 ./
drwxr-xr-x 5 root root 4096 Nov 11 12:07 ../
-rw-r----- 1 systemd-coredump systemd-coredump 56 Nov 11 12:07 auto.cnf
-rw-r----- 1 systemd-coredump systemd-coredump 3024100 Nov 11 12:09 binlog.000001
-rw-r----- 1 systemd-coredump systemd-coredump 157 Nov 11 12:09 binlog.000002
-rw-r----- 1 systemd-coredump systemd-coredump 32 Nov 11 12:09 binlog.index
-rw------- 1 systemd-coredump systemd-coredump 1680 Nov 11 12:07 ca-key.pem
-rw-r--r-- 1 systemd-coredump systemd-coredump 1112 Nov 11 12:07 ca.pem
-rw-r--r-- 1 systemd-coredump systemd-coredump 1112 Nov 11 12:07 client-cert.pem
-rw------- 1 systemd-coredump systemd-coredump 1676 Nov 11 12:07 client-key.pem
-rw-r----- 1 systemd-coredump systemd-coredump 196608 Nov 11 12:09 '#ib_16384_0.dblwr'
-rw-r----- 1 systemd-coredump systemd-coredump 8585216 Nov 11 12:08 '#ib_16384_1.dblwr'
-rw-r----- 1 systemd-coredump systemd-coredump 5711 Nov 11 12:09 ib_buffer_pool
-rw-r----- 1 systemd-coredump systemd-coredump 12582912 Nov 11 12:09 ibdata1
-rw-r----- 1 systemd-coredump systemd-coredump 12582912 Nov 11 12:10 ibtmp1
drwxr-x--- 2 systemd-coredump systemd-coredump 4096 Nov 11 12:09 '#innodb_redo'/
drwxr-x--- 2 systemd-coredump systemd-coredump 4096 Nov 11 12:09 '#innodb_temp'/
drwxr-x--- 2 systemd-coredump systemd-coredump 4096 Nov 11 12:07 mysql/
-rw-r----- 1 systemd-coredump systemd-coredump 31457280 Nov 11 12:09 mysql.ibd
lrwxrwxrwx 1 systemd-coredump systemd-coredump 27 Nov 11 12:08 mysql.sock -> /var/run/mysqld/mysqld.sock
drwxr-x--- 2 systemd-coredump systemd-coredump 4096 Nov 11 12:07 performance_schema/
-rw------- 1 systemd-coredump systemd-coredump 1676 Nov 11 12:07 private_key.pem
-rw-r--r-- 1 systemd-coredump systemd-coredump 452 Nov 11 12:07 public_key.pem
-rw-r--r-- 1 systemd-coredump systemd-coredump 1112 Nov 11 12:07 server-cert.pem
-rw------- 1 systemd-coredump systemd-coredump 1676 Nov 11 12:07 server-key.pem
drwxr-x--- 2 systemd-coredump systemd-coredump 4096 Nov 11 12:08 sys/
-rw-r----- 1 systemd-coredump systemd-coredump 16777216 Nov 11 12:09 undo_001
-rw-r----- 1 systemd-coredump systemd-coredump 16777216 Nov 11 12:09 undo_002
drwxr-x--- 2 systemd-coredump systemd-coredump 4096 Nov 11 12:09 wpdb/

[root@k8s-Ansible data]#ll wordpress/
total 8
drwxr-xr-x 2 root root 4096 Nov 11 12:07 ./
drwxr-xr-x 5 root root 4096 Nov 11 12:07 ../

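Provide the MySQL and WordPress environment variables by referencing a Secret

Note that root.pass is 654321 here, unlike the earlier 123456. The mysql image only applies the MYSQL_* variables when it initializes an empty data directory, so with the existing data in /data/mysql the previous root password most likely stays in effect.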

[root@k8s-Master-01 test]#kubectl create secret generic mysql-secret --from-literal=root.pass=654321 --from-literal=db.name=wpdb --from-literal=db.user.name=wpuser --from-literal=db.user.pass=123456 --dry-run=client -o yaml > secret.yaml

[root@k8s-Master-01 test]#cat secret.yaml

apiVersion: v1
data:
  db.name: d3BkYg==
  db.user.name: d3B1c2Vy
  db.user.pass: MTIzNDU2
  root.pass: NjU0MzIx
kind: Secret
metadata:
  creationTimestamp: null
  name: mysql-secret
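kubectl writes the values base64-encoded under `data`. When a Secret manifest is written by hand, the `stringData` field accepts plain values and the API server encodes them on write; the sketch below should produce the same Secret as secret.yaml (same keys and values). Keep in mind that base64 is only an encoding, not encryption, so either form must be kept out of shared repositories.

```yaml
# Hand-written equivalent of secret.yaml using stringData
apiVersion: v1
kind: Secret
metadata:
  name: mysql-secret
type: Opaque          # the default type for "kubectl create secret generic"
stringData:           # plain text; stored base64-encoded under .data
  root.pass: "654321"
  db.name: wpdb
  db.user.name: wpuser
  db.user.pass: "123456"
```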

[root@k8s-Master-01 test]#kubectl apply -f secret.yaml

secret/mysql-secret created

[root@k8s-Master-01 test]#kubectl get secret

NAME TYPE DATA AGE

mysql-secret Opaque 4 14s

[root@k8s-Master-01 test]#cat mysql/02-pod-mydb.yaml

apiVersion: v1
kind: Pod
metadata:
  name: mydb
  namespace: default
  labels:
    app: mydb
spec:
  containers:
  - name: mysql
    image: mysql:8.0
    imagePullPolicy: IfNotPresent
    env:
    - name: MYSQL_ROOT_PASSWORD
      valueFrom:
        secretKeyRef:
          name: mysql-secret
          key: root.pass
    - name: MYSQL_DATABASE
      valueFrom:
        secretKeyRef:
          name: mysql-secret
          key: db.name
    - name: MYSQL_USER
      valueFrom:
        secretKeyRef:
          name: mysql-secret
          key: db.user.name
    - name: MYSQL_PASSWORD
      valueFrom:
        secretKeyRef:
          name: mysql-secret
          key: db.user.pass
    resources:
      requests:
        memory: "128M"
        cpu: "200m"
      limits:
        memory: "512M"
        cpu: "400m"
    # securityContext:
    #   runAsUser: 999
    volumeMounts:
    - mountPath: /var/lib/mysql
      name: nfs-pvc-mydb
  volumes:
  - name: nfs-pvc-mydb
    persistentVolumeClaim:
      claimName: pvc-mydb

[root@k8s-Master-01 test]#cat wordpress/02-pod-wordpress.yaml

apiVersion: v1
kind: Pod
metadata:
  name: wordpress
  namespace: default
  labels:
    app: wordpress
spec:
  containers:
  - name: wordpress
    image: wordpress:6.1-apache
    env:
    - name: WORDPRESS_DB_HOST
      value: mydb
    - name: WORDPRESS_DB_NAME
      valueFrom:
        secretKeyRef:
          name: mysql-secret
          key: db.name
    - name: WORDPRESS_DB_USER
      valueFrom:
        secretKeyRef:
          name: mysql-secret
          key: db.user.name
    - name: WORDPRESS_DB_PASSWORD
      valueFrom:
        secretKeyRef:
          name: mysql-secret
          key: db.user.pass
    resources:
      requests:
        memory: "128M"
        cpu: "200m"
      limits:
        memory: "512M"
        cpu: "400m"
    # securityContext:
    #   runAsUser: 999
    volumeMounts:
    - mountPath: /var/www/html
      name: nfs-pvc-wp
  volumes:
  - name: nfs-pvc-wp
    persistentVolumeClaim:
      claimName: pvc-nfs-sc

[root@k8s-Master-01 test]#kubectl apply -f mysql/

service/mydb configured

pod/mydb created

persistentvolume/pv-nfs-mydb unchanged

persistentvolumeclaim/pvc-mydb unchanged

[root@k8s-Master-01 test]#kubectl apply -f wordpress/

service/wordpress configured

pod/wordpress created

[root@k8s-Master-01 test]#kubectl get pods

NAME READY STATUS RESTARTS AGE

mydb 1/1 Running 0 4m12s

wordpress 1/1 Running 0 37s

[root@k8s-Master-01 test]#

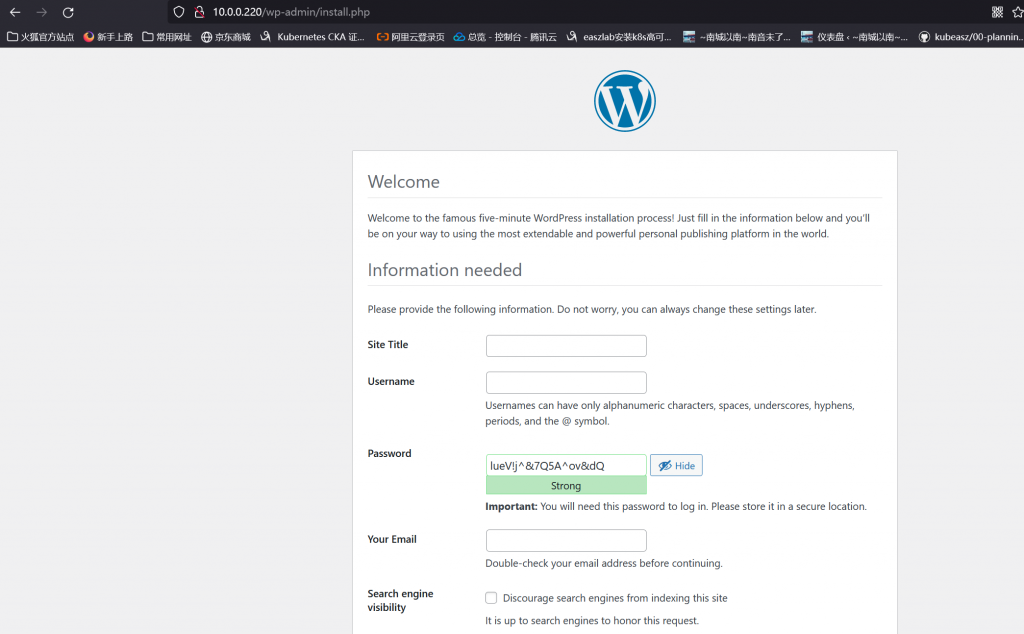

[root@k8s-Master-01 test]#kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d16h

mydb ClusterIP 10.106.130.102 <none> 3306/TCP 174m

wordpress NodePort 10.110.182.248 <none> 80:32713/TCP 121m

Create and run an nginx Pod

The Pod loads its certificate and private key from a Secret volume and its configuration from a ConfigMap volume.

# Generate the Secret manifest. Note that the certificate www.shuhong.com.pem is a bundle built with: cat www.shuhong.com.crt ca.crt > www.shuhong.com.pem

[root@k8s-Master-01 ssl-nginx]#kubectl create secret tls nginx-certs --cert=certs.d/www.shuhong.com.pem --key=certs.d/www.shuhong.com.key --dry-run=client -o yaml > 02-secret-ssl.yaml

# Generate the ConfigMap manifest

[root@k8s-Master-01 ssl-nginx]#kubectl create configmap nginx-sslvhosts-confs --from-file=nginx-ssl-conf.d/ --dry-run=client -o yaml > 03-configmap-nginx.yaml

[root@k8s-Master-01 ssl-nginx]#tree
.
├── 01-ssl-nginx-pod.yaml
├── 02-secret-ssl.yaml
├── 03-configmap-nginx.yaml
├── certs.d
│   ├── ca.crt
│   ├── ca.key
│   ├── ca.srl
│   ├── crts.sh
│   ├── v3.ext
│   ├── www.shuhong.com.crt
│   ├── www.shuhong.com.csr
│   ├── www.shuhong.com.key
│   └── www.shuhong.com.pem
└── nginx-ssl-conf.d
    ├── myserver.conf
    ├── myserver-gzip.cfg
    └── myserver-status.cfg

[root@k8s-Master-01 ssl-nginx]#cat 01-ssl-nginx-pod.yaml

---
apiVersion: v1
kind: Pod
metadata:
  name: ssl-nginx
  namespace: default
spec:
  containers:
  - image: nginx:alpine
    name: ngxserver
    volumeMounts:
    - name: nginxcerts
      mountPath: /etc/nginx/certs/
      readOnly: true
    - name: nginxconf
      mountPath: /etc/nginx/conf.d/
      readOnly: true
  volumes:
  - name: nginxcerts
    secret:
      secretName: nginx-certs
  - name: nginxconf
    configMap:
      name: nginx-sslvhosts-confs
      optional: false
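The ssl-nginx Pod has no labels and no Service, so it is reachable only through its Pod IP. A hedged sketch of exposing it the same way as wordpress: the label `app: ssl-nginx` and the Service name `ssl-nginx` are hypothetical names chosen for illustration. The Pod must carry the label for the selector to match, either by adding it under `metadata.labels` in 01-ssl-nginx-pod.yaml or with `kubectl label pod ssl-nginx app=ssl-nginx` on the running Pod.

```yaml
# Hypothetical Service for the ssl-nginx Pod (requires the Pod label app=ssl-nginx)
apiVersion: v1
kind: Service
metadata:
  name: ssl-nginx
  namespace: default
spec:
  type: NodePort
  selector:
    app: ssl-nginx
  ports:
  - name: http          # the port-80 server block redirects to HTTPS
    port: 80
    targetPort: 80
  - name: https
    port: 443
    targetPort: 443
```

Because the redirect uses `$host` without a port, a browser following it through a NodePort would land on port 443 of the node rather than on the allocated NodePort; testing the HTTPS NodePort directly avoids that.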

[root@k8s-Master-01 ssl-nginx]#cat 02-secret-ssl.yaml

apiVersion: v1

data:

tls.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUdDVENDQS9HZ0F3SUJBZ0lVREpJM3Axd0k2L2tOYzNzOVRPazNYSmFZSEI4d0RRWUpLb1pJaHZjTkFRRU4KQlFBd2NERUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdNQjBKbGFXcHBibWN4RURBT0JnTlZCQWNNQjBKbAphV3BwYm1jeEVEQU9CZ05WQkFvTUIyVjRZVzF3YkdVeEVUQVBCZ05WQkFzTUNGQmxjbk52Ym1Gc01SZ3dGZ1lEClZRUUREQTkzZDNjdWMyaDFhRzl1Wnk1amIyMHdIaGNOTWpJeE1URXhNRFl6TlRBNVdoY05Nekl4TVRBNE1EWXoKTlRBNVdqQndNUXN3Q1FZRFZRUUdFd0pEVGpFUU1BNEdBMVVFQ0F3SFFtVnBhbWx1WnpFUU1BNEdBMVVFQnd3SApRbVZwYW1sdVp6RVFNQTRHQTFVRUNnd0haWGhoYlhCc1pURVJNQThHQTFVRUN3d0lVR1Z5YzI5dVlXd3hHREFXCkJnTlZCQU1NRDNkM2R5NXphSFZvYjI1bkxtTnZiVENDQWlJd0RRWUpLb1pJaHZjTkFRRUJCUUFEZ2dJUEFEQ0MKQWdvQ2dnSUJBTTV0Z0I4KzZDM29YSDNQL3BQaDErRlRmbDdITDMxTmFWNC9rdVg0VzAxNFJ1YkcxTFUxVmFoZwpRK3NadHByeFRKUjliMlZWQm8vQ1lpUDRxR3MxNDh2dDJuZ0s4Z1RyZFZ3TnpIaEtPWC9tOUc0bFVRcFRCODk3CnNCVk1xWGZWZ2p4SENwOXJIbFhEZzYxRkxLa2FWVWgxNDloZXA3VzV3RFJxMk9iREZSNjltNzdmRXdvUStZZk8KeDI5QjludGZPZklpYzJBZzJSUUlwQldOcCtISWE3RlBwSUNOL1BuWTFqWDJCT09FSi9UT2oxSElMdU1CV0VMagpjR3RKclYvaUhPLzQ4aE1rOTZrMkp4RWovZnFPeGJrMjc1eENOYUxwRGNWeEtXRzZkcjd5Ry9WaFhSdFduMkp5CnliR0RieU8vM2QyNzRrTklKL3hsM0RnUXI1cHd0Zjg5N3RQNmJrd2lDQnZycnBBVzd4RnRJQXMxSnQ0WjRsSE4Ka2pPKy9YRGV2YjBpNTNkUXNTT1p1MWJNRlNQYVdKT0pNazg3a0pyTyt5aFAvUEdPdWhEU0hERHZ6YWxnVTFqYQpDaWVGKzJqQkg4UGRmRFJSMXAxcFZXZkNlZ0xTdjVoZFh2NXFCMkxGaytNUVFVUFE3cXNCR0Q2dEtlQ3k4Z2pOClBEOXVkenBwQ0VuUEcvTjhrWGRPQlVLZGJJajRhK0pJUy9WOEN0SWFGb3dwd3pma0s2Vlp6d2hYbWRzZ2ovWU4KUlJiQnBhQTdEZjd6K3UxNnlFa044RTZONko3blZMcVBSVUhEOWN5N1NsSUVhR2c2aXFxVFJ4TGxSTnE4UUhwTgpnSjdPZjhKTC9oNFhMZDhDNExlYzBYdHh0QjB0YkFrODE4WTM2TVlQVEE3VE9oUnRYaTluQWdNQkFBR2pnWm93CmdaY3dId1lEVlIwakJCZ3dGb0FVcGhPMlg2ODBSN21KREZVQW5ZUVA4TENMRkR3d0NRWURWUjBUQkFJd0FEQUwKQmdOVkhROEVCQU1DQlBBd0V3WURWUjBsQkF3d0NnWUlLd1lCQlFVSEF3RXdSd1lEVlIwUkJFQXdQb0lQZDNkMwpMbk5vZFdodmJtY3VZMjl0Z2hab1lYSmliM0l1ZDNkM0xuTm9kV2h2Ym1jdVkyOXRnaE4zZDNjdWQzZDNMbk5vCmRXaHZibWN1WTI5dE1BMEdDU3FHU0liM0RRRUJEUVVBQTRJQ0FRREluQzQ0NlR1N0YvM2IwOXNPV0NXVUVmMVoKRVc2NG1XMGcrbkpCRktHSE5DYWtQSVRyeXZnKzZNTGdYUnd
aK1pwR0ppc3RGVUJCeDZZM2ZCbjNva01tOVM5MAovYVpwNlpaam0xSFNmQ0JWZlcyaExCR1RLUkNiOGNhL3ZWOG15LzRNSGNzMjJzQUpjYUFVWWZmeEdJOHFGVDlnCm40ZUdlQzNKMUIvSWtPSDY2ckFvZUF5TTJ0RmN2MFJhakpHWGVaZG01T1IydkZieG83SG1QblBNa0Q0YjEzTysKbnppVW1wY2lZenl2Um05dys3VWhZb2pNTHN4Y1JYVTVVZ0RSVXZyM05nT3RDaVpNa0VyK2pyamx4M21BM3RvLworTmFkUFRucFVMOS9rNFhDYzBKVVNJekgrZHlGcU00TTAvZGFSVHlyWG1QNGR4aGNoOXVqeEdTSGx4WW10ZjEvClRqVXRIaVBJbTBrdk9sTG5xQUcvZ0toRk53N25WQ2pMTFBhM3dUWjhKVVkrQ25aeWJ3NWFrb0puZWdHbjRoM2oKdDdWaDVHSXhmV0NJdDFYMGtqajZaQ1MwZzNEQlRDK0ZLaWU1V0RGdDRwSUJRUzlDeGZlelRacEN2NDZCcFRVMQo3UjhaMk03RHUvaUhaZnZNUk4rZStHaCtnMjZZckp0eTFWWitWeUorRnQ4anJMakdXK3BBM0tUZDFqajhDbjFpCk85YWltTUFGSDVBYTFlSkZyL2JEeDNXYTdQTU5JaGlENHFYUXR5Q2lOT0JnZDNGWjVHYWNlVGE4dkJZaGhuS0sKR280RFZLaWszMGZCcmFPWTRqZ1BDaEVYZjlPV095Qjl0OVZldHdBZDFKek1zT0lpeWZlK0oxY2ZvS2VxWk9veQpzQ1U4cndaVWdNNEx3Q2JaNGc9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCi0tLS0tQkVHSU4gQ0VSVElGSUNBVEUtLS0tLQpNSUlGd1RDQ0E2bWdBd0lCQWdJVVZiVjZRdlg4Y3BqOUdPZytpNWxuUGRZWDBLTXdEUVlKS29aSWh2Y05BUUVOCkJRQXdjREVMTUFrR0ExVUVCaE1DUTA0eEVEQU9CZ05WQkFnTUIwSmxhV3BwYm1jeEVEQU9CZ05WQkFjTUIwSmwKYVdwcGJtY3hFREFPQmdOVkJBb01CMlY0WVcxd2JHVXhFVEFQQmdOVkJBc01DRkJsY25OdmJtRnNNUmd3RmdZRApWUVFEREE5M2QzY3VjMmgxYUc5dVp5NWpiMjB3SGhjTk1qSXhNVEV4TURZek5UQTNXaGNOTXpJeE1UQTRNRFl6Ck5UQTNXakJ3TVFzd0NRWURWUVFHRXdKRFRqRVFNQTRHQTFVRUNBd0hRbVZwYW1sdVp6RVFNQTRHQTFVRUJ3d0gKUW1WcGFtbHVaekVRTUE0R0ExVUVDZ3dIWlhoaGJYQnNaVEVSTUE4R0ExVUVDd3dJVUdWeWMyOXVZV3d4R0RBVwpCZ05WQkFNTUQzZDNkeTV6YUhWb2IyNW5MbU52YlRDQ0FpSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnSVBBRENDCkFnb0NnZ0lCQU9nNnhTblhiUDNEYUltNENjZkNIdFN0YUhPRFhtOUpyZG4wdjBpcC82dTBYcFViZ0tJZlZ5VUQKMUQvU0RxRzQ0S3ZOamxxVkpTaUR2QTB0Y3UwWWhIOTJhL2xyaExSNXBabnBMemxKREt5azM3OXlGMU8yNWpkdwprV0JzR3RXN2pUVmYyNldhMUdONkNzOVlkdFRCT05OSzJ2cVJYaVowemFncCtDc2wvVkJNTldXMGYzSUtrWnV0Ck81T1I0YVAyUmJzQUVIOVNVZ0hUNDJkQXIySENWVWxGOW9NSmhlenJ5RGEycjAwWWp1dDBoYmdlYXFLVDlKS3YKa1RKU1hnUVhaTVZDMDgyN2k1dkFIdk5CaHVOekpFQ2x4b000VUlUL0kwMUhjWWx6ejIvNWppeGpRK29uSEVNTApXQ0JmcGxxK1QzcURuRVIwd01pRmc5bURJcW9WZXJ
md0pQRVlqOEtFQTFiQisxck1kRWI2RjZHOFN4UGVBZGJHCkNLUGRIRSttZU8zYk5EZDJYZDFYbmVrK2Y2UHVBbzhYWUlvWktJWk9Eai8rdGdWNUdMUWVIODJLd1lFQy9aaUwKWkdmUUJjVjNZaUkvek1VVVY2SXVIN21jUjArOXZXdm9uVnpPcmZFYWNYNUpKb3ZvWnIvUGx2UUR6WmRWZWZsYQpXbFJ2QTZ3ankxQlVoSFBoR0xqb0xNRUNtdVNYOWU2dU5YN0ZFem1oajJTRkwwOE8rbWxkak5seEZJeXpOZEVtCkI4S1lqbFEzZVpoZFFtRjQ3aEVUVG9aTjlGRlV1M1dzbjRySzZOU3IwbXFsTmhCYXppN1VDUUFSdFo3T09weHgKMUttcThrRENJcXdBN1RMbG1zWkFTaEhadHowV0Fkc3RwaSsvL1pEWjFndm9zYldnZ2NSTEFnTUJBQUdqVXpCUgpNQjBHQTFVZERnUVdCQlNtRTdaZnJ6Ukh1WWtNVlFDZGhBL3dzSXNVUERBZkJnTlZIU01FR0RBV2dCU21FN1pmCnJ6Ukh1WWtNVlFDZGhBL3dzSXNVUERBUEJnTlZIUk1CQWY4RUJUQURBUUgvTUEwR0NTcUdTSWIzRFFFQkRRVUEKQTRJQ0FRQjVEY21yTFBINDU3QU5rWSt0Rmp5UUQ5Y0NJb044aFN5NHJ4WDZhSlIyU0FIME15UmROWlJ4Z0c5NQp5aVF2eFZsZkFEeDZMRFRTejUrVW1YVTUzeDczQWZoNnUrNjdhbmtnUXVTa1BGRXlxUmZodjMwWlJhU3ZFUjFuCjhXSDJTVGJaVzJ5Z3NrVUFyYkNGSkNySjM3Q0xqK0dLRHI2N1V2QzNhb2Y2T0dXN2IyYUdERy83MWZWNkNmQ3AKczlMMFNBL21RMmRlbFpDSFV2aU5mZGo2b2JLMm9USlJjd0s3U0M3Mzhhc0RnS0QxV200WUlrL1NHbHU3VEVZMApqRW1nSEdtRW1jamlVRzlpVGk5MG52dFVHRkVMZUs0SGJtRGFFM0VQakxXWEVqZ1lBdzNnYm53VGlwbmpKSFg1Cmt5eHozakNIKzlURXVQMXQ1OGpXOEJyTmExdGo1aHNzNHpVenNFZjNaNnlLVmdqK3ZDVWlYV1ZqSnRqRmNsTEQKdlYzTmlXTVFvMXFyL2xEMTZNT1cxV3dJQUYxYmc2VUNjV0dhOHFyTFY4UVM4djBYT1VLVFBOZ0xPQ1Y3ZFA1cwpLOTc5Z20wclFEUzB4TGZWUEo1aUlhYXNRTlRyMnd4M2NFeDhGUE1rWkZmUTdqZVh6YmVBQmt3WDdINjdEeVl6CkNGVndFdnQxQXVkZy9waUZLbUcrdnhEUFpHVHY5dU9KWkFrL2N3SVlwWjZ1MmFFaTAyRllPbFNzWTYzNlZKRE0KL25CL2t6akZOQUJERmNnOGVnYTZlS053RVVzVkNJdGJtU05xRGFBOTczMmlOcnJNMUcrWUZUNDFiTmF3N0syZQpUME9KM0hpNnU4ZERsMGQ2S21DWlFXTnlKR0JGbEN0TTNUbk5qcUlOeXpBWEU4d2oyZz09Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

tls.key: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlKS2dJQkFBS0NBZ0VBem0yQUh6N29MZWhjZmMvK2srSFg0Vk4rWHNjdmZVMXBYaitTNWZoYlRYaEc1c2JVCnRUVlZxR0JENnhtMm12Rk1sSDF2WlZVR2o4SmlJL2lvYXpYankrM2FlQXJ5Qk90MVhBM01lRW81ZitiMGJpVlIKQ2xNSHozdXdGVXlwZDlXQ1BFY0tuMnNlVmNPRHJVVXNxUnBWU0hYajJGNm50Ym5BTkdyWTVzTVZIcjJidnQ4VApDaEQ1aDg3SGIwSDJlMTg1OGlKellDRFpGQWlrRlkybjRjaHJzVStrZ0kzOCtkaldOZllFNDRRbjlNNlBVY2d1CjR3RllRdU53YTBtdFgrSWM3L2p5RXlUM3FUWW5FU1A5K283RnVUYnZuRUkxb3VrTnhYRXBZYnAydnZJYjlXRmQKRzFhZlluTEpzWU52STcvZDNidmlRMGduL0dYY09CQ3ZtbkMxL3ozdTAvcHVUQ0lJRyt1dWtCYnZFVzBnQ3pVbQozaG5pVWMyU003NzljTjY5dlNMbmQxQ3hJNW03VnN3Vkk5cFlrNGt5VHp1UW1zNzdLRS84OFk2NkVOSWNNTy9OCnFXQlRXTm9LSjRYN2FNRWZ3OTE4TkZIV25XbFZaOEo2QXRLL21GMWUvbW9IWXNXVDR4QkJROUR1cXdFWVBxMHAKNExMeUNNMDhQMjUzT21rSVNjOGI4M3lSZDA0RlFwMXNpUGhyNGtoTDlYd0swaG9XakNuRE4rUXJwVm5QQ0ZlWgoyeUNQOWcxRkZzR2xvRHNOL3ZQNjdYcklTUTN3VG8zb251ZFV1bzlGUWNQMXpMdEtVZ1JvYURxS3FwTkhFdVZFCjJyeEFlazJBbnM1L3drditIaGN0M3dMZ3Q1elJlM0cwSFMxc0NUelh4amZveGc5TUR0TTZGRzFlTDJjQ0F3RUEKQVFLQ0FnQndVWDVVQWZ0ODl5QlVTSGJoYWhIM2hXR09HbDBKbGJST1Z0TU1GQzFCb3I4WlZIaHFQS0hsNHJNeAoyYVRVKzVSS2UxSEFWaG9pNElaYndqR0pYQ0lkVk1iNWFDTTFjQlJFU1RISEJjUHhodTNhZksxeXE2amxTUXlQCkdrNWZhS25iT0dCY1M0R084cm5UN242VmFFR2RFcUF0bTVzdk11bVUyOG8zRFZDUmtHT000SDNRalZub2ZpZGYKcndsNUtXQXpFbkdxalZUd0pKOTdKcitCQjNjcFhBZEs5M2I5VHZHSEhOeWVHc3RPMVpGLzB5ZEgxdlI2T0p4egprL3drM3JnV0RtTlE3VjFnRVpvQ0pvNUw1YUZKM00xVlBXVkh4Zno3UUU1ZTRZRTQ5aTBtUDVyVWhEWm03OFEwCnRTb2t6b0hlNHhzQ3R1RWk0UjJJMS9Oa1dnMTc0SDIrUktNcDh3NDIwN2RXQkJVSGwrSTJsL0M5REw2YnQyR3MKSlFTY0JnejR6a2wrbWZieTZJMFQrbUdpcVNXc3JGaW5VSXowM2RodHlLZ3pvQzRBRWNvZ21mU3Y4ZzRCeUNaMApZeVFNSmlZRnZ1NE9Dd2RmTUtMa1pDc05BeDUzUDBxWVpkVjJoeDNoV3hwM0FlMTVQKzZPOHFUTDhDWTliZitPClhOUkRWN0VEMDYrQWpBOVhiYXN3bjRuZXpIbDNKSFFqYVpjWmdqcVV1Nm5DRFd1UFBpRnhRd3IrakwxUGtCa0gKTittZXRoWHk2K1dQMFR0ZUxkNzhaMFJ0SUFycnF3cVF4V2JZU2V1R1JzeFk2OGdncGM0c3dFLzkvZWZWTGhQagpZZGNzM3lnOWZwL2Y0RkxzMTJnMzVQMG1OUVhPRVpybmY2ZGpoMjErUm1TRUdNaUpRUUtDQVFFQThNSXZyM0JlCkZBa3lWKy9BNmxBS2ovbldDRVJ6RXRHQ0E2Zk54dE1
TN0svS3Nabmwyc3pLUWNXTHJxZnhQKzVRNTZjVDVPRk8KVTZoR0hsYUlmTmVRWWVWc3hCUG1kZXVGZUZOaG1GTUJ2Q25nbnpzb3o4YzhNeGhOQmpWdk9HdDhhUDlrTkVEMQpxOGhSN0YyR2dOTU5GdjdvRm50M3pQdFlYWmVKcHJIN3pEcEQrRnl6NHRsQ1B3blJKVzVFdHZqcm4rdlhNVEFQCkFKV0xnazNTR2Y1NTZmaHA1YlVjVFJBazJ0MU5FRDVUU2xGWFMzemZrTy9vanQ2ZFRZYUR3T0ttUDhMQ3NPZ0IKU1luTjQ4Tkcra0VYUkRsL1N4YUc2NEVSZi9CYVduYi81UFZLbXNyZFJ1cEh4ZEZycWVMYlJLc0c1ekJPeURCVgphVlZPMjY1UjE5TlRCd0tDQVFFQTIzN3dDMUUzdnlkUFpFMzZJWkNtUGFVbE53NExyYWI3SnBFRHU0M1RmQUlqCmNDeDVLY0RvR0wrRno4bUhKeldkL0M5YzlQbWZBYzdYUHNReHpXUTcwbWE0VDNDTXJBLy9ZM2JHVWFVNFVWM1gKakhxb1UvZVoxelZMR2NYTW9CVXdWbnY5cHUwVlJrWW5jd3c4Y0JaZUhqRW05OVMweGxUSUhISGJtclc2c2xicwp2aW5WeHJ2QUQza2VZd1Q5dER2bzNnSXp2dHV3QURZVTQrcmtuVndxcEdmMURyS28zOVdCUVNsVHluUGQzOXFtCk1kcWxPcWJvN3dhMDFRR20zN2Qzc1BxWTNLNmFGUnRkeThFMW5ET0VvcHJXQTBOMERydkJMYkxVM3NpYjRJRVgKMXRWd01NM2IvYVVkVGZPZldlNjlTa1N1WTNXWUVCMDBEZGF0aEx0SW9RS0NBUUVBa0p2U2tJbnB1QmNlQ2Z1VAo0Q2xiYnNjZGE3SFJmSWdpazVlQzNkMkNESEE2U3hxcEdSYlFsVmpXWVgyMlJqUWFuRW1haFd0ZTVKaTZKUmJNCnZFK3VCVjhNU1dtNmp6Rjc1WjRQakxLdTVCb3pOUEVQdmwxcEp6ZDliREZFTUpzL0NzSDdxZmNxbUplbHZWY2YKcHRrZGo2WmtPTHpJWkhMRHpOTnNkcGVKS2s0RTdYU2hCNngvUWVYZm5aL3gzZ1Q5WWYwQ01DVXhuYVExTzNzSwpxMXBTVjlwQm9SdDdlRDR1Sk5ldnBnWUplU1lLVE9rZ1Q2b0tBV1p0RFZleVkzUy9icVRJMUFGR1pLbEU1WDB4CmNMY1FCb2FTa3NOaEhxdFRtNGorZkQvbHk5d1poNGc2Q0pKSHNlWHJ5UXJkc1EwWkJGdmJ0aHB4OHVhdWl2elYKWTlFbW1RS0NBUUVBaS9rQ0dTVjgrR0NZSjIzMm9lcjlxSGdsS0Z2RHBNVEVpbzZWbzhoSTRsNzJ2SFVQKzBseQplVDNCbG9WOHM4dGthVXJHNjg0MzBVNVhRMGFZUDlPNHRtOGRBRVBVNFhEK095Nm1QN0N1SG0xS3BPSWZjQlNJCnZZM1Z5NlN3M2pGRTl4SHc2cjlyL3JtRU5NREwxZXJkc0VGR0NXdFNzTnVtRlVXaWRxR0hZbTArWWZLSnlrYzIKcm1kZHNtV2ZhSTEvN2Z2WGhkSFJCZ0YzQnZWblB0Wmt0eDA0VUZ3c2h6ay9TUStTeUp0bEZYajQzUGdDd0VscQpaK3VONi93MnI1bnZNU1JOMFFWamF5eGRmeTlDQWM5MHVNRW0wMFB6d2VXSHhwMnhWRFQzK280NFpwOE1BWU4xCjArVzByMTQ1ODM3a3BYVHhCS29jQThLcnpGdG5vaXBRb1FLQ0FRRUFtRGxoTHN1bXBpLzNPd3pRMStJcllUQ1YKSXUzNENlVEZRTnFOMXRPeGhweDMyQVh0ZnNoZ2kxNGgzeko4RDBSdFovc1lkbkxNNGxlTTR5V1kvWEp6dXRQOApPdFJkY0hYSDhFUFZWbFdtc2xBMmNKblQxSktjUk9EWW9PTnF
UU2ZGeUpTSnFMSDIxNGJ4Szc0VStNWnVmNTBHCkFJbmduMU5wVjNxbEp5VWYyRnZYOExNV1p4eVNHSElBd1Vianl0NUJva0hOUTZ2R0s3RzVLV29ScWo3VUNRbTcKdVlDMnhHS0dicTJ1SnVHd09OS1E4czVKRU56Q0ovR1R5cG9veFNPQ0VaYmxmeWhFUzVIcllXYktReVVJbXdvdAprckVsWEtkaEMxVm1tT3ZCZUFET1NyWjZ6MS96QTd5YUM1TlJLRjRhZWZtaTFqakxrU0NJWGU4bGY3b2V6QT09Ci0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

kind: Secret
metadata:
  creationTimestamp: null
  name: nginx-certs
type: kubernetes.io/tls

[root@k8s-Master-01 ssl-nginx]#cat 03-configmap-nginx.yaml

apiVersion: v1
data:
  myserver-gzip.cfg: |
    gzip on;
    gzip_comp_level 5;
    gzip_proxied expired no-cache no-store private auth;
    gzip_types text/plain text/css application/xml text/javascript;
  myserver-status.cfg: |
    location /nginx-status {
        stub_status on;
        access_log off;
    }
  myserver.conf: |
    server {
        listen 443 ssl;
        server_name www.shuhong.com;

        ssl_certificate /etc/nginx/certs/tls.crt;
        ssl_certificate_key /etc/nginx/certs/tls.key;

        ssl_session_timeout 5m;

        ssl_protocols TLSv1 TLSv1.1 TLSv1.2;

        ssl_ciphers ECDHE-RSA-AES128-GCM-SHA256:HIGH:!aNULL:!MD5:!RC4:!DHE;
        ssl_prefer_server_ciphers on;

        include /etc/nginx/conf.d/myserver-*.cfg;

        location / {
            root /usr/share/nginx/html;
        }
    }

    server {
        listen 80;
        server_name www.shuhong.com;
        return 301 https://$host$request_uri;
    }
kind: ConfigMap
metadata:
  creationTimestamp: null
  name: nginx-sslvhosts-confs
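The Pod manifest 01-ssl-nginx-pod.yaml that is applied next is not reproduced in these notes. The following is a minimal sketch of how such a Pod could consume the Secret and the ConfigMap; the container name, volume names and image tag are assumptions, and only the object names and mount paths come from the files above. Mounting the ConfigMap over /etc/nginx/conf.d/ replaces the image's default.conf; the stock nginx.conf only includes conf.d/*.conf, so nginx loads myserver.conf, which in turn pulls in the myserver-*.cfg snippets through its own include line.

```yaml
# Hypothetical sketch of 01-ssl-nginx-pod.yaml; the original file is not shown.
apiVersion: v1
kind: Pod
metadata:
  name: ssl-nginx
spec:
  containers:
    - name: nginx                        # assumed container name
      image: nginx:alpine                # assumed image tag
      volumeMounts:
        - name: nginxcerts
          mountPath: /etc/nginx/certs/   # Secret keys appear here as tls.crt and tls.key
          readOnly: true
        - name: nginxconf
          mountPath: /etc/nginx/conf.d/  # hides the image's default.conf
          readOnly: true
  volumes:
    - name: nginxcerts
      secret:
        secretName: nginx-certs          # the kubernetes.io/tls Secret above
    - name: nginxconf
      configMap:
        name: nginx-sslvhosts-confs      # the ConfigMap above
        optional: false
```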

[root@k8s-Master-01 ssl-nginx]#kubectl apply -f 03-configmap-nginx.yaml

configmap/nginx-sslvhosts-confs created

[root@k8s-Master-01 ssl-nginx]#kubectl apply -f 02-secret-ssl.yaml

secret/nginx-certs created

[root@k8s-Master-01 ssl-nginx]#kubectl apply -f 01-ssl-nginx-pod.yaml

pod/ssl-nginx created

[root@k8s-Master-01 ssl-nginx]#kubectl get pods

NAME READY STATUS RESTARTS AGE

mydb 1/1 Running 0 37m

ssl-nginx 1/1 Running 0 8s

wordpress 1/1 Running 0 27m

[root@k8s-Master-01 ssl-nginx]#kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mydb 1/1 Running 0 37m 192.168.204.5 k8s-node-03 <none> <none>

ssl-nginx 1/1 Running 0 17s 192.168.127.9 k8s-node-01 <none> <none>

wordpress 1/1 Running 0 28m 192.168.8.6 k8s-node-02 <none> <none>

Verify the result

[root@k8s-Master-01 ssl-nginx]#curl -I 192.168.127.9

HTTP/1.1 301 Moved Permanently

Server: nginx/1.23.2

Date: Fri, 11 Nov 2022 06:53:04 GMT

Content-Type: text/html

Content-Length: 169

Connection: keep-alive

Location: https://192.168.127.9/

[root@k8s-Master-01 ssl-nginx]#curl https://192.168.127.9 -k

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@k8s-Master-01 ssl-nginx]#openssl s_client -connect 192.168.127.9:443

CONNECTED(00000003)

Can't use SSL_get_servername

depth=1 C = CN, ST = Beijing, L = Beijing, O = example, OU = Personal, CN = www.shuhong.com

verify error:num=19:self signed certificate in certificate chain

verify return:1

depth=1 C = CN, ST = Beijing, L = Beijing, O = example, OU = Personal, CN = www.shuhong.com

verify return:1

depth=0 C = CN, ST = Beijing, L = Beijing, O = example, OU = Personal, CN = www.shuhong.com

verify return:1

---

Certificate chain

0 s:C = CN, ST = Beijing, L = Beijing, O = example, OU = Personal, CN = www.shuhong.com

i:C = CN, ST = Beijing, L = Beijing, O = example, OU = Personal, CN = www.shuhong.com

1 s:C = CN, ST = Beijing, L = Beijing, O = example, OU = Personal, CN = www.shuhong.com

i:C = CN, ST = Beijing, L = Beijing, O = example, OU = Personal, CN = www.shuhong.com

---

Server certificate

-----BEGIN CERTIFICATE-----

MIIGCTCCA/GgAwIBAgIUDJI3p1wI6/kNc3s9TOk3XJaYHB8wDQYJKoZIhvcNAQEN

BQAwcDELMAkGA1UEBhMCQ04xEDAOBgNVBAgMB0JlaWppbmcxEDAOBgNVBAcMB0Jl

aWppbmcxEDAOBgNVBAoMB2V4YW1wbGUxETAPBgNVBAsMCFBlcnNvbmFsMRgwFgYD

VQQDDA93d3cuc2h1aG9uZy5jb20wHhcNMjIxMTExMDYzNTA5WhcNMzIxMTA4MDYz

NTA5WjBwMQswCQYDVQQGEwJDTjEQMA4GA1UECAwHQmVpamluZzEQMA4GA1UEBwwH

QmVpamluZzEQMA4GA1UECgwHZXhhbXBsZTERMA8GA1UECwwIUGVyc29uYWwxGDAW

BgNVBAMMD3d3dy5zaHVob25nLmNvbTCCAiIwDQYJKoZIhvcNAQEBBQADggIPADCC

AgoCggIBAM5tgB8+6C3oXH3P/pPh1+FTfl7HL31NaV4/kuX4W014RubG1LU1Vahg

Q+sZtprxTJR9b2VVBo/CYiP4qGs148vt2ngK8gTrdVwNzHhKOX/m9G4lUQpTB897

sBVMqXfVgjxHCp9rHlXDg61FLKkaVUh149hep7W5wDRq2ObDFR69m77fEwoQ+YfO

x29B9ntfOfIic2Ag2RQIpBWNp+HIa7FPpICN/PnY1jX2BOOEJ/TOj1HILuMBWELj

cGtJrV/iHO/48hMk96k2JxEj/fqOxbk275xCNaLpDcVxKWG6dr7yG/VhXRtWn2Jy

ybGDbyO/3d274kNIJ/xl3DgQr5pwtf897tP6bkwiCBvrrpAW7xFtIAs1Jt4Z4lHN

kjO+/XDevb0i53dQsSOZu1bMFSPaWJOJMk87kJrO+yhP/PGOuhDSHDDvzalgU1ja

CieF+2jBH8PdfDRR1p1pVWfCegLSv5hdXv5qB2LFk+MQQUPQ7qsBGD6tKeCy8gjN

PD9udzppCEnPG/N8kXdOBUKdbIj4a+JIS/V8CtIaFowpwzfkK6VZzwhXmdsgj/YN

RRbBpaA7Df7z+u16yEkN8E6N6J7nVLqPRUHD9cy7SlIEaGg6iqqTRxLlRNq8QHpN

gJ7Of8JL/h4XLd8C4Lec0XtxtB0tbAk818Y36MYPTA7TOhRtXi9nAgMBAAGjgZow

gZcwHwYDVR0jBBgwFoAUphO2X680R7mJDFUAnYQP8LCLFDwwCQYDVR0TBAIwADAL

BgNVHQ8EBAMCBPAwEwYDVR0lBAwwCgYIKwYBBQUHAwEwRwYDVR0RBEAwPoIPd3d3

LnNodWhvbmcuY29tghZoYXJib3Iud3d3LnNodWhvbmcuY29tghN3d3cud3d3LnNo

dWhvbmcuY29tMA0GCSqGSIb3DQEBDQUAA4ICAQDInC446Tu7F/3b09sOWCWUEf1Z

EW64mW0g+nJBFKGHNCakPITryvg+6MLgXRwZ+ZpGJistFUBBx6Y3fBn3okMm9S90

/aZp6ZZjm1HSfCBVfW2hLBGTKRCb8ca/vV8my/4MHcs22sAJcaAUYffxGI8qFT9g

n4eGeC3J1B/IkOH66rAoeAyM2tFcv0RajJGXeZdm5OR2vFbxo7HmPnPMkD4b13O+

nziUmpciYzyvRm9w+7UhYojMLsxcRXU5UgDRUvr3NgOtCiZMkEr+jrjlx3mA3to/

+NadPTnpUL9/k4XCc0JUSIzH+dyFqM4M0/daRTyrXmP4dxhch9ujxGSHlxYmtf1/

TjUtHiPIm0kvOlLnqAG/gKhFNw7nVCjLLPa3wTZ8JUY+CnZybw5akoJnegGn4h3j

t7Vh5GIxfWCIt1X0kjj6ZCS0g3DBTC+FKie5WDFt4pIBQS9CxfezTZpCv46BpTU1

7R8Z2M7Du/iHZfvMRN+e+Gh+g26YrJty1VZ+VyJ+Ft8jrLjGW+pA3KTd1jj8Cn1i

O9aimMAFH5Aa1eJFr/bDx3Wa7PMNIhiD4qXQtyCiNOBgd3FZ5GaceTa8vBYhhnKK

Go4DVKik30fBraOY4jgPChEXf9OWOyB9t9VetwAd1JzMsOIiyfe+J1cfoKeqZOoy

sCU8rwZUgM4LwCbZ4g==

-----END CERTIFICATE-----

subject=C = CN, ST = Beijing, L = Beijing, O = example, OU = Personal, CN = www.shuhong.com

issuer=C = CN, ST = Beijing, L = Beijing, O = example, OU = Personal, CN = www.shuhong.com

---

No client certificate CA names sent

Peer signing digest: SHA256

Peer signature type: RSA-PSS

Server Temp Key: X25519, 253 bits

---

SSL handshake has read 3926 bytes and written 376 bytes

Verification error: self signed certificate in certificate chain

---

New, TLSv1.2, Cipher is ECDHE-RSA-AES128-GCM-SHA256

Server public key is 4096 bit

Secure Renegotiation IS supported

Compression: NONE

Expansion: NONE

No ALPN negotiated

SSL-Session:

Protocol : TLSv1.2

Cipher : ECDHE-RSA-AES128-GCM-SHA256

Session-ID: 65C8A7BC079BB6946D246D71534F8F7AD21854F483CDC7279153AAAD133AC7AD

Session-ID-ctx:

Master-Key: 8DFB31B0AC527A96584990042CF90F6EAB70EED61F5A27BDC3668F8C590D290601F7E94A2D76FCCC46C9FA8B3BAE2145

PSK identity: None

PSK identity hint: None

SRP username: None

TLS session ticket lifetime hint: 300 (seconds)

TLS session ticket:

0000 - d1 5e 0e 9f 72 f9 0e 4a-f5 2a 15 5d b7 3b 6d e0 .^..r..J.*.].;m.

0010 - 53 3a ba bb 8c 29 4c 81-92 67 12 f9 85 96 0c 36 S:...)L..g.....6

0020 - f9 d2 39 58 38 d5 86 b1-dd 06 eb 9c 18 c8 61 25 ..9X8.........a%

0030 - 9c 7b 54 77 ae 67 49 9c-f4 5b d8 ff f3 e7 e0 47 .{Tw.gI..[.....G

0040 - 2c a9 61 aa b8 b4 ca 54-65 7a 46 7c 77 6a 7f 79 ,.a....TezF|wj.y

0050 - e6 e0 f8 d5 f2 20 b0 79-37 cd 9d 51 0a 99 33 7c ..... .y7..Q..3|

0060 - c7 60 aa ed 95 2e d4 11-88 87 84 40 9a 79 79 14 .`.........@.yy.

0070 - 1d 7e d8 ef 53 15 20 35-5b 59 17 67 d8 ef 23 0d .~..S. 5[Y.g..#.

0080 - c6 cf 09 f3 13 21 2e e8-4b 59 9a d8 dd b9 d8 6c .....!..KY.....l

0090 - 2c 7e a4 8b 23 03 88 77-eb 29 71 98 b3 15 c6 10 ,~..#..w.)q.....

00a0 - 72 8b 80 67 14 7a 20 3c-82 58 11 04 b1 bd 4c c2 r..g.z <.X....L.

Start Time: 1668149915

Timeout : 7200 (sec)

Verify return code: 19 (self signed certificate in certificate chain)

Extended master secret: yes

---

nginx + php-fpm wordpress + mysql

mysql

php-fpm wordpress

[root@k8s-Master-01 php-fpm-wordpress]#kubectl create svc clusterip phpwp -n lnmp --tcp 9000:9000 --dry-run=client -o yaml > 01-service-phpwp.yaml

[root@k8s-Master-01 php-fpm-wordpress]#vim 01-service-phpwp.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: phpwp
  name: phpwp
  namespace: lnmp
spec:
  ports:
  - name: 9000-9000
    port: 9000
    protocol: TCP
    targetPort: 9000
  selector:
    app: phpwp
  type: ClusterIP
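The phpwp Service only exposes the php-fpm FastCGI port (9000), so an nginx front end has to hand PHP requests to it with fastcgi_pass rather than proxy_pass. A minimal sketch of such an nginx configuration, kept in a ConfigMap in the lnmp namespace; the ConfigMap name, server_name and document root are assumptions, and it presumes that nginx and php-fpm see the same WordPress files under /var/www/html (for example by both mounting the same NFS-backed claim).

```yaml
# Hypothetical nginx configuration for the nginx + php-fpm layout.
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-phpwp-conf          # hypothetical name
  namespace: lnmp
data:
  wordpress.conf: |
    server {
        listen 80;
        server_name wp.shuhong.com;      # hypothetical host name
        # must be the same path php-fpm sees, because SCRIPT_FILENAME is built from it
        root /var/www/html;
        index index.php;

        location / {
            # WordPress "pretty permalinks": fall back to index.php
            try_files $uri $uri/ /index.php?$args;
        }

        location ~ \.php$ {
            # "phpwp" resolves to the ClusterIP Service above (same namespace)
            fastcgi_pass phpwp:9000;
            fastcgi_index index.php;
            include fastcgi_params;
            fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        }
    }
```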

Re-initialize the cluster and switch the kube-proxy Service mode to ipvs

[root@k8s-Master-01 lnmp]#cat ~/kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
kind: InitConfiguration
localAPIEndpoint:
  # IP address of the first control-plane node being initialized
  advertiseAddress: 10.0.0.201
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///run/cri-dockerd.sock
  imagePullPolicy: IfNotPresent
  # Hostname of the first control-plane node
  name: k8s-Master-01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
  - effect: NoSchedule
    key: node-role.kubernetes.io/control-plane
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
# Control-plane endpoint; here it is bound to the domain name kubeapi.shuhong.com
controlPlaneEndpoint: "kubeapi.shuhong.com:6443"
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.25.3
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 192.168.0.0/16
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
# Proxy mode kube-proxy uses to implement Services; the default is iptables
mode: "ipvs"

[root@k8s-Master-01 ~]# kubeadm init --config kubeadm-config.yaml --upload-certs

[init] Using Kubernetes version: v1.25.3

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master-01 kubeapi.shuhong.com kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.0.0.201]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master-01 localhost] and IPs [10.0.0.201 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master-01 localhost] and IPs [10.0.0.201 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 26.101091 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

88933b924db0b819a6789ab790fb225f049259f4eb20d0b878d9f6d97c8faf3e

[mark-control-plane] Marking the node k8s-master-01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master-01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: hixc6t.kn8t1rvkgwp44ysn

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join kubeapi.shuhong.com:6443 --token hixc6t.kn8t1rvkgwp44ysn \

--discovery-token-ca-cert-hash sha256:91b32c7e50dcf885d718837e8865b860375268231a89e462a006571002e75c69 \

--control-plane --certificate-key 88933b924db0b819a6789ab790fb225f049259f4eb20d0b878d9f6d97c8faf3e

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join kubeapi.shuhong.com:6443 --token hixc6t.kn8t1rvkgwp44ysn \

--discovery-token-ca-cert-hash sha256:91b32c7e50dcf885d718837e8865b860375268231a89e462a006571002e75c69

[root@k8s-Master-01 ~]#

[root@k8s-Master-01 ~]#cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

cp: overwrite '/root/.kube/config'? y

[root@k8s-Master-01 ~]#cd /data/

[root@k8s-Master-01 data]#kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

# Join the remaining control-plane nodes to the cluster

kubeadm join kubeapi.shuhong.com:6443 --token hixc6t.kn8t1rvkgwp44ysn --discovery-token-ca-cert-hash sha256:91b32c7e50dcf885d718837e8865b860375268231a89e462a006571002e75c69 --control-plane --certificate-key 88933b924db0b819a6789ab790fb225f049259f4eb20d0b878d9f6d97c8faf3e --cri-socket unix:///run/cri-dockerd.sock

# Join the worker nodes to the cluster

kubeadm join kubeapi.shuhong.com:6443 --token hixc6t.kn8t1rvkgwp44ysn --discovery-token-ca-cert-hash sha256:91b32c7e50dcf885d718837e8865b860375268231a89e462a006571002e75c69 --cri-socket unix:///run/cri-dockerd.sock

# Verify that kube-proxy is running in ipvs mode

[root@k8s-Master-01 lnmp]#kubectl get cm -n kube-system kube-proxy -o yaml

apiVersion: v1

data:

config.conf: |-

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0

bindAddressHardFail: false

clientConnection:

acceptContentTypes: ""

burst: 0

contentType: ""

kubeconfig: /var/lib/kube-proxy/kubeconfig.conf

qps: 0

clusterCIDR: 192.168.0.0/16

configSyncPeriod: 0s

conntrack:

maxPerCore: null

min: null

tcpCloseWaitTimeout: null

tcpEstablishedTimeout: null

detectLocal:

bridgeInterface: ""

interfaceNamePrefix: ""

detectLocalMode: ""

enableProfiling: false

healthzBindAddress: ""

hostnameOverride: ""

iptables:

masqueradeAll: false

masqueradeBit: null

minSyncPeriod: 0s

syncPeriod: 0s

ipvs:

excludeCIDRs: null

minSyncPeriod: 0s

scheduler: ""

strictARP: false

syncPeriod: 0s

tcpFinTimeout: 0s

tcpTimeout: 0s

udpTimeout: 0s

kind: KubeProxyConfiguration

metricsBindAddress: ""

mode: ipvs

nodePortAddresses: null

oomScoreAdj: null

portRange: ""

showHiddenMetricsForVersion: ""

udpIdleTimeout: 0s

winkernel:

enableDSR: false

forwardHealthCheckVip: false

networkName: ""

rootHnsEndpointName: ""

sourceVip: ""

kubeconfig.conf: |-

apiVersion: v1

kind: Config

clusters:

- cluster:

certificate-authority: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

server: https://kubeapi.shuhong.com:6443

name: default

contexts:

- context:

cluster: default

namespace: default

user: default

name: default

current-context: default

users:

- name: default

user:

tokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token

kind: ConfigMap

metadata:

creationTimestamp: "2022-11-13T01:36:14Z"

labels:

app: kube-proxy

name: kube-proxy

namespace: kube-system

resourceVersion: "282"

uid: 3ea05e1d-402f-409a-a006-7caa61b63b05

[root@k8s-Master-01 lnmp]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 10.0.0.201:6443 Masq 1 7 0

-> 10.0.0.202:6443 Masq 1 0 0

-> 10.0.0.203:6443 Masq 1 0 0

TCP 10.96.0.10:53 rr

-> 192.168.94.1:53 Masq 1 0 0

-> 192.168.94.2:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 192.168.94.1:9153 Masq 1 0 0

-> 192.168.94.2:9153 Masq 1 0 0

TCP 10.103.63.245:3306 rr

-> 192.168.204.1:3306 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 192.168.94.1:53 Masq 1 0 0

-> 192.168.94.2:53 Masq 1 0 0

Pod/Wordpress-APACHE

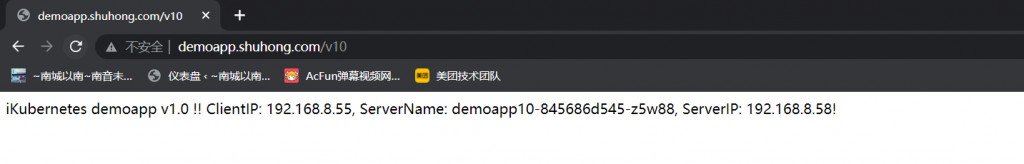

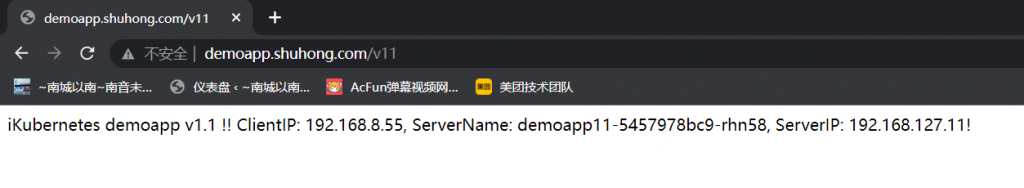

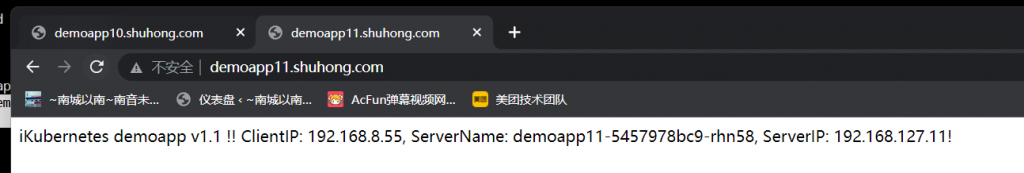

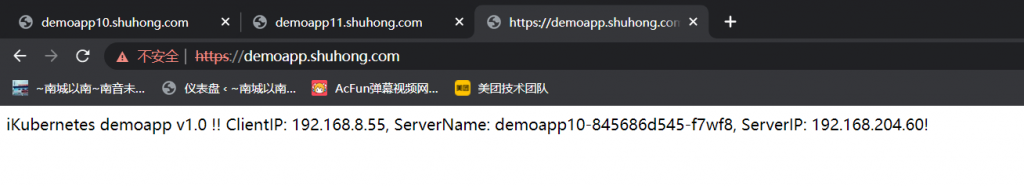

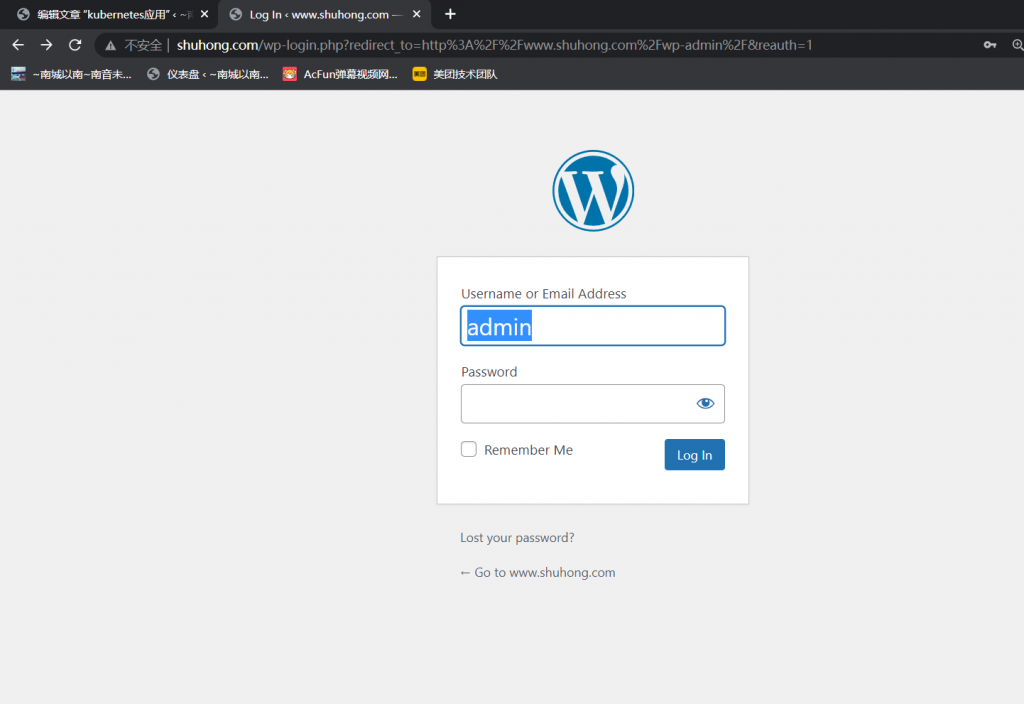

Deployed on Kubernetes, with the Service exposed through an ExternalIP; MySQL runs outside the cluster, and WordPress reaches MySQL by its Kubernetes Service name.

[root@rocky8 ~]#mysql -p123456

mysql> create database wpdb ;

Query OK, 1 row affected (0.02 sec)

mysql> create user wpuser@'%' identified by '123456';

Query OK, 0 rows affected (0.01 sec)

mysql> grant all on wpdb.* to wpuser@'%';

Query OK, 0 rows affected (0.02 sec)

[root@k8s-Master-01 test]#cat mysql-service.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: mysql-external
  namespace: default
subsets:
- addresses:
  - ip: 10.0.0.151
  ports:
  - name: mysql
    port: 3306
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-external
  namespace: default
spec:
  type: ClusterIP
  clusterIP: None
  ports:
  - name: mysql        # keep identical to the port name in the Endpoints object above
    port: 3306
    targetPort: 3306
    protocol: TCP

[root@k8s-Master-01 test]#kubectl apply -f mysql-service.yaml
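Endpoints is the older API for backing a selector-less Service; on this Kubernetes version the EndpointSlice mirroring controller copies the hand-written Endpoints object into an EndpointSlice automatically. As a sketch, the same external address could instead be declared directly as an EndpointSlice, used in place of the Endpoints object rather than alongside it. The object name is hypothetical; the kubernetes.io/service-name label is what ties the slice to the mysql-external Service, and the port name should equal the port name declared in that Service.

```yaml
# Hypothetical EndpointSlice alternative to the Endpoints object in mysql-service.yaml
apiVersion: discovery.k8s.io/v1
kind: EndpointSlice
metadata:
  name: mysql-external-1                      # any unique name
  namespace: default
  labels:
    # links this slice to the selector-less Service "mysql-external"
    kubernetes.io/service-name: mysql-external
addressType: IPv4
ports:
  - name: mysql                               # set to the port name used in the Service
    protocol: TCP
    port: 3306
endpoints:
  - addresses:
      - "10.0.0.151"                          # the external MySQL host
```

Because the Service is headless, cluster DNS answers a lookup of mysql-external directly with 10.0.0.151, which is all WordPress needs.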

[root@k8s-Master-01 test]#vim wordpress/01-service-wordpress.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: wordpress
  name: wordpress
spec:
  ports:
  - name: 80-80
    port: 80
    protocol: TCP
    targetPort: 80
  externalIPs:
  - 10.0.0.220
  selector:
    app: wordpress
  type: NodePort

[root@k8s-Master-01 test]#cat wordpress/02-pod-wordpress.yaml

apiVersion: v1
kind: Pod
metadata:
  name: wordpress
  namespace: default
  labels:
    app: wordpress
spec:
  containers:
  - name: wordpress
    image: wordpress:6.1-apache
    env:
    - name: WORDPRESS_DB_HOST
      value: mysql-external
    - name: WORDPRESS_DB_NAME
      valueFrom:
        secretKeyRef:
          name: mysql-secret
          key: db.name
    - name: WORDPRESS_DB_USER
      valueFrom:
        secretKeyRef:
          name: mysql-secret
          key: db.user.name
    - name: WORDPRESS_DB_PASSWORD
      valueFrom:
        secretKeyRef:
          name: mysql-secret
          key: db.user.pass
    resources:
      requests:
        memory: "128M"
        cpu: "200m"
      limits:
        memory: "512M"
        cpu: "400m"
    # securityContext:
    #   runAsUser: 999
    volumeMounts:
    - mountPath: /var/www/html
      name: nfs-pvc-wp
  volumes:
  - name: nfs-pvc-wp
    persistentVolumeClaim:
      claimName: pvc-nfs-sc
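This Pod, and the MySQL StatefulSet later on, read their settings from a Secret named mysql-secret, which these notes do not show. A sketch consistent with the database and account created on the external MySQL server (wpdb / wpuser / 123456); the root.pass value is a placeholder, since the notes never give it.

```yaml
# Hypothetical reconstruction of mysql-secret (not shown in the original notes)
apiVersion: v1
kind: Secret
metadata:
  name: mysql-secret
  namespace: default
type: Opaque
stringData:                  # plain text here; the API server stores it base64-encoded under data
  db.name: wpdb              # database created on 10.0.0.151
  db.user.name: wpuser
  db.user.pass: "123456"     # quoted: stringData values must be strings, not YAML integers
  root.pass: "CHANGE_ME"     # placeholder; only the MySQL StatefulSet uses this key
```

The same object can be created imperatively with `kubectl create secret generic mysql-secret --from-literal=db.name=wpdb --from-literal=db.user.name=wpuser --from-literal=db.user.pass=123456 --from-literal=root.pass=CHANGE_ME`.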

[root@k8s-Master-01 test]#kubectl apply -f wordpress/

# Verify

[root@k8s-Master-01 test]#kubectl get pods

NAME READY STATUS RESTARTS AGE

wordpress 1/1 Running 0 12m

[root@k8s-Master-01 test]#kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 125m

mysql-external ClusterIP None <none> 3306/TCP 17m

wordpress NodePort 10.111.250.69 10.0.0.220 80:31401/TCP 13m

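Pod/Wordpress-FPM and nginx

Deployed on Kubernetes: the Nginx Service is exposed through an ExternalIP and reverse-proxies to WordPress; MySQL runs outside the cluster, and WordPress reaches MySQL by its Kubernetes Service name.

Pod/Tomcat-jpress and Nginx

Deployed on Kubernetes: the Nginx Service is exposed through an ExternalIP and reverse-proxies to Tomcat; MySQL runs outside the cluster, and JPress reaches MySQL by its Kubernetes Service name.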

[root@k8s-Master-01 test]#cat jpress/01-service-jpress.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: jpress
  name: jpress
spec:
  ports:
  - name: 8080-8080
    port: 8080
    protocol: TCP
    targetPort: 8080
  selector:
    app: jpress
  type: ClusterIP

[root@k8s-Master-01 test]#cat jpress/pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: jpress
  namespace: default
  labels:
    app: jpress
spec:
  containers:
  - name: jpress
    image: registry.cn-shenzhen.aliyuncs.com/shuzihan/warehouse:jpress5.0.2v1.0
    volumeMounts:
    - mountPath: /data/website/ROOT
      name: nfs-pvc-jp
  volumes:
  - name: nfs-pvc-jp
    persistentVolumeClaim:
      claimName: pvc-nfs-sc-jp

[root@k8s-Master-01 test]#cat jpress/sc/01-sc.yaml

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-csi-jpress
provisioner: nfs.csi.k8s.io
parameters:
  #server: nfs-server.default.svc.cluster.local
  server: nfs-server.nfs.svc.cluster.local
  share: /
#reclaimPolicy: Delete
reclaimPolicy: Retain
volumeBindingMode: Immediate
mountOptions:
  - hard
  - nfsvers=4.1

[root@k8s-Master-01 test]#cat jpress/sc/02-pvc.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-nfs-sc-jp
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
  storageClassName: nfs-csi-jpress

[root@k8s-Master-01 test]#cat nginx-jpress/01-service-jpress.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: nginx-jpress
  name: nginx-jpress
spec:
  ports:
  - name: 8080-8080
    port: 8080
    protocol: TCP
    targetPort: 8080
  externalIPs:
  - 10.0.0.220
  selector:
    app: nginx-jpress
  type: NodePort

[root@k8s-Master-01 test]#cat nginx-jpress/02-nginx-pod.yaml

---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-jpress
  namespace: default
  labels:
    app: nginx-jpress
spec:
  containers:
  - image: nginx:alpine
    name: nginxserver
    volumeMounts:
    - name: nginxconf
      mountPath: /etc/nginx/conf.d/
      readOnly: true
    - mountPath: /data/www/ROOT
      name: jp
  volumes:
  - name: nginxconf
    configMap:
      name: nginx-jpress
      optional: false
  - name: jp
    persistentVolumeClaim:
      claimName: pvc-nfs-sc-jp

[root@k8s-Master-01 test]#cat nginx-jpress/03-configmap-nginx-jpress.yaml

apiVersion: v1
data:
  myserver-status.cfg: |
    location /nginx-status {
        stub_status on;
        access_log off;
    }
  myserver.conf: |
    upstream tomcat {
        server jpress:8080;
    }
    server {
        listen 8080;
        server_name jp.shuhong.com;
        location / {
            proxy_pass http://tomcat;
            proxy_set_header Host $http_host;
        }
    }
kind: ConfigMap
metadata:
  creationTimestamp: null
  name: nginx-jpress

[root@k8s-Master-01 test]#kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

jpress ClusterIP 10.105.254.92 <none> 8080/TCP 114m

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 4h12m

mysql-external ClusterIP None <none> 3306/TCP 144m

nginx-jpress NodePort 10.109.235.64 10.0.0.220 8080:30674/TCP 90m

wordpress NodePort 10.111.250.69 10.0.0.220 80:31401/TCP 140m

[root@k8s-Master-01 test]#kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-17b3a376-521a-4e57-8449-f3393b1eaec9 10Gi RWX Retain Bound default/pvc-nfs-sc-jp nfs-csi-jpress 33m

pvc-1b60a273-4298-438e-a729-b63bebc74abe 10Gi RWX Retain Bound lnmp/pvc-wp nfs-csi-wp 3h36m

pvc-c4020aca-fc9e-45d9-8c3d-ef6dffc76ba0 10Gi RWX Retain Bound default/pvc-nfs-sc nfs-csi 147m

[root@k8s-Master-01 test]#kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-nfs-sc Bound pvc-c4020aca-fc9e-45d9-8c3d-ef6dffc76ba0 10Gi RWX nfs-csi 147m

pvc-nfs-sc-jp Bound pvc-17b3a376-521a-4e57-8449-f3393b1eaec9 10Gi RWX nfs-csi-jpress 34m

[root@k8s-Master-01 test]#kubectl get pods

NAME READY STATUS RESTARTS AGE

jpress 1/1 Running 0 2m47s

nginx-jpress 1/1 Running 0 2m42s

wordpress 1/1 Running 0 141m

Deployment: wordpress + apache

[root@k8s-Master-01 wordpress]#cat 03-deployment-wordpress.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wordpress
  template:
    metadata:
      labels:
        app: wordpress
    spec:
      containers:
      - name: wordpress
        image: wordpress:6.1-apache
        env:
        - name: WORDPRESS_DB_HOST
          value: mysql-external
        - name: WORDPRESS_DB_NAME
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: db.name
        - name: WORDPRESS_DB_USER
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: db.user.name
        - name: WORDPRESS_DB_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: db.user.pass
        resources:
          requests:
            memory: "128M"
            cpu: "200m"
          limits:
            memory: "512M"
            cpu: "400m"
        # securityContext:
        #   runAsUser: 999
        volumeMounts:
        - mountPath: /var/www/html
          name: nfs-pvc-wp
      volumes:
      - name: nfs-pvc-wp
        persistentVolumeClaim:
          claimName: pvc-nfs-sc

[root@k8s-Master-01 lnmp]#kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

wordpress 1/1 1 1 14m

[root@k8s-Master-01 lnmp]#kubectl get pods

NAME READY STATUS RESTARTS AGE

wordpress-97577cb54-ggc7x 1/1 Running 0 14m
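For the rolling-update requirement below, the Deployment needs more than one replica, a readiness probe so that the Service only sends traffic to Pods that can answer, and an update strategy that adds new capacity before removing old. A sketch of a strategic merge patch that does this; the file name, replica count, probe path and timings are assumptions to tune. All replicas can share /var/www/html because the claim pvc-nfs-sc is ReadWriteMany (RWX).

```yaml
# Hypothetical 04-rolling-patch.yaml: strategic merge patch for Deployment "wordpress"
spec:
  replicas: 3                    # several WordPress instances
  minReadySeconds: 5             # a new Pod must stay Ready this long before it counts as available
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1                # start at most one extra Pod at a time
      maxUnavailable: 0          # never drop below the desired replica count
  template:
    spec:
      containers:
        - name: wordpress        # merged into the existing container by name
          readinessProbe:
            httpGet:
              path: /
              port: 80           # any 2xx/3xx response counts as ready
            initialDelaySeconds: 10
            periodSeconds: 5
          lifecycle:
            preStop:
              exec:
                # keep serving briefly while the Pod is removed from the Service endpoints
                command: ["sleep", "5"]
```

Apply it with `kubectl patch deployment wordpress --patch-file 04-rolling-patch.yaml`, trigger an update (for example `kubectl set image deployment/wordpress wordpress=<new-tag>`, where `<new-tag>` is a placeholder), and follow it with `kubectl rollout status deployment/wordpress` while a client keeps requesting the Service at 10.0.0.220; any failed requests observed during the rollout are what the verification report should record.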

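Deployment: Wordpress-FPM and nginx

Deployment: Tomcat-jpress and Nginx

Requirement: run nginx and WordPress as multiple instances, test the rolling-update process, check whether the service is interrupted during the update, and write up a verification report.

MySQL, orchestrated with a StatefulSet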

[root@k8s-Master-01 statefulset]#cat 01-sc.yaml

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-csi-statefulset
provisioner: nfs.csi.k8s.io
parameters:
  #server: nfs-server.default.svc.cluster.local
  server: nfs-server.nfs.svc.cluster.local
  share: /
#reclaimPolicy: Delete
reclaimPolicy: Retain
volumeBindingMode: Immediate
mountOptions:
  - hard
  - nfsvers=4.1

[root@k8s-Master-01 statefulset]#vim statefulset-mysql.yaml

apiVersion: v1
kind: Service
metadata:
  name: statefulset-mysql
  namespace: default
spec:
  clusterIP: None
  ports:
  - port: 3306
  selector:
    app: statefulset-mysql
    controller: sts
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: sts
spec:
  # Must name the headless Service above; it gives each Pod a stable DNS name
  # such as sts-0.statefulset-mysql.default.svc.cluster.local
  serviceName: statefulset-mysql
  replicas: 2
  selector:
    matchLabels:
      app: statefulset-mysql
      controller: sts
  template:
    metadata:
      labels:
        app: statefulset-mysql
        controller: sts
    spec:
      containers:
      - name: mysql-sts
        image: mysql:8.0
        imagePullPolicy: IfNotPresent
        env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: root.pass
        - name: MYSQL_DATABASE
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: db.name
        - name: MYSQL_USER
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: db.user.name
        - name: MYSQL_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: db.user.pass
        ports:
        - containerPort: 3306
          name: mysql
        volumeMounts:
        - name: appdata
          mountPath: /var/lib/mysql
  volumeClaimTemplates:
  - metadata:
      name: appdata
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: nfs-csi-statefulset
      resources:
        requests:
          storage: 2Gi

[root@k8s-Master-01 statefulset]#kubectl get pods

NAME READY STATUS RESTARTS AGE

sts-0 1/1 Running 0 2m44s

sts-1 1/1 Running 0 27s

wordpress-97577cb54-ggc7x 1/1 Running 0 98m

[root@k8s-Master-01 statefulset]#kubectl get statefulsets

NAME READY AGE

sts 2/2 3m44s

[root@k8s-Master-01 statefulset]#kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

appdata-sts-0 Bound pvc-ff0e971f-4e85-420b-a436-3c70ed8440e0 2Gi RWO nfs-csi-statefulset 7m33s

appdata-sts-1 Bound pvc-7476fdf4-c0b7-4faf-9908-09a588177241 2Gi RWO nfs-csi-statefulset 6m34s

pvc-nfs-sc Bound pvc-c4020aca-fc9e-45d9-8c3d-ef6dffc76ba0 10Gi RWX nfs-csi 47h

pvc-nfs-sc-jp Bound pvc-58c698dc-28a2-49e3-8271-4c4a8d62b8c8 10Gi RWX nfs-csi-jpress 38h
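The StatefulSet defines no health checks. A sketch of readiness and liveness probes for the mysql-sts container, written as a strategic merge patch; the file name and timings are assumptions, and the liveness delay in particular may need raising if first-time initialization on NFS is slow. mysqladmin ping exits 0 whenever the server is up, even if it rejects the unauthenticated connection, so no password is needed; `-h 127.0.0.1` forces a TCP connection, which (in the official image) fails while the entrypoint is still running its temporary init server with networking disabled.

```yaml
# Hypothetical 05-mysql-probes.yaml: strategic merge patch for StatefulSet "sts"
spec:
  template:
    spec:
      containers:
        - name: mysql-sts            # merged into the existing container by name
          readinessProbe:
            exec:
              command: ["mysqladmin", "ping", "-h", "127.0.0.1"]
            initialDelaySeconds: 15
            periodSeconds: 10
            timeoutSeconds: 5
          livenessProbe:
            exec:
              command: ["mysqladmin", "ping", "-h", "127.0.0.1"]
            initialDelaySeconds: 60  # leave room for the first database initialization
            periodSeconds: 20
            timeoutSeconds: 5
```

After `kubectl patch statefulset sts --patch-file 05-mysql-probes.yaml`, the StatefulSet replaces its Pods one at a time, starting from the highest ordinal (sts-1, then sts-0).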

Deploy a Kafka cluster with the Strimzi Operator and test sending and receiving messages

Strimzi Operator

https://strimzi.io/quickstarts/

[root@k8s-Master-01 kafka]#kubectl create ns kafka

namespace/kafka created

[root@k8s-Master-01 kafka]#kubectl create -f 'https://strimzi.io/install/latest?namespace=kafka' -n kafka

clusterrole.rbac.authorization.k8s.io/strimzi-kafka-broker created

clusterrole.rbac.authorization.k8s.io/strimzi-cluster-operator-namespaced created

customresourcedefinition.apiextensions.k8s.io/kafkamirrormaker2s.kafka.strimzi.io created

rolebinding.rbac.authorization.k8s.io/strimzi-cluster-operator-leader-election created

customresourcedefinition.apiextensions.k8s.io/kafkaconnectors.kafka.strimzi.io created

customresourcedefinition.apiextensions.k8s.io/kafkabridges.kafka.strimzi.io created

customresourcedefinition.apiextensions.k8s.io/kafkamirrormakers.kafka.strimzi.io created

clusterrolebinding.rbac.authorization.k8s.io/strimzi-cluster-operator-kafka-broker-delegation created

rolebinding.rbac.authorization.k8s.io/strimzi-cluster-operator-watched created

clusterrolebinding.rbac.authorization.k8s.io/strimzi-cluster-operator created

customresourcedefinition.apiextensions.k8s.io/kafkatopics.kafka.strimzi.io created

customresourcedefinition.apiextensions.k8s.io/kafkaconnects.kafka.strimzi.io created

deployment.apps/strimzi-cluster-operator created

clusterrole.rbac.authorization.k8s.io/strimzi-cluster-operator-global created

customresourcedefinition.apiextensions.k8s.io/kafkarebalances.kafka.strimzi.io created

clusterrole.rbac.authorization.k8s.io/strimzi-cluster-operator-leader-election created

clusterrolebinding.rbac.authorization.k8s.io/strimzi-cluster-operator-kafka-client-delegation created

customresourcedefinition.apiextensions.k8s.io/kafkausers.kafka.strimzi.io created

clusterrole.rbac.authorization.k8s.io/strimzi-cluster-operator-watched created

clusterrole.rbac.authorization.k8s.io/strimzi-kafka-client created

configmap/strimzi-cluster-operator created

clusterrole.rbac.authorization.k8s.io/strimzi-entity-operator created

rolebinding.rbac.authorization.k8s.io/strimzi-cluster-operator-entity-operator-delegation created

rolebinding.rbac.authorization.k8s.io/strimzi-cluster-operator created

serviceaccount/strimzi-cluster-operator created

customresourcedefinition.apiextensions.k8s.io/strimzipodsets.core.strimzi.io created

customresourcedefinition.apiextensions.k8s.io/kafkas.kafka.strimzi.io created

[root@k8s-Master-01 kafka]#kubectl api-versions

admissionregistration.k8s.io/v1

apiextensions.k8s.io/v1

apiregistration.k8s.io/v1

apps/v1

authentication.k8s.io/v1

authorization.k8s.io/v1

autoscaling/v1

autoscaling/v2

autoscaling/v2beta2

batch/v1

certificates.k8s.io/v1

coordination.k8s.io/v1

core.strimzi.io/v1beta2

crd.projectcalico.org/v1

discovery.k8s.io/v1

events.k8s.io/v1

flowcontrol.apiserver.k8s.io/v1beta1

flowcontrol.apiserver.k8s.io/v1beta2

kafka.strimzi.io/v1alpha1

kafka.strimzi.io/v1beta1

kafka.strimzi.io/v1beta2

networking.k8s.io/v1

node.k8s.io/v1

policy/v1

rbac.authorization.k8s.io/v1

scheduling.k8s.io/v1

storage.k8s.io/v1

storage.k8s.io/v1beta1

v1

[root@k8s-Master-01 kafka]#kubectl api-resources --api-group=kafka.strimzi.io

NAME SHORTNAMES APIVERSION NAMESPACED KIND

kafkabridges kb kafka.strimzi.io/v1beta2 true KafkaBridge

kafkaconnectors kctr kafka.strimzi.io/v1beta2 true KafkaConnector

kafkaconnects kc kafka.strimzi.io/v1beta2 true KafkaConnect

kafkamirrormaker2s kmm2 kafka.strimzi.io/v1beta2 true KafkaMirrorMaker2

kafkamirrormakers kmm kafka.strimzi.io/v1beta2 true KafkaMirrorMaker

kafkarebalances kr kafka.strimzi.io/v1beta2 true KafkaRebalance

kafkas k kafka.strimzi.io/v1beta2 true Kafka

kafkatopics kt kafka.strimzi.io/v1beta2 true KafkaTopic

kafkausers ku kafka.strimzi.io/v1beta2 true KafkaUser
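Among these resources, `KafkaTopic` lets a topic be declared instead of relying on the broker auto-creating it on first use. The sketch below prints such a resource for the `my-cluster` cluster deployed in the next steps. The `strimzi.io/cluster` label tells the Topic Operator which cluster owns the topic; the function name and the default partition and replica counts are assumptions (3 replicas fits the three-broker example cluster).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Strimzi KafkaTopic custom resource.
# Args: topic name, cluster name (default my-cluster), partitions, replicas.
kafka_topic_manifest() {
  local topic=$1 cluster=${2:-my-cluster} partitions=${3:-3} replicas=${4:-3}
  cat <<EOF
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaTopic
metadata:
  name: ${topic}
  labels:
    strimzi.io/cluster: ${cluster}
spec:
  partitions: ${partitions}
  replicas: ${replicas}
EOF
}
```

Usage, once the Kafka cluster is running: `kafka_topic_manifest my-topic | kubectl apply -n kafka -f -`, then `kubectl get kt -n kafka` should list it.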

[root@k8s-Master-01 kafka]#kubectl get pods -n kafka

NAME READY STATUS RESTARTS AGE

strimzi-cluster-operator-56d64c8584-j9rs7 1/1 Running 0 2m32s

#Cluster manifest: https://github.com/strimzi/strimzi-kafka-operator/blob/0.32.0/examples/kafka/kafka-ephemeral.yaml (this example uses ephemeral storage, not persistent volumes)

Deploy the example Kafka cluster

kubectl apply -f https://strimzi.io/examples/latest/kafka/kafka-ephemeral.yaml -n kafka

or

kubectl apply -f https://raw.githubusercontent.com/strimzi/strimzi-kafka-operator/0.32.0/examples/kafka/kafka-ephemeral.yaml -n kafka

[root@k8s-Master-01 kafka]#kubectl apply -f https://strimzi.io/examples/latest/kafka/kafka-ephemeral.yaml -n kafka

kafka.kafka.strimzi.io/my-cluster created

#Watch memory usage: if the node runs short of memory, it may crash

[root@k8s-Master-01 kafka]#kubectl get pods -n kafka -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-cluster-entity-operator-9d45b6b89-nk6cd 3/3 Running 1 (2m42s ago) 5m37s 192.168.204.23 k8s-node-03 <none> <none>

my-cluster-kafka-0 1/1 Running 0 4m33s 192.168.204.25 k8s-node-03 <none> <none>

my-cluster-kafka-1 1/1 Running 0 4m33s 192.168.204.30 k8s-node-03 <none> <none>

my-cluster-kafka-2 1/1 Running 0 4m33s 192.168.204.26 k8s-node-03 <none> <none>

my-cluster-zookeeper-0 1/1 Running 0 4m33s 192.168.204.29 k8s-node-03 <none> <none>

my-cluster-zookeeper-1 1/1 Running 0 4m33s 192.168.204.27 k8s-node-03 <none> <none>

my-cluster-zookeeper-2 1/1 Running 0 4m32s 192.168.204.28 k8s-node-03 <none> <none>

strimzi-cluster-operator-56d64c8584-spqcw 1/1 Running 0 5m39s 192.168.204.22 k8s-node-03 <none> <none>
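All eight pods above were scheduled onto `k8s-node-03`, which is why memory matters here. One way to bound it is to give the brokers and ZooKeeper explicit memory requests/limits and JVM heap sizes through the `resources` and `jvmOptions` fields of the Strimzi `Kafka` resource. The sketch builds a JSON merge patch for that; the function name and all sizes are assumptions for a small lab, with the heap kept below the container limit to leave room for off-heap memory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a JSON merge patch that sets memory requests/limits
# and JVM heap (-Xms/-Xmx) for the Kafka brokers and ZooKeeper nodes.
# Args: broker memory, broker heap, zookeeper memory, zookeeper heap.
kafka_memory_patch() {
  local broker_mem=${1:-1Gi} broker_heap=${2:-512m}
  local zk_mem=${3:-512Mi} zk_heap=${4:-256m}
  printf '{"spec":{"kafka":{"resources":{"requests":{"memory":"%s"},"limits":{"memory":"%s"}},"jvmOptions":{"-Xms":"%s","-Xmx":"%s"}},"zookeeper":{"resources":{"requests":{"memory":"%s"},"limits":{"memory":"%s"}},"jvmOptions":{"-Xms":"%s","-Xmx":"%s"}}}}\n' \
    "$broker_mem" "$broker_mem" "$broker_heap" "$broker_heap" \
    "$zk_mem" "$zk_mem" "$zk_heap" "$zk_heap"
}
```

Usage: `kubectl -n kafka patch kafka my-cluster --type merge -p "$(kafka_memory_patch)"`. The same fields can instead be edited directly into `kafka-ephemeral.yaml` before applying it.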

#Test

[root@k8s-Master-01 chapter8]# kubectl -n kafka run kafka-producer -ti --image=quay.io/strimzi/kafka:0.32.0-kafka-3.3.1 --rm=true --restart=Never -- bin/kafka-console-producer.sh --bootstrap-server my-cluster-kafka-bootstrap:9092 --topic my-topic

If you don't see a command prompt, try pressing enter.

>set kafka

>hello my k8s
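Both console clients in this test stay attached until interrupted with Ctrl+C. For a scripted check, `kafka-console-consumer.sh` also accepts `--max-messages` and `--timeout-ms`, which make it exit on its own. The wrapper below is a sketch built on the consumer command used in this test; the function name and the `DRY_RUN` switch (print the command instead of running it) are hypothetical, and it drops `-t` so it can run without a terminal.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the console consumer used in this test.
# It exits after <max> messages, or after 30 s with no new message.
KAFKA_IMAGE=quay.io/strimzi/kafka:0.32.0-kafka-3.3.1
BOOTSTRAP=my-cluster-kafka-bootstrap:9092

consume_topic() {
  local topic=$1 max=${2:-10}
  local cmd=(kubectl -n kafka run kafka-consumer -i --image="$KAFKA_IMAGE"
    --rm=true --restart=Never --
    bin/kafka-console-consumer.sh --bootstrap-server "$BOOTSTRAP"
    --topic "$topic" --from-beginning
    --max-messages "$max" --timeout-ms 30000)
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    printf '%s\n' "${cmd[*]}"   # only show what would be run
  else
    "${cmd[@]}"
  fi
}
```

With the two messages sent above, `consume_topic my-topic 2` should print them and return without Ctrl+C.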

[root@k8s-Master-01 ~]# kubectl -n kafka run kafka-consumer -ti --image=quay.io/strimzi/kafka:0.32.0-kafka-3.3.1 --rm=true --restart=Never -- bin/kafka-console-consumer.sh --bootstrap-server my-cluster-kafka-bootstrap:9092 --topic my-topic --from-beginning

If you don't see a command prompt, try pressing enter.