Domain name and address plan

kubeapi.shuhong.com

10.0.0.201 k8s-Master-01

10.0.0.202 k8s-Master-02

10.0.0.203 k8s-Master-03

10.0.0.204 k8s-Node-01

10.0.0.205 k8s-Node-02

10.0.0.206 k8s-Node-03
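The plan above can be turned into hosts-file entries. This is a minimal sketch, assuming name resolution is done through `/etc/hosts` rather than DNS (the notes do not say which) and that `kubeapi.shuhong.com` initially points at the first control-plane node, as noted later when the other nodes join. `print_hosts_entries` is a hypothetical helper name.

```shell
# Print /etc/hosts-style entries for the address plan.
# $1 (optional): the IP that kubeapi.shuhong.com should resolve to.
# Assumption: it defaults to the first control-plane node, 10.0.0.201.
print_hosts_entries() {
  api_ip=${1:-10.0.0.201}
  printf '%s %s\n' "$api_ip" kubeapi.shuhong.com
  i=1
  for name in k8s-Master-01 k8s-Master-02 k8s-Master-03 \
              k8s-Node-01 k8s-Node-02 k8s-Node-03; do
    # Hosts are numbered consecutively from 10.0.0.201.
    printf '10.0.0.%s %s\n' "$((200 + i))" "$name"
    i=$((i + 1))
  done
}

print_hosts_entries
```

As root, the output could be appended on each machine with `print_hosts_entries >> /etc/hosts`.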

Initialize the first control plane

[root@k8s-Master-01 ~]#kubeadm config print init-defaults

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 1.2.3.4

bindPort: 6443

nodeRegistration:

criSocket: unix:///var/run/containerd/containerd.sock

imagePullPolicy: IfNotPresent

name: node

taints: null

---

apiServer:

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: registry.k8s.io

kind: ClusterConfiguration

kubernetesVersion: 1.25.0

networking:

dnsDomain: cluster.local

serviceSubnet: 10.96.0.0/12

scheduler: {}

[root@k8s-Master-01 ~]#cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

kind: InitConfiguration

localAPIEndpoint:

# IP address of the first control-plane node being initialized.

advertiseAddress: 10.0.0.201

bindPort: 6443

nodeRegistration:

criSocket: unix:///run/cri-dockerd.sock

imagePullPolicy: IfNotPresent

# Hostname of the first control-plane node.

name: k8s-Master-01

taints:

- effect: NoSchedule

key: node-role.kubernetes.io/master

- effect: NoSchedule

key: node-role.kubernetes.io/control-plane

---

apiServer:

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

# Control-plane endpoint; here it is bound to the domain name kubeapi.shuhong.com.

controlPlaneEndpoint: "kubeapi.shuhong.com:6443"

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers

kind: ClusterConfiguration

kubernetesVersion: v1.25.3

networking:

dnsDomain: cluster.local

serviceSubnet: 10.96.0.0/12

podSubnet: 10.244.0.0/16

scheduler: {}

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

# Proxy mode that kube-proxy uses for Services; the default is iptables.

mode: "ipvs"

[root@k8s-Master-01 ~]#kubeadm init --config kubeadm-config.yaml --upload-certs

[init] Using Kubernetes version: v1.25.3

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master-01 kubeapi.shuhong.com kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.0.0.201]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master-01 localhost] and IPs [10.0.0.201 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master-01 localhost] and IPs [10.0.0.201 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 23.586074 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

875cab05ea86caa9c315d46d97fae5bfcf7c6a03868be1d4ba178018bdcd0857

[mark-control-plane] Marking the node k8s-master-01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master-01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: eg8xpc.s01pm4gf07jhbsjj

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join kubeapi.shuhong.com:6443 --token eg8xpc.s01pm4gf07jhbsjj \

--discovery-token-ca-cert-hash sha256:f53946aa9f1522f839be14b739ce33cf09325331f1c017debe7206018d50f606 \

--control-plane --certificate-key 875cab05ea86caa9c315d46d97fae5bfcf7c6a03868be1d4ba178018bdcd0857 --cri-socket unix:///run/cri-dockerd.sock

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join kubeapi.shuhong.com:6443 --token eg8xpc.s01pm4gf07jhbsjj \

--discovery-token-ca-cert-hash sha256:f53946aa9f1522f839be14b739ce33cf09325331f1c017debe7206018d50f606 --cri-socket unix:///run/cri-dockerd.sock
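The token, CA hash and certificate key printed above are needed again for every join. This is a minimal sketch, assuming the `kubeadm init` output was saved to a file (the file name `kubeadm-init.log` and the helper name `join_params` are hypothetical), that pulls the three values back out of the log.

```shell
# Extract the join parameters from a saved `kubeadm init` log.
# $1: path to the log file (assumption: you captured the output yourself,
#     for example with `kubeadm init ... | tee kubeadm-init.log`).
join_params() {
  log=$1
  # Bootstrap token, taken from the first "--token <value>" occurrence.
  token=$(grep -o -- '--token [a-z0-9.]*' "$log" | head -n 1 | cut -d' ' -f2)
  # CA public key hash, in the sha256:<hex> form kubeadm prints.
  hash=$(grep -o 'sha256:[0-9a-f]*' "$log" | head -n 1)
  # Certificate key, only present when --upload-certs was used.
  key=$(grep -o -- '--certificate-key [0-9a-f]*' "$log" | head -n 1 | cut -d' ' -f2)
  printf 'token=%s\nhash=%s\ncertificate_key=%s\n' "$token" "$hash" "$key"
}
```

Both secrets are short-lived: the default token TTL shown in the init defaults is 24h, and the output above says the uploaded certificates are deleted after two hours. Once they expire, `kubeadm token create --print-join-command` and the `kubeadm init phase upload-certs --upload-certs` command mentioned above should produce fresh values.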

Deploy the flannel network plugin (19.2)

# Run on every node

~# mkdir /opt/bin/

~# curl -L https://github.com/flannel-io/flannel/releases/download/v0.20.1/flanneld-amd64 -o /opt/bin/flanneld

~# chmod +x /opt/bin/flanneld

# Run on the master node

kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

[root@k8s-Master-01 ~]#kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

[root@k8s-Master-01 ~]#kubectl get pods -n kube-flannel

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-2gvmj 1/1 Running 0 7s
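flannel only works if its network matches the `podSubnet` given to kubeadm (10.244.0.0/16 in the config above). This is a minimal sketch that compares the two files before applying the manifest. It assumes the manifest carries the CIDR in a `"Network": "..."` entry inside `net-conf.json`, which is how the upstream `kube-flannel.yml` is laid out as far as I know. The helper names are hypothetical.

```shell
# Extract the pod CIDR from a kubeadm config file (first podSubnet key).
pod_subnet() {
  sed -n 's/^[[:space:]]*podSubnet:[[:space:]]*//p' "$1" | head -n 1
}

# Extract the "Network" value from flannel's net-conf.json in the manifest.
flannel_network() {
  sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# $1: kubeadm config file, $2: flannel manifest.
# Prints the result and returns non-zero when the CIDRs differ.
check_pod_cidr() {
  kubeadm_cidr=$(pod_subnet "$1")
  flannel_cidr=$(flannel_network "$2")
  if [ -n "$kubeadm_cidr" ] && [ "$kubeadm_cidr" = "$flannel_cidr" ]; then
    echo "match: $kubeadm_cidr"
  else
    echo "mismatch: kubeadm=$kubeadm_cidr flannel=$flannel_cidr"
    return 1
  fi
}
```

For the files used here the call would be `check_pod_cidr kubeadm-config.yaml kube-flannel.yml`.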

Add the remaining nodes

# Note: at this stage kubeapi.shuhong.com must still resolve to the first control-plane node on every machine

[root@k8s-Master-02 ~]# kubeadm join kubeapi.shuhong.com:6443 --token eg8xpc.s01pm4gf07jhbsjj \

> --discovery-token-ca-cert-hash sha256:f53946aa9f1522f839be14b739ce33cf09325331f1c017debe7206018d50f606 \

> --control-plane --certificate-key 875cab05ea86caa9c315d46d97fae5bfcf7c6a03868be1d4ba178018bdcd0857 --cri-socket unix:///run/cri-dockerd.sock

....

[root@k8s-Master-02 ~]#cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-Master-03 ~]#kubeadm join kubeapi.shuhong.com:6443 --token eg8xpc.s01pm4gf07jhbsjj \

> --discovery-token-ca-cert-hash sha256:f53946aa9f1522f839be14b739ce33cf09325331f1c017debe7206018d50f606 \

> --control-plane --certificate-key 875cab05ea86caa9c315d46d97fae5bfcf7c6a03868be1d4ba178018bdcd0857 --cri-socket unix:///run/cri-dockerd.sock

....

[root@k8s-Master-03 ~]#cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-Node-01 ~]#kubeadm join kubeapi.shuhong.com:6443 --token eg8xpc.s01pm4gf07jhbsjj \

> --discovery-token-ca-cert-hash sha256:f53946aa9f1522f839be14b739ce33cf09325331f1c017debe7206018d50f606 --cri-socket unix:///run/cri-dockerd.sock

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@k8s-Node-02 ~]#kubeadm join kubeapi.shuhong.com:6443 --token eg8xpc.s01pm4gf07jhbsjj \

> --discovery-token-ca-cert-hash sha256:f53946aa9f1522f839be14b739ce33cf09325331f1c017debe7206018d50f606 --cri-socket unix:///run/cri-dockerd.sock

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@k8s-Node-03 ~]#kubeadm join kubeapi.shuhong.com:6443 --token eg8xpc.s01pm4gf07jhbsjj \

> --discovery-token-ca-cert-hash sha256:f53946aa9f1522f839be14b739ce33cf09325331f1c017debe7206018d50f606 --cri-socket unix:///run/cri-dockerd.sock

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Deploy nginx on the worker nodes and have every node resolve the domain name to itself for high availability

# Apply the following nginx configuration on all three worker nodes

[root@k8s-Node-01 ~]#apt -y install nginx

[root@k8s-Node-01 ~]#cat /etc/nginx/nginx.conf

...

include /etc/nginx/conf.d/*.conf; # place this at the outermost level (outside the http block), because stream is a top-level context

...

[root@k8s-Node-01 ~]#cat /etc/nginx/conf.d/kubeapi.shuhong.com.conf

stream {

upstream master {

server k8s-Master-01:6443 max_fails=2 fail_timeout=30s;

server k8s-Master-02:6443 max_fails=2 fail_timeout=30s;

server k8s-Master-03:6443 max_fails=2 fail_timeout=30s;

}

server {

listen 6443;

proxy_pass master;

}

}
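The same stream configuration is needed on all three worker nodes, so it can be generated rather than typed by hand. This is a minimal sketch; `print_stream_conf` is a hypothetical helper name, and the upstream hosts are passed as arguments.

```shell
# Print an nginx stream block that balances TCP port 6443 across the
# control-plane nodes given as arguments.
print_stream_conf() {
  echo 'stream {'
  echo '    upstream master {'
  for host in "$@"; do
    # Same failure-detection settings as the hand-written config.
    printf '        server %s:6443 max_fails=2 fail_timeout=30s;\n' "$host"
  done
  echo '    }'
  echo '    server {'
  echo '        listen 6443;'
  echo '        proxy_pass master;'
  echo '    }'
  echo '}'
}

print_stream_conf k8s-Master-01 k8s-Master-02 k8s-Master-03
```

Redirect the output to `/etc/nginx/conf.d/kubeapi.shuhong.com.conf`, then check it with `nginx -t` before reloading nginx.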

# Every node resolves the domain name to its own IP
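Repointing the name on each node is a one-line change to the hosts file. This is a minimal sketch, assuming resolution goes through `/etc/hosts` (the notes do not say how the name is resolved). `set_host_entry` is a hypothetical helper that replaces any existing entry for a name.

```shell
# Point NAME at IP in a hosts-style file, replacing earlier entries.
# $1: hosts file, $2: IP address, $3: host name.
# Note: a line is dropped entirely if NAME appears among its host names.
set_host_entry() {
  file=$1 ip=$2 name=$3
  tmp=$(mktemp) || return 1
  # Keep every line that does not list NAME after the address field.
  awk -v n="$name" '{ keep = 1; for (i = 2; i <= NF; i++) if ($i == n) keep = 0 } keep' \
    "$file" > "$tmp"
  printf '%s %s\n' "$ip" "$name" >> "$tmp"
  # Write back in place so the file keeps its owner and permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

On k8s-Node-01, for example, this would be `set_host_entry /etc/hosts 10.0.0.204 kubeapi.shuhong.com`.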

# Check that the nodes are healthy

[root@k8s-Master-01 ~]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-01 Ready control-plane 32m v1.25.3

k8s-master-02 Ready control-plane 14m v1.25.3

k8s-master-03 Ready control-plane 13m v1.25.3

k8s-node-01 Ready <none> 11m v1.25.3

k8s-node-02 Ready <none> 11m v1.25.3

k8s-node-03 Ready <none> 10m v1.25.3

# Test by running pods

[root@k8s-Master-01 ~]#kubectl create deployment demoapp --image=ikubernetes/demoapp:v1.0 --replicas=2

deployment.apps/demoapp created

[root@k8s-Master-01 ~]#kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-55c5f88dcb-gcbfd 1/1 Running 0 2m27s 10.244.4.3 k8s-node-02 <none> <none>

demoapp-55c5f88dcb-hxmd6 1/1 Running 0 2m27s 10.244.3.3 k8s-node-01 <none> <none>

[root@k8s-Master-01 ~]#kubectl create svc nodeport demoapp --tcp 80:80

service/demoapp created

[root@k8s-Master-01 ~]#kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

demoapp NodePort 10.110.192.110 <none> 80:32347/TCP 6s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 41m

[root@k8s-Master-01 ~]#kubectl get ep

NAME ENDPOINTS AGE

demoapp 10.244.3.3:80,10.244.4.3:80 12s

kubernetes 10.0.0.201:6443,10.0.0.202:6443,10.0.0.203:6443 41m

Deploy the nfs-csi plugin

[root@k8s-Master-01 ~]#kubectl create namespace nfs

namespace/nfs created

[root@k8s-Master-01 ~]#kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/csi-driver-nfs/master/deploy/example/nfs-provisioner/nfs-server.yaml --namespace nfs

service/nfs-server created

deployment.apps/nfs-server created

[root@k8s-Master-01 ~]#kubectl get ns

NAME STATUS AGE

default Active 45m

kube-flannel Active 31m

kube-node-lease Active 45m

kube-public Active 45m

kube-system Active 45m

nfs Active 103s

[root@k8s-Master-01 ~]#kubectl get svc -n nfs

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nfs-server ClusterIP 10.106.172.240 <none> 2049/TCP,111/UDP 51s

[root@k8s-Master-01 ~]#kubectl get pods -n nfs -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nfs-server-5847b99d99-gw87t 1/1 Running 0 57s 10.244.5.4 k8s-node-03 <none> <none>

[root@k8s-Master-01 ~]#curl -skSL https://raw.githubusercontent.com/kubernetes-csi/csi-driver-nfs/v3.1.0/deploy/install-driver.sh | bash -s v3.1.0 -

Installing NFS CSI driver, version: v3.1.0 ...

serviceaccount/csi-nfs-controller-sa created

clusterrole.rbac.authorization.k8s.io/nfs-external-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/nfs-csi-provisioner-binding created

csidriver.storage.k8s.io/nfs.csi.k8s.io created

deployment.apps/csi-nfs-controller created

daemonset.apps/csi-nfs-node created

NFS CSI driver installed successfully.

[root@k8s-Master-01 ~]#kubectl get pods -n nfs

NAME READY STATUS RESTARTS AGE

nfs-server-5847b99d99-gw87t 1/1 Running 0 6m2s

[root@k8s-Master-01 ~]#kubectl -n kube-system get pod -o wide -l 'app in (csi-nfs-node,csi-nfs-controller)'

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

csi-nfs-controller-65cf7d587-lfs9j 3/3 Running 0 67s 10.0.0.206 k8s-node-03 <none> <none>

csi-nfs-controller-65cf7d587-mqkwk 3/3 Running 0 67s 10.0.0.204 k8s-node-01 <none> <none>

csi-nfs-node-d2wvq 3/3 Running 0 64s 10.0.0.206 k8s-node-03 <none> <none>

csi-nfs-node-g5d6b 3/3 Running 0 64s 10.0.0.205 k8s-node-02 <none> <none>

csi-nfs-node-kf22c 3/3 Running 0 64s 10.0.0.202 k8s-master-02 <none> <none>

csi-nfs-node-khkbl 3/3 Running 0 64s 10.0.0.204 k8s-node-01 <none> <none>

csi-nfs-node-qp5lg 3/3 Running 0 64s 10.0.0.203 k8s-master-03 <none> <none>

csi-nfs-node-vdsd5 3/3 Running 0 64s 10.0.0.201 k8s-master-01 <none> <none>

[root@k8s-Master-01 ~]#kubectl apply -f /data/test/sc-pvc/01-sc.yaml

storageclass.storage.k8s.io/nfs-csi created

[root@k8s-Master-01 ~]#cat /data/test/sc-pvc/01-sc.yaml

---

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: nfs-csi

provisioner: nfs.csi.k8s.io

parameters:

#server: nfs-server.default.svc.cluster.local

server: nfs-server.nfs.svc.cluster.local

share: /

#reclaimPolicy: Delete

reclaimPolicy: Retain

volumeBindingMode: Immediate

mountOptions:

- hard

- nfsvers=4.1

Deploy ingress

# Project: https://github.com/kubernetes/ingress-nginx

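# The upstream images could not be pulled here, so images pushed to a personal Alibaba Cloud registry are used instead; download the deployment YAML and change the image paths accordingly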

[root@k8s-Master-01 ~]#kubectl apply -f deploy.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

serviceaccount/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

configmap/ingress-nginx-controller created

service/ingress-nginx-controller created

service/ingress-nginx-controller-admission created

deployment.apps/ingress-nginx-controller created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

ingressclass.networking.k8s.io/nginx created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

[root@k8s-Master-01 ~]#kubectl get pods -n ingress-nginx -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-admission-create-rftjv 0/1 Completed 0 56s 10.244.5.5 k8s-node-03 <none> <none>

ingress-nginx-admission-patch-b67hf 0/1 Completed 2 56s 10.244.3.4 k8s-node-01 <none> <none>

ingress-nginx-controller-575f7cf88b-dj95w 1/1 Running 0 57s 10.244.4.4 k8s-node-02 <none> <none>

[root@k8s-Master-01 ~]#kubectl get svc -n ingress-nginx -owide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

ingress-nginx-controller LoadBalancer 10.109.127.77 <pending> 80:31192/TCP,443:30564/TCP 70s app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

ingress-nginx-controller-admission ClusterIP 10.110.7.75 <none> 443/TCP 69s app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

# The cluster has no load-balancer component, so externalIPs is used as the access entry point

[root@k8s-Master-01 ~]#kubectl edit svc ingress-nginx-controller -n ingress-nginx

...

externalTrafficPolicy: Cluster

externalIPs:

- 10.0.0.220

...
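The same change can be made without opening an editor by sending a patch. This is a minimal sketch that builds the JSON body for `kubectl patch`; `external_ips_patch` is a hypothetical helper name.

```shell
# Build a patch body that sets spec.externalIPs to the given addresses.
external_ips_patch() {
  list=
  for ip in "$@"; do
    # Append each address as a quoted JSON string, comma-separated.
    list="${list:+$list,}\"$ip\""
  done
  printf '{"spec":{"externalIPs":[%s]}}\n' "$list"
}

external_ips_patch 10.0.0.220
```

It would be applied with `kubectl -n ingress-nginx patch svc ingress-nginx-controller -p "$(external_ips_patch 10.0.0.220)"`.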

[root@k8s-Master-01 ~]#kubectl get svc -n ingress-nginx -owide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

ingress-nginx-controller LoadBalancer 10.109.127.77 10.0.0.220 80:31192/TCP,443:30564/TCP 5m6s app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

ingress-nginx-controller-admission ClusterIP 10.110.7.75 <none> 443/TCP 5m5s app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

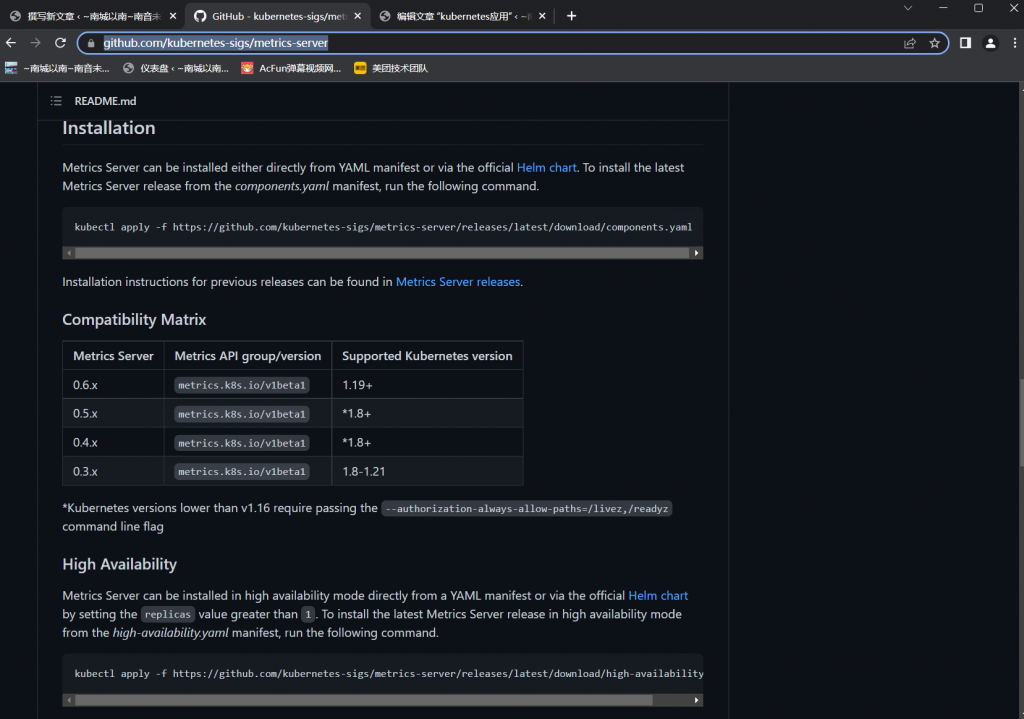

Deploy Metrics

#https://github.com/kubernetes-sigs/metrics-server

[root@k8s-Master-01 ~]#kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

# With the current high-availability setup, node hostnames cannot be resolved, so the pod never becomes ready

[root@k8s-Master-01 ~]#kubectl get pods -n kube-system -l k8s-app=metrics-server

NAME READY STATUS RESTARTS AGE

metrics-server-847d45fd4f-69k6b 0/1 Running 0 36m

# Adjust the manifest so that the nodes are reached directly by IP

[root@k8s-Master-01 ~]#curl -LO https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

0 0 0 0 0 0 0 0 --:--:-- 0:00:01 --:--:-- 0

100 4181 100 4181 0 0 2098 0 0:00:01 0:00:01 --:--:-- 18257

[root@k8s-Master-01 ~]#vim components.yaml

...

spec:

containers:

- args:

- --cert-dir=/tmp

- --secure-port=4443

- --kubelet-preferred-address-types=InternalIP,ExternalIP

- --kubelet-use-node-status-port

- --metric-resolution=15s

- --kubelet-insecure-tls

...
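The manual edit comes down to two changes in the container args: limit the preferred address types to IPs, and add `--kubelet-insecure-tls`. This is a minimal sketch that applies both with awk instead of an editor. It assumes the downloaded manifest contains the `--kubelet-preferred-address-types=` and `--metric-resolution=` lines shown above. `patch_metrics_args` is a hypothetical helper name.

```shell
# Print a patched copy of the metrics-server manifest on stdout.
# $1: path to components.yaml
patch_metrics_args() {
  file=$1
  add_tls=1
  # Do not add the flag a second time if it is already present.
  if grep -q -- '--kubelet-insecure-tls' "$file"; then
    add_tls=0
  fi
  awk -v add_tls="$add_tls" '
    # Reach kubelets by IP only, since node host names do not resolve here.
    { sub(/--kubelet-preferred-address-types=.*/, "--kubelet-preferred-address-types=InternalIP,ExternalIP") }
    { print }
    # Reuse the indentation of the metric-resolution line for the new flag.
    add_tls == 1 && /--metric-resolution=/ {
      sub(/--metric-resolution=.*/, "--kubelet-insecure-tls")
      print
    }
  ' "$file"
}
```

For example: `patch_metrics_args components.yaml > components-patched.yaml`, followed by `kubectl apply -f components-patched.yaml`. Note that `--kubelet-insecure-tls` skips verification of the kubelet serving certificates, which is acceptable for a lab cluster like this one but not something to carry into production unchanged.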

[root@k8s-Master-01 ~]#kubectl apply -f components.yaml

serviceaccount/metrics-server unchanged

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader unchanged

clusterrole.rbac.authorization.k8s.io/system:metrics-server unchanged

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader unchanged

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator unchanged

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server unchanged

service/metrics-server unchanged

deployment.apps/metrics-server configured

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io unchanged

[root@k8s-Master-01 ~]#kubectl get pods -n kube-system -l k8s-app=metrics-server

NAME READY STATUS RESTARTS AGE

metrics-server-659496f9b5-gmmjm 1/1 Running 0 42s

[root@k8s-Master-01 ~]#kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master-01 1544m 77% 1104Mi 59%

k8s-master-02 1153m 57% 808Mi 43%

k8s-master-03 1151m 57% 819Mi 44%

k8s-node-01 152m 3% 644Mi 16%

k8s-node-02 182m 4% 678Mi 17%

k8s-node-03 324m 8% 653Mi 17%

[root@k8s-Master-01 ~]#kubectl top pods

NAME CPU(cores) MEMORY(bytes)

demoapp-55c5f88dcb-gcbfd 1m 33Mi

demoapp-55c5f88dcb-hxmd6 1m 33Mi

test 0m 2Mi

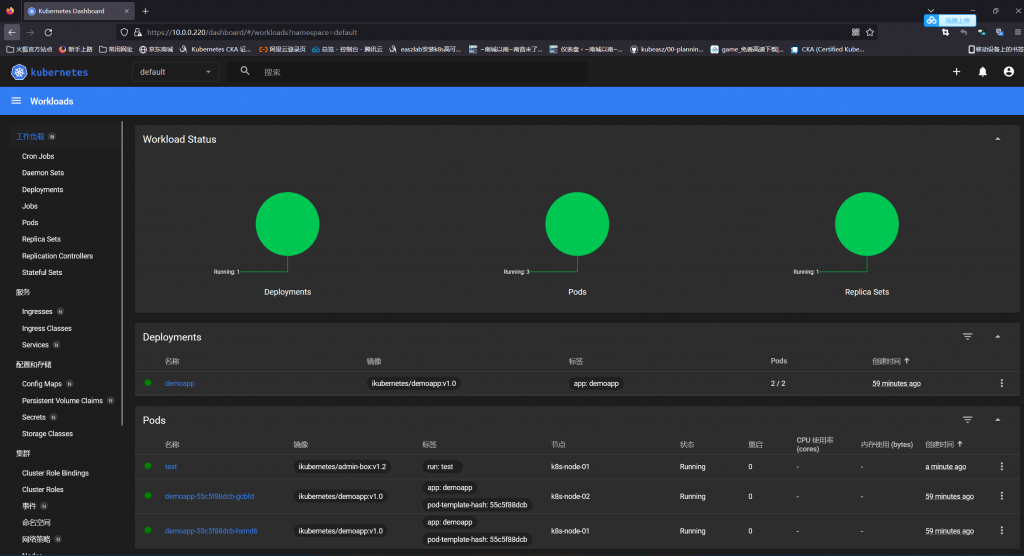

Deploy Dashboard

[root@k8s-Master-01 ~]#kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@k8s-Master-01 ~]#kubectl get ns

NAME STATUS AGE

default Active 71m

ingress-nginx Active 14m

kube-flannel Active 56m

kube-node-lease Active 71m

kube-public Active 71m

kube-system Active 71m

kubernetes-dashboard Active 25s

nfs Active 27m

[root@k8s-Master-01 ~]#kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-64bcc67c9c-5plvf 1/1 Running 0 45s

kubernetes-dashboard-5c8bd6b59-mjb9t 0/1 ContainerCreating 0 46s

[root@k8s-Master-01 ~]#kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.102.185.228 <none> 8000/TCP 51s

kubernetes-dashboard ClusterIP 10.100.178.49 <none> 443/TCP 52s

# Configure an ingress to add an access entry point

[root@k8s-Master-01 ~]#kubectl apply -f /data/ingress/ingress-kubernetes-dashboard.yaml

ingress.networking.k8s.io/dashboard created

[root@k8s-Master-01 ~]#vim /data/ingress/ingress-kubernetes-dashboard.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: dashboard

annotations:

ingress.kubernetes.io/ssl-passthrough: "true"

nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"

nginx.ingress.kubernetes.io/rewrite-target: /$2

namespace: kubernetes-dashboard

spec:

ingressClassName: nginx

rules:

- http:

paths:

- path: /dashboard(/|$)(.*)

pathType: Prefix

backend:

service:

name: kubernetes-dashboard

port:

number: 443

#host: dashboard.shuhong.com

[root@k8s-Master-01 ~]#kubectl get ingress -n kubernetes-dashboard

NAME CLASS HOSTS ADDRESS PORTS AGE

dashboard nginx * 10.0.0.220 80 101s

# Generate a login token

[root@k8s-Master-01 ~]#kubectl create sa dashboarduser

serviceaccount/dashboarduser created

[root@k8s-Master-01 ~]#kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=default:dashboarduser

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

[root@k8s-Master-01 ~]#vim test.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: test

name: test

spec:

serviceAccountName: dashboarduser

containers:

- image: ikubernetes/admin-box:v1.2

name: test

command: ['/bin/sh','-c','sleep 9999']

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Never

status: {}

[root@k8s-Master-01 ~]#kubectl apply -f test.yaml

pod/test created

[root@k8s-Master-01 ~]#kubectl get pods

NAME READY STATUS RESTARTS AGE

demoapp-55c5f88dcb-gcbfd 1/1 Running 0 58m

demoapp-55c5f88dcb-hxmd6 1/1 Running 0 58m

test 1/1 Running 0 20s

[root@k8s-Master-01 ~]#kubectl exec -it test -- /bin/sh

root@test # cat /var/run/secrets/kubernetes.io/serviceaccount/token

eyJhbGciOiJSUzI1NiIsImtpZCI6InBFTFlPbERrTjctTndBUG53WGdVeFk2UjBGWjM0LUdROU4ya2UxSWJKNmMifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzAwMzY0NzI5LCJpYXQiOjE2Njg4Mjg3MjksImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJkZWZhdWx0IiwicG9kIjp7Im5hbWUiOiJ0ZXN0IiwidWlkIjoiNDYwMzMxYmYtZGE4MC00NmIzLThlODUtNmY4NjE3NzVhY2Y2In0sInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJkYXNoYm9hcmR1c2VyIiwidWlkIjoiODAyMjgzYjQtYzk4NS00ZmM4LTg2YzMtNjc2NzFhYzAxYWFmIn0sIndhcm5hZnRlciI6MTY2ODgzMjMzNn0sIm5iZiI6MTY2ODgyODcyOSwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50OmRlZmF1bHQ6ZGFzaGJvYXJkdXNlciJ9.ZQurepCJ9no_CAWzYo4tJdgO1BIJZA83hRU4fVLA19mZ9uf_Nz9fNBUGJW3GDOGlg1OZaqmE_jCZ2BSvEFM6U6-S9dZZhde0PsB2vP0GbFtFkuihBmhAo2RhU7if5J6I9kYM8PHPXg5ha-7JTZcofarPRJG5llUYPshRIMtwMULqgPLgJicx1WNXNuRlfggylVYJPv3pFwseRLrTKdgG9vkUAdOHIZSdWkec-IPVskcWiotDKW-S1tu5nVQm2heylt2Lw9oyvRkUs-ig5k_ZQQevV9K1cbCdFNorz9G96YCoJAgSyOaNekoCaxrR61TjdJ_EF6EiAzIttiIhRIUjTg
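The token read from the pod is a JWT, so its payload (subject, expiry) can be inspected before pasting it into the Dashboard login. This is a minimal sketch, assuming a `base64` that accepts `-d` (GNU coreutils and busybox do). `jwt_payload` is a hypothetical helper name.

```shell
# Decode the payload (second dot-separated segment) of a JWT.
# $1: the token string
jwt_payload() {
  # JWTs use the URL-safe base64 alphabet; map it back to the standard one.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Restore the padding that JWT encoding strips.
  case $(( ${#seg} % 4 )) in
    2) seg="$seg==" ;;
    3) seg="$seg=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}
```

Running `jwt_payload "$(cat /var/run/secrets/kubernetes.io/serviceaccount/token)"` inside the pod prints the claims as JSON, including `exp`, after which the token stops working. On kubectl 1.24 and later, `kubectl create token dashboarduser` should also issue a token without a helper pod.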

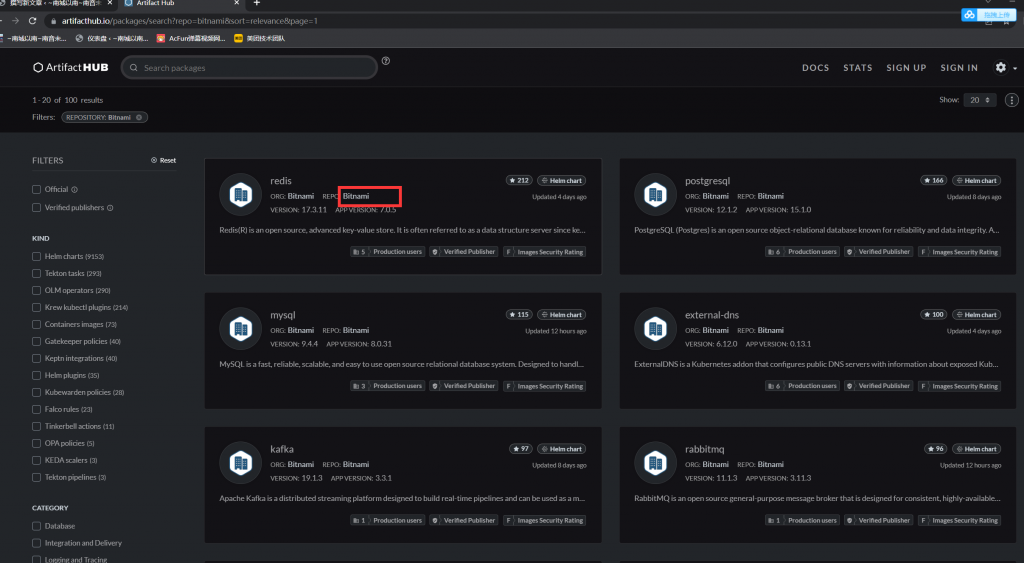

Install Helm

#Chart Hub: https://artifacthub.io/

# helm: https://github.com/helm/helm/releases/tag/v3.10.2

# Download the matching package

[root@k8s-Master-01 ~]#tar xf helm-v3.10.2-linux-amd64.tar.gz linux-amd64/

[root@k8s-Master-01 ~]#cd linux-amd64/

[root@k8s-Master-01 linux-amd64]#ls

helm LICENSE README.md

[root@k8s-Master-01 linux-amd64]#cp helm /usr/local/bin/

[root@k8s-Master-01 linux-amd64]#helm --help

The Kubernetes package manager

Common actions for Helm:

- helm search: search for charts

- helm pull: download a chart to your local directory to view

- helm install: upload the chart to Kubernetes

- helm list: list releases of charts

Environment variables:

| Name | Description |

|------------------------------------|---------------------------------------------------------------------------------------------------|

| $HELM_CACHE_HOME | set an alternative location for storing cached files. |

| $HELM_CONFIG_HOME | set an alternative location for storing Helm configuration. |

| $HELM_DATA_HOME | set an alternative location for storing Helm data. |

| $HELM_DEBUG | indicate whether or not Helm is running in Debug mode |

| $HELM_DRIVER | set the backend storage driver. Values are: configmap, secret, memory, sql. |

| $HELM_DRIVER_SQL_CONNECTION_STRING | set the connection string the SQL storage driver should use. |

| $HELM_MAX_HISTORY | set the maximum number of helm release history. |

| $HELM_NAMESPACE | set the namespace used for the helm operations. |

| $HELM_NO_PLUGINS | disable plugins. Set HELM_NO_PLUGINS=1 to disable plugins. |

| $HELM_PLUGINS | set the path to the plugins directory |

| $HELM_REGISTRY_CONFIG | set the path to the registry config file. |

| $HELM_REPOSITORY_CACHE | set the path to the repository cache directory |

| $HELM_REPOSITORY_CONFIG | set the path to the repositories file. |

| $KUBECONFIG | set an alternative Kubernetes configuration file (default "~/.kube/config") |

| $HELM_KUBEAPISERVER | set the Kubernetes API Server Endpoint for authentication |

| $HELM_KUBECAFILE | set the Kubernetes certificate authority file. |

| $HELM_KUBEASGROUPS | set the Groups to use for impersonation using a comma-separated list. |

| $HELM_KUBEASUSER | set the Username to impersonate for the operation. |

| $HELM_KUBECONTEXT | set the name of the kubeconfig context. |

| $HELM_KUBETOKEN | set the Bearer KubeToken used for authentication. |

| $HELM_KUBEINSECURE_SKIP_TLS_VERIFY | indicate if the Kubernetes API server's certificate validation should be skipped (insecure) |

| $HELM_KUBETLS_SERVER_NAME | set the server name used to validate the Kubernetes API server certificate |

| $HELM_BURST_LIMIT | set the default burst limit in the case the server contains many CRDs (default 100, -1 to disable)|

Helm stores cache, configuration, and data based on the following configuration order:

- If a HELM_*_HOME environment variable is set, it will be used

- Otherwise, on systems supporting the XDG base directory specification, the XDG variables will be used

- When no other location is set a default location will be used based on the operating system

By default, the default directories depend on the Operating System. The defaults are listed below:

| Operating System | Cache Path | Configuration Path | Data Path |

|------------------|---------------------------|--------------------------------|-------------------------|

| Linux | $HOME/.cache/helm | $HOME/.config/helm | $HOME/.local/share/helm |

| macOS | $HOME/Library/Caches/helm | $HOME/Library/Preferences/helm | $HOME/Library/helm |

| Windows | %TEMP%\helm | %APPDATA%\helm | %APPDATA%\helm |

Usage:

helm [command]

Available Commands:

completion generate autocompletion scripts for the specified shell

create create a new chart with the given name

dependency manage a chart's dependencies

env helm client environment information

get download extended information of a named release

help Help about any command

history fetch release history

install install a chart

lint examine a chart for possible issues

list list releases

package package a chart directory into a chart archive

plugin install, list, or uninstall Helm plugins

pull download a chart from a repository and (optionally) unpack it in local directory

push push a chart to remote

registry login to or logout from a registry

repo add, list, remove, update, and index chart repositories

rollback roll back a release to a previous revision

search search for a keyword in charts

show show information of a chart

status display the status of the named release

template locally render templates

test run tests for a release

uninstall uninstall a release

upgrade upgrade a release

verify verify that a chart at the given path has been signed and is valid

version print the client version information

Flags:

--burst-limit int client-side default throttling limit (default 100)

--debug enable verbose output

-h, --help help for helm

--kube-apiserver string the address and the port for the Kubernetes API server

--kube-as-group stringArray group to impersonate for the operation, this flag can be repeated to specify multiple groups.

--kube-as-user string username to impersonate for the operation

--kube-ca-file string the certificate authority file for the Kubernetes API server connection

--kube-context string name of the kubeconfig context to use

--kube-insecure-skip-tls-verify if true, the Kubernetes API server's certificate will not be checked for validity. This will make your HTTPS connections insecure

--kube-tls-server-name string server name to use for Kubernetes API server certificate validation. If it is not provided, the hostname used to contact the server is used

--kube-token string bearer token used for authentication

--kubeconfig string path to the kubeconfig file

-n, --namespace string namespace scope for this request

--registry-config string path to the registry config file (default "/root/.config/helm/registry/config.json")

--repository-cache string path to the file containing cached repository indexes (default "/root/.cache/helm/repository")

--repository-config string path to the file containing repository names and URLs (default "/root/.config/helm/repositories.yaml")

Use "helm [command] --help" for more information about a command.

[root@k8s-Master-01 ~]#helm repo add bitnami https://charts.bitnami.com/bitnami

"bitnami" has been added to your repositories

[root@k8s-Master-01 ~]#helm repo list

NAME URL

bitnami https://charts.bitnami.com/bitnami