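Tearing down the cluster

# Starting with the worker nodes, run the reset command on every node. It is best to reboot afterwards so that the cluster's kernel configuration, iptables rules, and IPVS tables are cleared.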
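kubeadm reset leaves iptables rules and IPVS tables in place, which is why a reboot is suggested. Where a reboot is inconvenient, a manual flush along the following lines may serve instead, run on each node once its reset has completed. This is only a sketch: the function name and the DRY_RUN switch are illustrative assumptions, and flushing iptables removes every rule on the host, not only the Kubernetes ones.

```shell
# Sketch: flush leftover packet-filter state after `kubeadm reset`.
# cleanup_network_state and DRY_RUN are hypothetical names for this example.
# DRY_RUN defaults to 1, so the commands are only printed unless DRY_RUN=0.
cleanup_network_state() {
  for c in \
    "iptables -F" \
    "iptables -t nat -F" \
    "iptables -t mangle -F" \
    "iptables -X" \
    "ipvsadm --clear"
  do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "would run: $c"
    else
      # Unquoted on purpose: split the string into command and arguments.
      $c
    fi
  done
}

# Preview the commands without touching the host.
cleanup_network_state
```

Running it as root with `DRY_RUN=0 cleanup_network_state` would execute the commands instead of printing them.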

kubeadm reset --cri-socket unix:///run/cri-dockerd.sock && rm -rf /etc/kubernetes/ /var/lib/kubelet /var/lib/dockershim /var/run/kubernetes /var/lib/cni /etc/cni/net.d

Rebuilding the cluster with the Calico network plugin

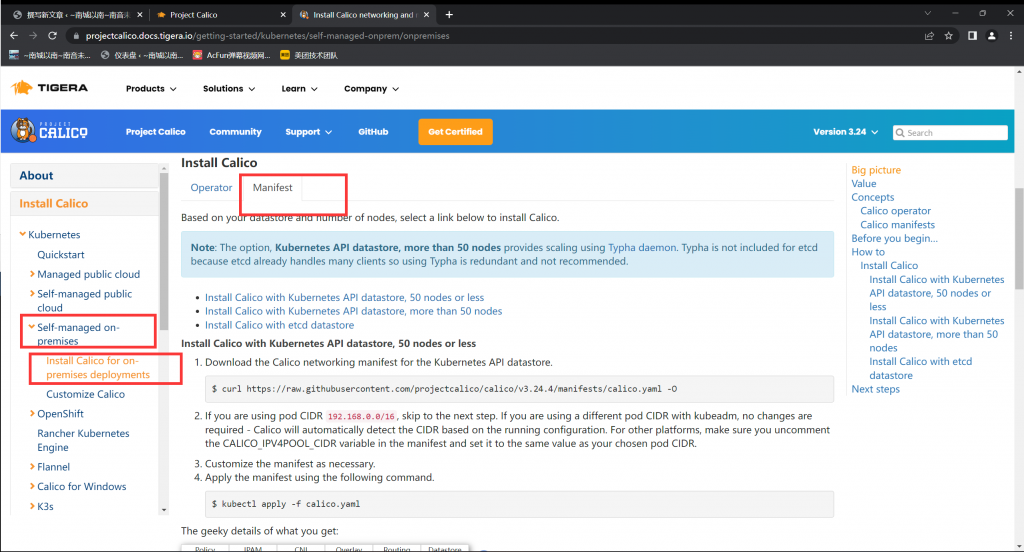

[root@k8s-Master-01 data]#curl https://raw.githubusercontent.com/projectcalico/calico/v3.24.4/manifests/calico.yaml -O

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 229k 100 229k 0 0 82206 0 0:00:02 0:00:02 --:--:-- 82177

[root@k8s-Master-01 data]#ll

total 248

drwxr-xr-x 4 root root 4096 Nov 8 21:01 ./

drwxr-xr-x 19 root root 4096 Nov 8 20:04 ../

-rw-r--r-- 1 root root 235192 Nov 8 21:01 calico.yaml

drwxr-xr-x 3 root root 4096 Nov 8 20:32 Kubernetes_Advanced_Practical/

drwxr-xr-x 9 root root 4096 Nov 8 20:06 learning-k8s/

[root@k8s-Master-01 data]#kubeadm init --control-plane-endpoint="kubeapi.shuhong.com" --kubernetes-version=v1.25.3 --pod-network-cidr=192.168.0.0/16 --service-cidr=10.96.0.0/12 --token-ttl=0 --cri-socket unix:///run/cri-dockerd.sock --upload-certs --image-repository=registry.aliyuncs.com/google_containers

[init] Using Kubernetes version: v1.25.3

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master-01 kubeapi.shuhong.com kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.0.0.201]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master-01 localhost] and IPs [10.0.0.201 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master-01 localhost] and IPs [10.0.0.201 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 12.020807 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

ecd4b52e56aeda1b309f1bdc068fcccb86e78a3a18bf62d6177ac20579c59a87

[mark-control-plane] Marking the node k8s-master-01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master-01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: m3yome.ec57pkfl2rbrz3c3

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join kubeapi.shuhong.com:6443 --token m3yome.ec57pkfl2rbrz3c3 \

--discovery-token-ca-cert-hash sha256:9dce5184d61a7b6a83c0806138a1a90343f529d49e2db841baf31b3945b827b0 \

--control-plane --certificate-key ecd4b52e56aeda1b309f1bdc068fcccb86e78a3a18bf62d6177ac20579c59a87

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join kubeapi.shuhong.com:6443 --token m3yome.ec57pkfl2rbrz3c3 \

--discovery-token-ca-cert-hash sha256:9dce5184d61a7b6a83c0806138a1a90343f529d49e2db841baf31b3945b827b0

[root@k8s-Master-01 data]#cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

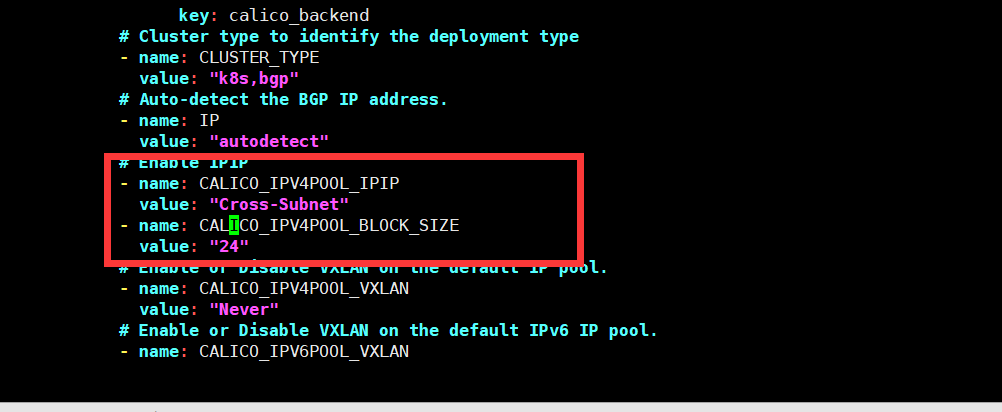

[root@k8s-Master-01 data]#vim calico.yaml

....
- name: CALICO_IPV4POOL_IPIP
  value: "Cross-Subnet"
- name: CALICO_IPV4POOL_BLOCK_SIZE
  value: "24"
....
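The block size controls how Calico's IPAM carves the pod CIDR into per-node allocation blocks. With the `--pod-network-cidr=192.168.0.0/16` passed to kubeadm init and a block size of 24, the arithmetic can be sketched as follows (the function name is an illustrative assumption; Calico's default IPv4 block size is 26):

```shell
# Sketch: how many IPAM blocks a pool yields, and how large each block is.
# Arguments: pool prefix length, block prefix length.
calico_block_math() {
  pool_prefix=$1
  block_size=$2
  blocks=$(( 1 << (block_size - pool_prefix) ))
  addrs=$(( 1 << (32 - block_size) ))
  echo "blocks=$blocks addresses_per_block=$addrs"
}

calico_block_math 16 24   # values used in this walkthrough
calico_block_math 16 26   # Calico's default block size, for comparison
```

A larger block means fewer blocks overall but more addresses held by each node that claims one.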

[root@k8s-Master-01 data]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-01 NotReady control-plane 8m24s v1.25.3

[root@k8s-Master-01 data]#kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

[root@k8s-Master-01 data]#kubectl get ns

NAME STATUS AGE

default Active 8m57s

kube-node-lease Active 8m59s

kube-public Active 8m59s

kube-system Active 9m

[root@k8s-Master-01 data]#kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-f79f7749d-7pqxz 0/1 ContainerCreating 0 99s

calico-node-wmdns 0/1 Init:2/3 0 99s

coredns-c676cc86f-77qwc 0/1 ContainerCreating 0 9m58s

coredns-c676cc86f-gcqlt 0/1 ContainerCreating 0 9m58s

etcd-k8s-master-01 1/1 Running 0 10m

kube-apiserver-k8s-master-01 1/1 Running 0 10m

kube-controller-manager-k8s-master-01 1/1 Running 0 10m

kube-proxy-f9rc5 1/1 Running 0 9m58s

kube-scheduler-k8s-master-01 1/1 Running 0 10m

# Add the master (control-plane) nodes

kubeadm join kubeapi.shuhong.com:6443 --token levsg3.xuj3v9wkgl9362us --discovery-token-ca-cert-hash sha256:7b214afe4dc7cfb13f74e8dcac9c76cbeea278800b19d21a2692389622b5470e --control-plane --certificate-key 1e3268422d15d44b87e35ef32ba8d4f5b912f59d25e06bedb3e8c10d961c4c34 --cri-socket unix:///run/cri-dockerd.sock

# Add the worker nodes

kubeadm join kubeapi.shuhong.com:6443 --token levsg3.xuj3v9wkgl9362us --discovery-token-ca-cert-hash sha256:7b214afe4dc7cfb13f74e8dcac9c76cbeea278800b19d21a2692389622b5470e --cri-socket unix:///run/cri-dockerd.sock
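The join commands printed by kubeadm init do not include `--cri-socket`, yet the commands above add it, presumably because these hosts use cri-dockerd and kubeadm needs to be told which CRI endpoint to use. A small helper can append the flag to whatever join command kubeadm prints. This is a sketch; `with_cri_socket` is a hypothetical name, and the default socket path is the one used throughout these notes.

```shell
# Sketch: append the CRI socket flag to a kubeadm join command string.
# $1 = join command as printed by kubeadm, $2 = optional socket URI.
with_cri_socket() {
  echo "$1 --cri-socket ${2:-unix:///run/cri-dockerd.sock}"
}

# On a control-plane node (not executed here), a fresh worker join command
# could be produced with:
#   with_cri_socket "$(kubeadm token create --print-join-command)"
```

For additional control-plane nodes the printed command also needs `--control-plane --certificate-key <key>`, where the key comes from `kubeadm init phase upload-certs --upload-certs` as the init output notes.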

[root@k8s-Master-01 ~]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-01 Ready control-plane 9m33s v1.25.3

k8s-master-02 Ready control-plane 4m35s v1.25.3

k8s-master-03 Ready control-plane 4m27s v1.25.3

k8s-node-01 Ready <none> 2m13s v1.25.3

k8s-node-02 Ready <none> 105s v1.25.3

k8s-node-03 Ready <none> 103s v1.25.3

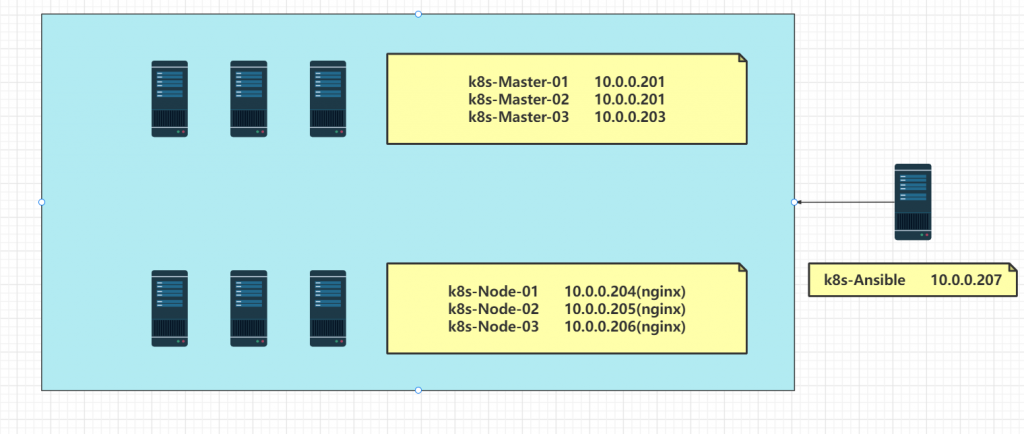

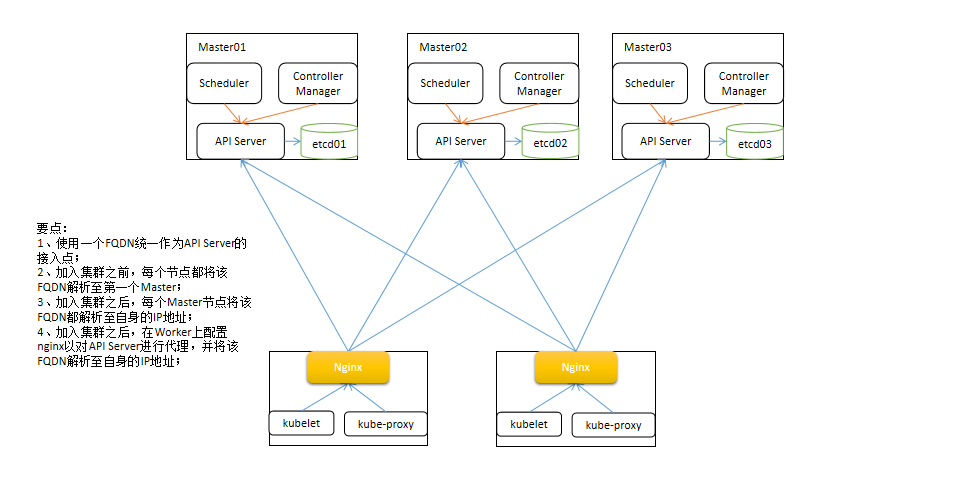

Deploy nginx on the worker nodes as a layer-4 load balancer pointing at the three master nodes, so that the control plane is highly available.

[root@k8s-Ansible ansible]#vim install_nginx.yml

---
- name: install nginx
  hosts: Node
  tasks:
    - name: apt
      apt:
        name: nginx
        state: present
    - name: copy
      copy:
        src: kubeapi.shuhong.com.conf
        dest: /etc/nginx/conf.d/kubeapi.shuhong.com.conf
    - name: service
      service:
        name: nginx
        state: restarted
        enabled: yes

[root@k8s-Ansible ansible]#ansible-playbook install_nginx.yml

PLAY [install nginx] ****************************************************************************************************************************************************************************************************************

TASK [Gathering Facts] **************************************************************************************************************************************************************************************************************

ok: [10.0.0.205]

ok: [10.0.0.204]

ok: [10.0.0.206]

TASK [apt] **************************************************************************************************************************************************************************************************************************

ok: [10.0.0.206]

ok: [10.0.0.205]

ok: [10.0.0.204]

TASK [copy] *************************************************************************************************************************************************************************************************************************

ok: [10.0.0.206]

ok: [10.0.0.204]

ok: [10.0.0.205]

TASK [service] **********************************************************************************************************************************************************************************************************************

changed: [10.0.0.204]

changed: [10.0.0.206]

changed: [10.0.0.205]

PLAY RECAP **************************************************************************************************************************************************************************************************************************

10.0.0.204 : ok=4 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.0.0.205 : ok=4 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.0.0.206 : ok=4 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
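The contents of kubeapi.shuhong.com.conf are not shown in these notes. As a rough idea of what a layer-4 proxy to the three masters could look like, here is a sketch that writes an nginx `stream` configuration. Everything in it is an assumption rather than the file actually used: the function name, the upstream name, and the failure-detection parameters are illustrative. Note also that a `stream` block is only valid at the top level of nginx.conf, not inside `http {}`, so where the file is included matters.

```shell
# Sketch: write a hypothetical layer-4 (TCP) proxy config for the API server.
# $1 = path of the file to write.
write_kubeapi_stream_conf() {
  cat > "$1" <<'EOF'
stream {
    upstream kubeapi {
        server 10.0.0.201:6443 max_fails=2 fail_timeout=30s;
        server 10.0.0.202:6443 max_fails=2 fail_timeout=30s;
        server 10.0.0.203:6443 max_fails=2 fail_timeout=30s;
    }
    server {
        listen 6443;
        proxy_pass kubeapi;
    }
}
EOF
}

# Example: render into a scratch file and inspect it before deploying.
conf=$(mktemp)
write_kubeapi_stream_conf "$conf"
cat "$conf"
```

With such a proxy listening on port 6443 of each worker, a kubelet that resolves kubeapi.shuhong.com to its own node would reach whichever master is healthy.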

# Edit /etc/hosts on every node so that kubeapi.shuhong.com points to the node's own IP

[root@k8s-Master-01 ~]#vim /etc/hosts

10.0.0.201 k8s-Master-01 kubeapi.shuhong.com

10.0.0.202 k8s-Master-02

10.0.0.203 k8s-Master-03

10.0.0.204 k8s-Node-01

10.0.0.205 k8s-Node-02

10.0.0.206 k8s-Node-03

10.0.0.207 k8s-Ansible

[root@k8s-Master-02 ~]#vim /etc/hosts

10.0.0.201 k8s-Master-01

10.0.0.202 k8s-Master-02 kubeapi.shuhong.com

10.0.0.203 k8s-Master-03

10.0.0.204 k8s-Node-01

10.0.0.205 k8s-Node-02

10.0.0.206 k8s-Node-03

10.0.0.207 k8s-Ansible

[root@k8s-Master-03 ~]#vim /etc/hosts

10.0.0.201 k8s-Master-01

10.0.0.202 k8s-Master-02

10.0.0.203 k8s-Master-03 kubeapi.shuhong.com

10.0.0.204 k8s-Node-01

10.0.0.205 k8s-Node-02

10.0.0.206 k8s-Node-03

10.0.0.207 k8s-Ansible

[root@k8s-Node-01 ~]#vim /etc/hosts

10.0.0.201 k8s-Master-01

10.0.0.202 k8s-Master-02

10.0.0.203 k8s-Master-03

10.0.0.204 k8s-Node-01 kubeapi.shuhong.com

10.0.0.205 k8s-Node-02

10.0.0.206 k8s-Node-03

10.0.0.207 k8s-Ansible

[root@k8s-Node-02 ~]#vim /etc/hosts

10.0.0.201 k8s-Master-01

10.0.0.202 k8s-Master-02

10.0.0.203 k8s-Master-03

10.0.0.204 k8s-Node-01

10.0.0.205 k8s-Node-02 kubeapi.shuhong.com

10.0.0.206 k8s-Node-03

10.0.0.207 k8s-Ansible

[root@k8s-Node-03 ~]#vim /etc/hosts

10.0.0.201 k8s-Master-01

10.0.0.202 k8s-Master-02

10.0.0.203 k8s-Master-03

10.0.0.204 k8s-Node-01

10.0.0.205 k8s-Node-02

10.0.0.206 k8s-Node-03 kubeapi.shuhong.com

10.0.0.207 k8s-Ansible
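The six files above differ only in which line carries the kubeapi.shuhong.com alias, so they can be generated rather than edited by hand. A minimal sketch, assuming the address list used in this walkthrough (`render_hosts` is a hypothetical helper name):

```shell
# Sketch: print the cluster's hosts entries for one node, attaching the
# kubeapi.shuhong.com alias to the line whose IP matches that node.
# $1 = the node's own IP address.
render_hosts() {
  self_ip=$1
  while read -r ip name; do
    if [ "$ip" = "$self_ip" ]; then
      echo "$ip $name kubeapi.shuhong.com"
    else
      echo "$ip $name"
    fi
  done <<'EOF'
10.0.0.201 k8s-Master-01
10.0.0.202 k8s-Master-02
10.0.0.203 k8s-Master-03
10.0.0.204 k8s-Node-01
10.0.0.205 k8s-Node-02
10.0.0.206 k8s-Node-03
10.0.0.207 k8s-Ansible
EOF
}

# Entries for k8s-Node-01:
render_hosts 10.0.0.204
```

The output only covers the cluster entries; the localhost lines already present in /etc/hosts would need to be kept alongside it.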

# Test

[root@k8s-Node-03 ~]#curl kubeapi.shuhong.com

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "forbidden: User \"system:anonymous\" cannot get path \"/\"",

"reason": "Forbidden",

"details": {},

"code": 403

}

[root@k8s-Master-03 ~]#curl https://kubeapi.shuhong.com:6443 -k

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "forbidden: User \"system:anonymous\" cannot get path \"/\"",

"reason": "Forbidden",

"details": {},

"code": 403

}

# Test running pods

[root@k8s-Master-01 ~]#cd /data/learning-k8s/wordpress/

[root@k8s-Master-01 wordpress]#ls

mysql mysql-ephemeral nginx README.md wordpress wordpress-apache-ephemeral

[root@k8s-Master-01 wordpress]#kubectl apply -f mysql-ephemeral

secret/mysql-user-pass created

service/mysql created

deployment.apps/mysql created

[root@k8s-Master-01 wordpress]#kubectl apply -f wordpress-apache-ephemeral

service/wordpress created

deployment.apps/wordpress created

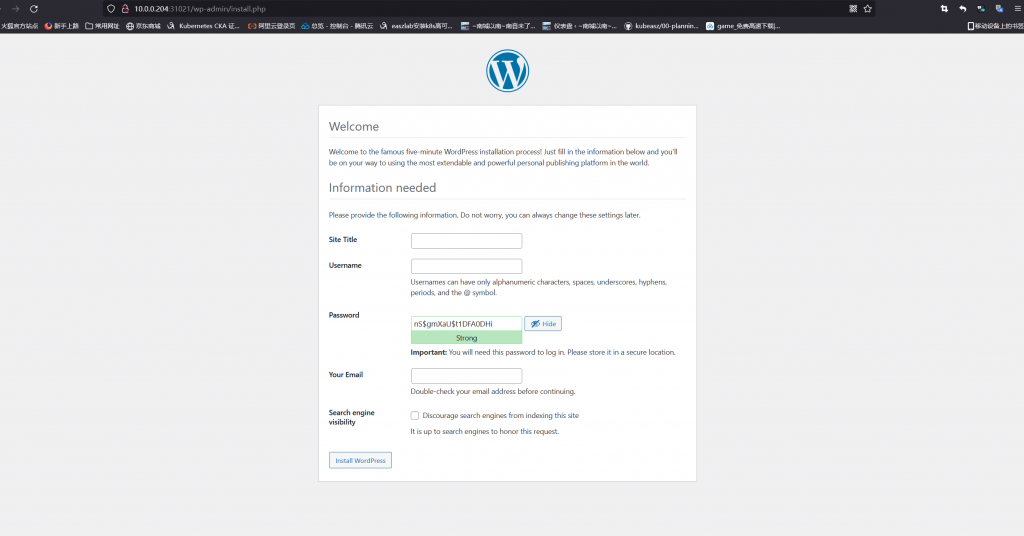

[root@k8s-Master-01 wordpress]#kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 11h

mysql ClusterIP 10.96.146.190 <none> 3306/TCP 5m3s

wordpress NodePort 10.106.224.35 <none> 80:31021/TCP 4m37s

[root@k8s-Master-01 wordpress]#kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mysql-787575d954-sk8s8 1/1 Running 0 6m23s 192.168.204.1 k8s-node-03 <none> <none>

wordpress-6c854887c8-p8snx 1/1 Running 0 5m58s 192.168.127.1 k8s-node-01 <none> <none>

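Imperative commands: the operation to perform on the target object, and the object's configuration, are both specified through command-line options.

Creating demoapp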

[root@k8s-Master-01 wordpress]#kubectl create ns demo

[root@k8s-Master-01 wordpress]#kubectl get ns

NAME STATUS AGE

default Active 12h

demo Active 6m5s

kube-node-lease Active 12h

kube-public Active 12h

kube-system Active 12h

[root@k8s-Master-01 wordpress]#kubectl create deployment demoapp --image=ikubernetes/demoapp:v1.0 --replicas=2 -n demo

deployment.apps/demoapp created

[root@k8s-Master-01 wordpress]#kubectl get pods -n demo

NAME READY STATUS RESTARTS AGE

demoapp-55c5f88dcb-drs6d 1/1 Running 0 112s

demoapp-55c5f88dcb-lfv6c 1/1 Running 0 112s

[root@k8s-Master-01 wordpress]#kubectl describe pods -n demo demoapp-55c5f88dcb-drs6d

Name: demoapp-55c5f88dcb-drs6d

Namespace: demo

Priority: 0

Service Account: default

Node: k8s-node-03/10.0.0.206

Start Time: Wed, 09 Nov 2022 09:33:17 +0800

Labels: app=demoapp

pod-template-hash=55c5f88dcb

Annotations: cni.projectcalico.org/containerID: 118e53a64d8e91af67172457a4fde12b7c82fe66cfa12659f34816369de07552

cni.projectcalico.org/podIP: 192.168.204.10/32

cni.projectcalico.org/podIPs: 192.168.204.10/32

Status: Running

IP: 192.168.204.10

IPs:

IP: 192.168.204.10

Controlled By: ReplicaSet/demoapp-55c5f88dcb

Containers:

demoapp:

Container ID: docker://dc6be64cb2766c1ebc4186331cf4a976acc89c5592e027238716d97b72cf0dcc

Image: ikubernetes/demoapp:v1.0

Image ID: docker-pullable://ikubernetes/demoapp@sha256:6698b205eb18fb0171398927f3a35fe27676c6bf5757ef57a35a4b055badf2c3

Port: <none>

Host Port: <none>

State: Running

Started: Wed, 09 Nov 2022 09:33:54 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-wdkpt (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-wdkpt:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 115s default-scheduler Successfully assigned demo/demoapp-55c5f88dcb-drs6d to k8s-node-03

Normal Pulling 111s kubelet Pulling image "ikubernetes/demoapp:v1.0"

Normal Pulled 80s kubelet Successfully pulled image "ikubernetes/demoapp:v1.0" in 31.012550745s

Normal Created 79s kubelet Created container demoapp

Normal Started 78s kubelet Started container demoapp

[root@k8s-Master-01 wordpress]#kubectl create svc nodeport demoapp --tcp=80:80 -n demo

service/demoapp created

[root@k8s-Master-01 wordpress]#kubectl get svc -n demo

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

demoapp NodePort 10.104.230.57 <none> 80:32462/TCP 8s

[root@k8s-Master-01 wordpress]#curl 10.104.230.57

iKubernetes demoapp v1.0 !! ClientIP: 10.0.0.201, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

[root@k8s-Node-03 ~]#curl 10.104.230.57

iKubernetes demoapp v1.0 !! ClientIP: 10.0.0.206, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

Accessing the Service from a client

Service:

For every Service, CoreDNS automatically generates resource records that clients can resolve:

A, PTR, SRV

service_name.namespace.svc.cluster.local A ClusterIP

[root@k8s-Master-02 ~]#kubectl get svc -n demo

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

demoapp NodePort 10.104.230.57 <none> 80:32462/TCP 17m

# Create a dedicated interactive test client:

[root@k8s-Master-01 wordpress]#kubectl run client-$RANDOM --image=ikubernetes/admin-box:v1.2 --restart=Never -it --rm --command -- /bin/bash

If you don't see a command prompt, try pressing enter.

root@client-25427 /#

[root@k8s-Master-02 ~]#kubectl get pods

NAME READY STATUS RESTARTS AGE

client-25427 1/1 Running 0 7m13s

mysql-787575d954-sk8s8 1/1 Running 0 35m

wordpress-6c854887c8-p8snx 1/1 Running 0 34m

root@client-25427 /# curl 10.104.230.57

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-lfv6c, ServerIP: 192.168.8.9!

root@client-25427 /# curl 10.104.230.57

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

root@client-25427 /# curl 10.104.230.57

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-lfv6c, ServerIP: 192.168.8.9!

root@client-25427 /# curl 10.104.230.57

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

root@client-25427 /# curl 10.104.230.57

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

root@client-25427 /# curl demoapp.demo

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

root@client-25427 /# curl demoapp.demo

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

root@client-25427 /# curl demoapp.demo

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-lfv6c, ServerIP: 192.168.8.9!

root@client-25427 /# curl demoapp.demo

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

root@client-25427 /# curl demoapp.demo

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

root@client-25427 /# host -t A demoapp.demo

demoapp.demo.svc.cluster.local has address 10.104.230.57
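The short name `demoapp.demo` resolves because the pod's resolver appends search domains to names with fewer dots than the `ndots:5` setting. The sketch below lists the candidates that would be tried for a pod using the usual cluster DNS search list. It assumes the default `cluster.local` domain and ignores any search domains inherited from the node; `search_candidates` is a hypothetical name.

```shell
# Sketch: expand a short name the way a pod's search list would.
# $1 = name being looked up, $2 = namespace of the client pod.
search_candidates() {
  name=$1
  pod_ns=$2
  for suffix in "$pod_ns.svc.cluster.local" "svc.cluster.local" "cluster.local"; do
    echo "$name.$suffix"
  done
}

# The test client above runs in the default namespace:
search_candidates demoapp.demo default
```

The second candidate, `demoapp.demo.svc.cluster.local`, is the name reported by `host -t A demoapp.demo` above.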

Dynamic scaling

root@client-25427 /# while true; do curl demoapp.demo;sleep 1 ;done

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-lfv6c, ServerIP: 192.168.8.9!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-lfv6c, ServerIP: 192.168.8.9!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-lfv6c, ServerIP: 192.168.8.9!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

....

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-lfv6c, ServerIP: 192.168.8.9!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-tb6c5, ServerIP: 192.168.127.4!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-lfv6c, ServerIP: 192.168.8.9!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-lfv6c, ServerIP: 192.168.8.9!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-tb6c5, ServerIP: 192.168.127.4!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-lfv6c, ServerIP: 192.168.8.9!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

....

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-lfv6c, ServerIP: 192.168.8.9!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-lfv6c, ServerIP: 192.168.8.9!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-lfv6c, ServerIP: 192.168.8.9!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-lfv6c, ServerIP: 192.168.8.9!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

...
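Raw loop output makes it hard to judge how requests are spread over the backends. Tallying responses by ServerName gives a quicker picture. The following is a sketch that assumes the demoapp response format shown above and that sed, sort, uniq, and awk are available where it runs; `tally_backends` is a hypothetical name.

```shell
# Sketch: count demoapp responses per backend pod.
# Reads response lines on stdin, prints "<pod-name> <count>" per backend.
tally_backends() {
  # Keep only the text between "ServerName: " and the following comma,
  # then count identical names and normalise the column layout.
  sed -n 's/.*ServerName: \([^,]*\),.*/\1/p' | sort | uniq -c | awk '{print $2, $1}'
}

# Inside the client pod (not executed here):
#   for i in $(seq 30); do curl -s demoapp.demo; done | tally_backends
```

Run before and after `kubectl scale`, the tally should gain or lose a line as pods join or leave the Service's endpoints.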

[root@k8s-Master-02 ~]# kubectl scale deployment/demoapp --replicas=3 -n demo

deployment.apps/demoapp scaled

[root@k8s-Master-02 ~]#kubectl get pods -n demo -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-55c5f88dcb-drs6d 1/1 Running 0 28m 192.168.204.10 k8s-node-03 <none> <none>

demoapp-55c5f88dcb-lfv6c 1/1 Running 0 28m 192.168.8.9 k8s-node-02 <none> <none>

demoapp-55c5f88dcb-tb6c5 1/1 Running 0 84s 192.168.127.4 k8s-node-01 <none> <none>

[root@k8s-Master-02 ~]#kubectl scale deployment/demoapp --replicas=2 -n demo

deployment.apps/demoapp scaled

[root@k8s-Master-02 ~]#kubectl get pods -n demo -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-55c5f88dcb-drs6d 1/1 Running 0 29m 192.168.204.10 k8s-node-03 <none> <none>

demoapp-55c5f88dcb-lfv6c 1/1 Running 0 29m 192.168.8.9 k8s-node-02 <none> <none>

Rolling updates and rollbacks

# Upgrade

kubectl set image (-f FILENAME | TYPE NAME) CONTAINER_NAME_1=CONTAINER_IMAGE_1 ... CONTAINER_NAME_N=CONTAINER_IMAGE_N

[root@k8s-Master-02 ~]#kubectl get pods -n demo -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-55c5f88dcb-drs6d 1/1 Running 0 32m 192.168.204.10 k8s-node-03 <none> <none>

demoapp-55c5f88dcb-lfv6c 1/1 Running 0 32m 192.168.8.9 k8s-node-02 <none> <none>

[root@k8s-Master-02 ~]#kubectl describe pods -n demo demoapp-55c5f88dcb-drs6d

Name: demoapp-55c5f88dcb-drs6d

Namespace: demo

Priority: 0

Service Account: default

Node: k8s-node-03/10.0.0.206

Start Time: Wed, 09 Nov 2022 09:33:17 +0800

Labels: app=demoapp

pod-template-hash=55c5f88dcb

Annotations: cni.projectcalico.org/containerID: 118e53a64d8e91af67172457a4fde12b7c82fe66cfa12659f34816369de07552

cni.projectcalico.org/podIP: 192.168.204.10/32

cni.projectcalico.org/podIPs: 192.168.204.10/32

Status: Running

IP: 192.168.204.10

IPs:

IP: 192.168.204.10

Controlled By: ReplicaSet/demoapp-55c5f88dcb

Containers:

demoapp:

Container ID: docker://dc6be64cb2766c1ebc4186331cf4a976acc89c5592e027238716d97b72cf0dcc

Image: ikubernetes/demoapp:v1.0

Image ID: docker-pullable://ikubernetes/demoapp@sha256:6698b205eb18fb0171398927f3a35fe27676c6bf5757ef57a35a4b055badf2c3

Port: <none>

Host Port: <none>

State: Running

Started: Wed, 09 Nov 2022 09:33:54 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-wdkpt (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-wdkpt:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 33m default-scheduler Successfully assigned demo/demoapp-55c5f88dcb-drs6d to k8s-node-03

Normal Pulling 33m kubelet Pulling image "ikubernetes/demoapp:v1.0"

Normal Pulled 32m kubelet Successfully pulled image "ikubernetes/demoapp:v1.0" in 31.012550745s

Normal Created 32m kubelet Created container demoapp

Normal Started 32m kubelet Started container demoapp

[root@k8s-Master-02 ~]#kubectl set image deployment demoapp demoapp=ikubernetes/demoapp:v1.1 -n demo

deployment.apps/demoapp image updated
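Instead of polling `kubectl get pods`, the rollout can be followed with `kubectl rollout status`, and reverted with `kubectl rollout undo`. The wrapper below is a sketch: the function names, the `KUBECTL` override, and the 120s timeout are illustrative assumptions, while the deployment, container, and namespace names are the ones used in this walkthrough.

```shell
# Sketch: upgrade demoapp, wait for the rollout, and offer a rollback.
# KUBECTL can be overridden (for example with "echo kubectl") to preview
# the commands without contacting a cluster.
KUBECTL=${KUBECTL:-kubectl}

# $1 = new image reference, e.g. ikubernetes/demoapp:v1.1
upgrade_and_wait() {
  $KUBECTL set image deployment/demoapp demoapp="$1" -n demo &&
  $KUBECTL rollout status deployment/demoapp -n demo --timeout=120s
}

# Return the Deployment to its previous revision.
rollback() {
  $KUBECTL rollout undo deployment/demoapp -n demo
}

# Example (not executed here):
#   upgrade_and_wait ikubernetes/demoapp:v1.1 || rollback
```

`kubectl rollout status` exits non-zero if the rollout does not finish within the timeout, which is what lets the example line fall through to the rollback.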

[root@k8s-Master-02 ~]#kubectl get pods -n demo -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-55c5f88dcb-drs6d 1/1 Running 0 35m 192.168.204.10 k8s-node-03 <none> <none>

demoapp-55c5f88dcb-lfv6c 1/1 Running 0 35m 192.168.8.9 k8s-node-02 <none> <none>

demoapp-69f8964965-wfqjg 0/1 ContainerCreating 0 12s <none> k8s-node-01 <none> <none>

[root@k8s-Master-02 ~]#kubectl describe pods -n demo demoapp-69f8964965-wfqjg

Name: demoapp-69f8964965-wfqjg

Namespace: demo

Priority: 0

Service Account: default

Node: k8s-node-01/10.0.0.204

Start Time: Wed, 09 Nov 2022 10:08:06 +0800

Labels: app=demoapp

pod-template-hash=69f8964965

Annotations: cni.projectcalico.org/containerID: 742cc2c7f26301b4c93187199ae4f5ec4fca8bea1dcd1c621d19b61f1d7486ab

cni.projectcalico.org/podIP: 192.168.127.5/32

cni.projectcalico.org/podIPs: 192.168.127.5/32

Status: Pending

IP:

IPs: <none>

Controlled By: ReplicaSet/demoapp-69f8964965

Containers:

demoapp:

Container ID:

Image: ikubernetes/demoapp:v1.1

Image ID:

Port: <none>

Host Port: <none>

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-4sxfq (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

kube-api-access-4sxfq:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 26s default-scheduler Successfully assigned demo/demoapp-69f8964965-wfqjg to k8s-node-01

Normal Pulling 25s kubelet Pulling image "ikubernetes/demoapp:v1.1"

root@client-25427 /# while true; do curl demoapp.demo;sleep 1 ;done

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-drs6d, ServerIP: 192.168.204.10!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-r7tdd, ServerIP: 192.168.204.11!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-r7tdd, ServerIP: 192.168.204.11!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-r7tdd, ServerIP: 192.168.204.11!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-r7tdd, ServerIP: 192.168.204.11!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-wfqjg, ServerIP: 192.168.127.5!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-r7tdd, ServerIP: 192.168.204.11!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-r7tdd, ServerIP: 192.168.204.11!

iKubernetes demoapp v1.1 !! ClientIP: 192.168.8.10, ServerName: demoapp-69f8964965-r7tdd, ServerIP: 192.168.204.11!

[root@k8s-Master-02 ~]#kubectl get pods -n demo -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-69f8964965-r7tdd 1/1 Running 0 85s 192.168.204.11 k8s-node-03 <none> <none>

demoapp-69f8964965-wfqjg 1/1 Running 0 2m6s 192.168.127.5 k8s-node-01 <none> <none>
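The curl loop above shows v1.0 and v1.1 answering side by side while the rolling update is in progress. A minimal sketch for tallying that mix, assuming awk and sort are available in the client pod; the function name count_versions is made up for this note:

```shell
# Tally demoapp responses by version.
# Expects curl output lines on stdin such as:
#   iKubernetes demoapp v1.1 !! ClientIP: ..., ServerName: ..., ServerIP: ...!
count_versions() {
  awk '/demoapp v[0-9]/ {
         count[$3]++            # third field is the version string, e.g. v1.1
       }
       END { for (v in count) print v, count[v] }' | sort
}

# Possible usage inside the client pod (30 requests, one per second):
#   for i in $(seq 1 30); do curl -s demoapp.demo; sleep 1; done | count_versions
```

Once the rollout has finished, only one version should remain in the tally.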

# Rollback

kubectl rollout history (TYPE NAME | TYPE/NAME) [flags] [options]

[root@k8s-Master-02 ~]#kubectl rollout history deployment demoapp -n demo

deployment.apps/demoapp

REVISION CHANGE-CAUSE

1 <none>

2 <none>

kubectl rollout undo (TYPE NAME | TYPE/NAME) [flags] [options]

[root@k8s-Master-02 ~]#kubectl rollout undo deployment demoapp -n demo

deployment.apps/demoapp rolled back
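Without extra flags, kubectl rollout undo returns to the previous revision. When the target should be explicit, a revision from the history list can be inspected and selected. A small sketch; the function names and the KUBECTL variable are assumptions for illustration, while the kubectl flags are the standard ones:

```shell
KUBECTL="${KUBECTL:-kubectl}"   # can be overridden, e.g. to stub kubectl in a test

# Show the pod template recorded for one revision of a Deployment.
# usage: show_revision NAMESPACE DEPLOYMENT REVISION
show_revision() {
  "$KUBECTL" rollout history "deployment/$2" -n "$1" --revision="$3"
}

# Roll back to an explicit revision, then wait for the rollout to finish.
# usage: rollback_to NAMESPACE DEPLOYMENT REVISION
rollback_to() {
  "$KUBECTL" rollout undo "deployment/$2" -n "$1" --to-revision="$3" &&
  "$KUBECTL" rollout status "deployment/$2" -n "$1"
}

# Example: rollback_to demo demoapp 1
```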

[root@k8s-Master-02 ~]#kubectl get pods -n demo -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-55c5f88dcb-cjlhc 1/1 Running 0 32s 192.168.8.11 k8s-node-02 <none> <none>

demoapp-55c5f88dcb-qdh6v 1/1 Running 0 25s 192.168.127.6 k8s-node-01 <none> <none>

demoapp-69f8964965-r7tdd 1/1 Terminating 0 6m6s 192.168.204.11 k8s-node-03 <none> <none>

demoapp-69f8964965-wfqjg 1/1 Terminating 0 6m47s 192.168.127.5 k8s-node-01 <none> <none>

root@client-25427 /# while true; do curl demoapp.demo;sleep 1 ;done

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-cjlhc, ServerIP: 192.168.8.11!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-cjlhc, ServerIP: 192.168.8.11!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-cjlhc, ServerIP: 192.168.8.11!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-cjlhc, ServerIP: 192.168.8.11!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-cjlhc, ServerIP: 192.168.8.11!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-cjlhc, ServerIP: 192.168.8.11!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.8.10, ServerName: demoapp-55c5f88dcb-qdh6v, ServerIP: 192.168.127.6!

[root@k8s-Master-02 ~]#kubectl get pods -n demo -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-55c5f88dcb-cjlhc 1/1 Running 0 53s 192.168.8.11 k8s-node-02 <none> <none>

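demoapp-55c5f88dcb-qdh6v 1/1 Running 0 46s 192.168.127.6 k8s-node-01 <none> <none>

Imperative object configuration: objects are defined in configuration files, and the kubectl command names the operation (create, replace, delete, and so on) to perform on them.

# For example: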

kubectl get -f nodes.yaml

kubectl delete -f nodes.yaml

[root@k8s-Master-01 wordpress]#kubectl get pods

NAME READY STATUS RESTARTS AGE

mysql-787575d954-sk8s8 1/1 Running 0 88m

wordpress-6c854887c8-p8snx 1/1 Running 0 87m

[root@k8s-Master-01 wordpress]#kubectl delete -f mysql-ephemeral/

secret "mysql-user-pass" deleted

service "mysql" deleted

deployment.apps "mysql" deleted

[root@k8s-Master-01 wordpress]#kubectl delete -f wordpress-apache-ephemeral/

service "wordpress" deleted

deployment.apps "wordpress" deleted

[root@k8s-Master-01 wordpress]#kubectl get pods

NAME READY STATUS RESTARTS AGE

wordpress-6c854887c8-p8snx 0/1 Terminating 0 88m

[root@k8s-Master-01 wordpress]#kubectl get pods

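No resources found in default namespace.

Declarative object configuration: objects are defined in configuration files, and kubectl apply works out which create or update operations are needed to reach that state.

Deploy WordPress with hand-edited YAML files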

[root@k8s-Master-01 mysql]#kubectl create service clusterip mydb --tcp=3306:3306 --dry-run=client -o yaml > /data/test/mysql/01-service-mydb.yaml

[root@k8s-Master-01 mysql]#cat 01-service-mydb.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: mydb
  name: mydb
spec:
  ports:
  - name: 3306-3306
    port: 3306
    protocol: TCP
    targetPort: 3306
  selector:
    app: mydb
  type: ClusterIP
status:
  loadBalancer: {}

[root@k8s-Master-01 wordpress]#kubectl create service nodeport wordpress --tcp=80:80 --dry-run=client -o yaml > /data/test/wordpress/01-service-wordpress.yaml

[root@k8s-Master-01 wordpress]#cat 01-service-wordpress.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: wordpress
  name: wordpress
spec:
  ports:
  - name: 80-80
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: wordpress
  type: NodePort
status:
  loadBalancer: {}

[root@k8s-Master-01 test]#vim mysql/02-deploy-mydb.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mydb
  namespace: default
  labels:
    app: mydb
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mydb
  template:
    metadata:
      labels:
        app: mydb
    spec:
      containers:
      - name: mysql
        image: mysql:8.0
        env:
        - name: MYSQL_RANDOM_ROOT_PASSWORD
          value: "1"
        - name: MYSQL_DATABASE
          value: wpdb
        - name: MYSQL_USER
          value: wpuser
        - name: MYSQL_PASSWORD
          value: "123456"

[root@k8s-Master-01 test]#vim wordpress/02-deploy-wordpress.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress
  namespace: default
  labels:
    app: wordpress
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wordpress
  template:
    metadata:
      labels:
        app: wordpress
    spec:
      containers:
      - name: wordpress
        image: wordpress:5.7
        env:
        - name: WORDPRESS_DB_HOST
          value: mydb
        - name: WORDPRESS_DB_NAME
          value: wpdb
        - name: WORDPRESS_DB_USER
          value: wpuser
        - name: WORDPRESS_DB_PASSWORD
          value: "123456"

[root@k8s-Master-01 test]#kubectl apply -f wordpress/

service/wordpress created

deployment.apps/wordpress created

[root@k8s-Master-01 test]#kubectl apply -f mysql/

service/mydb configured

deployment.apps/mydb created
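The two apply commands above can also be combined, with a server-side dry run beforehand and a wait for both Deployments afterwards. A sketch under the assumption that it is run from /data/test with the mysql/ and wordpress/ directories used in these notes; the function names and the 120s timeout are arbitrary choices:

```shell
KUBECTL="${KUBECTL:-kubectl}"   # can be overridden, e.g. to stub kubectl in a test

# Ask the API server to validate the manifests without persisting anything.
preview_stack() {
  "$KUBECTL" apply --dry-run=server -f mysql/ -f wordpress/
}

# Apply the database first, then WordPress, and wait for both rollouts.
deploy_stack() {
  "$KUBECTL" apply -f mysql/ -f wordpress/ &&
  "$KUBECTL" rollout status deployment/mydb --timeout=120s &&
  "$KUBECTL" rollout status deployment/wordpress --timeout=120s
}
```

Because apply is declarative, running deploy_stack again after editing a manifest only changes the objects whose definitions differ.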

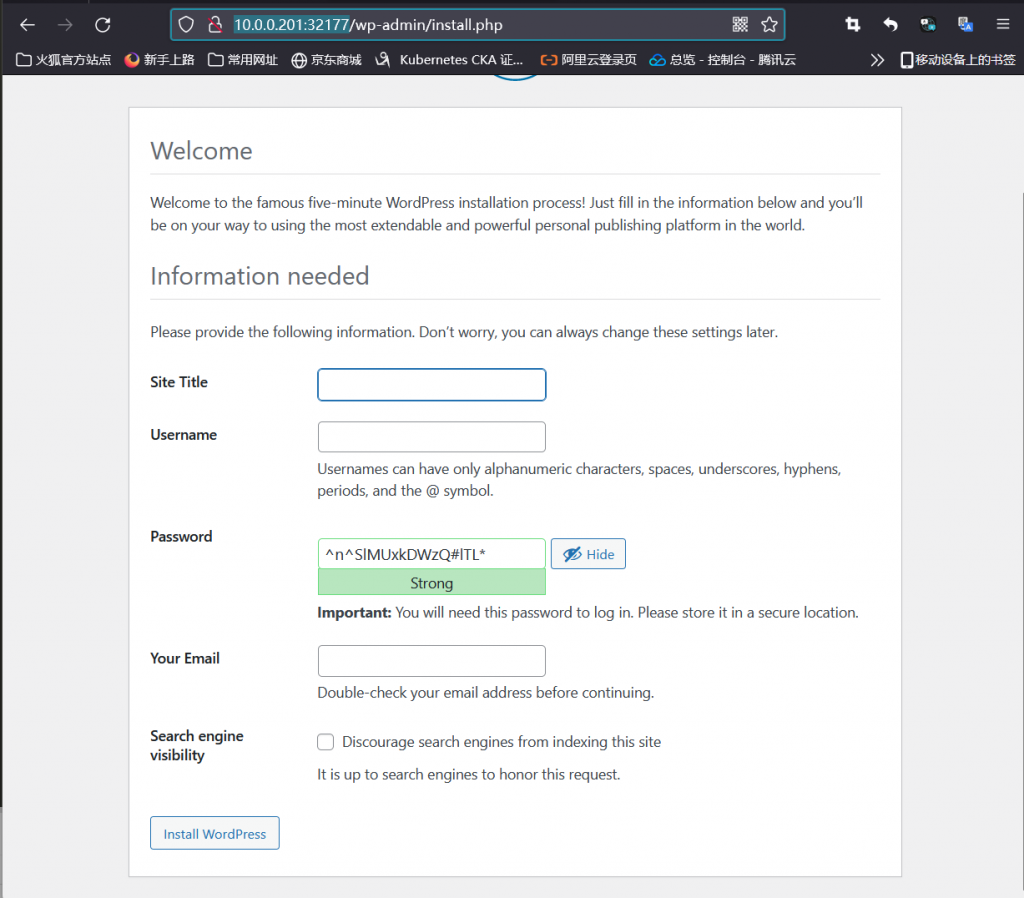

[root@k8s-Master-01 test]#kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 13h

mydb ClusterIP 10.110.129.77 <none> 3306/TCP 20m

wordpress NodePort 10.109.147.168 <none> 80:32177/TCP 5m37s

[root@k8s-Master-01 test]#kubectl get pods

NAME READY STATUS RESTARTS AGE

mydb-57786547bd-hkzbn 1/1 Running 0 11m

wordpress-7c468fbc8d-9d784 1/1 Running 0 5m41s

Configure health probes

[root@k8s-Master-01 test]#kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mydb-5dccf4cbf7-2tmbw 1/1 Running 0 48m 192.168.204.29 k8s-node-03 <none> <none>

wordpress-579c794b44-rmctx 0/1 Running 0 65s 192.168.8.18 k8s-node-02 <none> <none>

[root@k8s-Master-01 test]#kubectl describe pods wordpress-579c794b44-rmctx

Name: wordpress-579c794b44-rmctx

Namespace: default

Priority: 0

Service Account: default

Node: k8s-node-02/10.0.0.205

Start Time: Wed, 09 Nov 2022 15:56:46 +0800

Labels: app=wordpress

pod-template-hash=579c794b44

Annotations: cni.projectcalico.org/containerID: a73bf090a8b0bd6727552b00ea52ec9ff5115990aeef71492274328e9aa4fea7

cni.projectcalico.org/podIP: 192.168.8.18/32

cni.projectcalico.org/podIPs: 192.168.8.18/32

Status: Running

IP: 192.168.8.18

IPs:

IP: 192.168.8.18

Controlled By: ReplicaSet/wordpress-579c794b44

Containers:

wordpress:

Container ID: docker://c30b46ed34b0bbf06fd194f505c3cf383b6feeef68ba55c64e4f78c39171af72

Image: wordpress:5.7

Image ID: docker-pullable://wordpress@sha256:37be4c1afbce8025ebaca553b70d9ce5d7ce4861a351e4bea42c36ee966b27bb

Port: <none>

Host Port: <none>

State: Running

Started: Wed, 09 Nov 2022 15:56:50 +0800

Ready: False

Restart Count: 0

Liveness: http-get http://:80/wp-admin/install.php delay=30s timeout=2s period=10s #success=1 #failure=3

Readiness: http-get http://:80/wp-login.php delay=60s timeout=2s period=10s #success=1 #failure=3

Startup: http-get http://:80/wp-admin/install.php delay=0s timeout=1s period=3s #success=1 #failure=3

Environment:

WORDPRESS_DB_HOST: mydb

WORDPRESS_DB_NAME: wpdb

WORDPRESS_DB_USER: wpuser

WORDPRESS_DB_PASSWORD: 123456

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-72v26 (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

kube-api-access-72v26:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 67s default-scheduler Successfully assigned default/wordpress-579c794b44-rmctx to k8s-node-02

Normal Pulled 63s kubelet Container image "wordpress:5.7" already present on machine

Normal Created 63s kubelet Created container wordpress

Normal Started 63s kubelet Started container wordpress

Warning Unhealthy 61s kubelet Startup probe failed: Get "http://192.168.8.18:80/wp-admin/install.php": dial tcp 192.168.8.18:80: connect: connection refused

Warning Unhealthy 57s kubelet Startup probe failed: Get "http://192.168.8.18:80/wp-admin/install.php": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

[root@k8s-Master-01 test]#kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mydb-5dccf4cbf7-2tmbw 1/1 Running 0 48m 192.168.204.29 k8s-node-03 <none> <none>

wordpress-579c794b44-rmctx 1/1 Running 0 73s 192.168.8.18 k8s-node-02 <none> <none>
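The manifest that produced the Liveness, Readiness, and Startup lines in the describe output is not shown in these notes. The following is a reconstruction from that output, not the original file: a strategic merge patch that would add equivalent probes to the wordpress container. The function name probe_patch is hypothetical.

```shell
# Print a strategic merge patch carrying the three probes seen in the
# describe output (paths, delays, timeouts, and periods copied from it).
probe_patch() {
  cat <<'EOF'
spec:
  template:
    spec:
      containers:
      - name: wordpress
        startupProbe:
          httpGet:
            path: /wp-admin/install.php
            port: 80
          initialDelaySeconds: 0
          timeoutSeconds: 1
          periodSeconds: 3
          failureThreshold: 3
        livenessProbe:
          httpGet:
            path: /wp-admin/install.php
            port: 80
          initialDelaySeconds: 30
          timeoutSeconds: 2
          periodSeconds: 10
        readinessProbe:
          httpGet:
            path: /wp-login.php
            port: 80
          initialDelaySeconds: 60
          timeoutSeconds: 2
          periodSeconds: 10
EOF
}

# Possible usage:
#   kubectl patch deployment wordpress --patch "$(probe_patch)"
```

With failureThreshold 3 and periodSeconds 3, the startup probe allows roughly 9 seconds before the kubelet restarts the container, which matches the two early "Startup probe failed" events above; a slower node may need a larger threshold.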

[root@k8s-Master-01 test]#kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 18h

mydb ClusterIP 10.107.249.52 <none> 3306/TCP 49m

wordpress NodePort 10.102.151.208 <none> 80:30817/TCP 86s

Add resource requests and limits

[root@k8s-Master-01 test]#vim mysql/02-deploy-mydb.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mydb
  namespace: default
  labels:
    app: mydb
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mydb
  template:
    metadata:
      labels:
        app: mydb
    spec:
      containers:
      - name: mysql
        image: mysql:8.0
        # ports:
        # - name: tcp
        #   containerPort: 3306
        # securityContext:
        #   capabilities:
        #     add:
        #     - NET_ADMIN
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"
        - name: MYSQL_DATABASE
          value: wpdb
        - name: MYSQL_USER
          value: wpuser
        - name: MYSQL_PASSWORD
          value: "123456"
        resources:
          requests:
            memory: "128M"
            cpu: "200m"
          limits:
            memory: "512M"
            cpu: "400m"
        # startupProbe:
        #   exec:
        #     command: ['/bin/sh','-c','[ "$(mysql -uroot -p123456 -e "show databases" > /dev/null && echo $?)" == "0" ] ']
        #   initialDelaySeconds: 0
        #   failureThreshold: 5
        #   periodSeconds: 3
        # livenessProbe:

[root@k8s-Master-01 test]#vim wordpress/02-deploy-wordpress.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress
  namespace: default
  labels:
    app: wordpress
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wordpress
  template:
    metadata:
      labels:
        app: wordpress
    spec:
      containers:
      - name: wordpress
        image: wordpress:5.7
        env:
        - name: WORDPRESS_DB_HOST
          value: mydb
        - name: WORDPRESS_DB_NAME
          value: wpdb
        - name: WORDPRESS_DB_USER
          value: wpuser
        - name: WORDPRESS_DB_PASSWORD
          value: "123456"
        resources:
          requests:
            memory: "128M"
            cpu: "200m"
          limits:
            memory: "512M"
            cpu: "400m"
        startupProbe:
          exec:
            # Passes once wp-config.php exists under /var/www/html.
            # (The earlier find-based test always exited 0 and misspelled the file name.)
            command: ['/bin/bash','-c','[ -f /var/www/html/wp-config.php ]']
          initialDelaySeconds: 0
          failureThreshold: 10
          periodSeconds: 3
        # livenessProbe:
        #   httpGet:
        #     path: '/'
        #     port: 80
        #     scheme: HTTP
        #   initialDelaySeconds: 30
        #   timeoutSeconds: 2
        # readinessProbe:
        #   httpGet:
        #     path: '/'
        #     port: 80
        #     scheme: HTTP
        #   initialDelaySeconds: 60
        #   timeoutSeconds: 2
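The earlier describe output reported QoS Class: BestEffort because no requests or limits were set. With requests set below limits, as in the two manifests above, the pods should be classed as Burstable instead. A quick way to check, as a sketch; the function name qos_classes is an assumption, and the app label values come from the manifests in these notes:

```shell
KUBECTL="${KUBECTL:-kubectl}"   # can be overridden, e.g. to stub kubectl in a test

# Print "<pod name> <QoS class>" for every pod carrying the label app=<name>.
# usage: qos_classes APP_LABEL_VALUE
qos_classes() {
  "$KUBECTL" get pods -l "app=$1" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.qosClass}{"\n"}{end}'
}

# Example:
#   qos_classes mydb
#   qos_classes wordpress
```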